Introduction

A virtualization cluster is a group of servers integrated into a unified computing environment through virtualization technologies. It enables the efficient utilization of hardware resources by distributing capacity among virtual machines or containers. This approach allows for the creation of a manageable, flexible, and scalable IT infrastructure. Virtualization clusters are commonly used to optimize application performance, accelerate service deployment, reduce hardware costs, and ensure high availability for mission-critical systems.

As data volumes increase, the architecture of information systems becomes more complex, and regulatory requirements grow stricter, the design of virtualization clusters is becoming an increasingly challenging task. It is essential to take into account numerous factors, including reliability, security, data storage requirements, and more. Additionally, it is important to analyze the existing infrastructure and assess potential limitations.

This article examines the fundamental approaches to virtualization cluster design, beginning with the data collection and analysis phase. It describes the key business and technical requirements, widely used methodologies, common constraints and strategies for addressing them, as well as considerations for the interaction of clusters with related systems. The approaches presented here support the development of an efficient, flexible architecture that aligns with business objectives.

Data Collection and Analysis

When designing virtualization clusters, the first step is to collect and analyze data from four main areas:

1. Information Systems (IS). The project may focus either on deploying new IS or upgrading existing ones, so it is important to analyze the requirements for both the planned and current systems. Specifically:

- Reliability requirements. The more critical the system is, the higher the availability and fault tolerance indicators must be. Downtime cost per unit of time is often used as an evaluation metric.

- Security requirements. It is necessary to consider risks associated with potential data breaches, cyber threats, and internal incidents. Defining data protection mechanisms and access levels is crucial.

- Computational power requirements. These are determined by the load on processors, RAM, and other resources. The assessment should include both current performance indicators and projected growth. Scalability is an important aspect, especially if the system is expected to grow actively.

- Architecture requirements. These define the components of the virtualization cluster: which technologies will be used and how interaction between the various system levels will be organized. For example, the cluster architecture may be centralized, where all resources are managed through a single virtualization platform, or distributed, where capacities are divided among multiple nodes.

- Data volume requirements. Similar to computational power requirements, it is important to consider not only current indicators but also projected growth.

2. Stored Data. A competent approach to data management enables optimization of storage usage, reduces the load on infrastructure, and ensures compliance with regulatory requirements. The following factors must be considered:

- Data volume and growth rates. If the system processes large amounts of data or a significant increase in data volume is anticipated, mechanisms for dynamic storage scaling should be provided, along with an archiving strategy.

- Data classification. This allows determining the impact of data on the company's business processes. For example, data can be divided into critical and non-critical categories, which influences the choice of backup, protection, and recovery methods. Categorization may be supported by evaluating the cost of data loss over a specific time period or for a specific number of users.

- Regulatory requirements for data storage. Some categories of information require special conditions, access restrictions, encryption, and long-term storage in accordance with legal regulations. This is particularly relevant for personal, medical, and financial data.

3. Existing Infrastructure Information. Often, the design of a virtualization cluster does not start from scratch (greenfield) but involves expanding or upgrading the current system (brownfield). This can impose limitations on system design. This category includes:

- System placement geography. This determines where the computing capacities will be located: in a single data center, in multiple distributed data centers, or in the cloud. It affects how replication, load balancing, and other processes will be organized.

- Software and hardware in use. Integration capabilities with existing technologies must be taken into account. For example, if the company has already deployed certain virtualization or data storage systems, their compatibility with new solutions should be verified.

- Current architecture. Similar to the previous point, if the company already uses containerization or specific types of hypervisors, this factor must be considered when designing the virtualization cluster.

4. Regulatory and Legal Requirements. In some industries, regulations govern not only data storage but also data processing. This includes rules for data transmission, encryption, user action logging, and reporting to regulatory authorities. These regulations primarily apply to healthcare, finance, and energy sectors. Failure to comply with regulations may result in significant fines and restrictions on business operations, so it is essential to address them at the early stages of design.
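
The computational power and data volume items above call for current figures plus projected growth. The following is a minimal sketch of such a projection. It assumes compound annual growth and a fixed safety headroom; the function name, the growth model, and the 20% default headroom are illustrative assumptions rather than part of any methodology cited in this article.

```python
def project_capacity(current, annual_growth, years, headroom=0.2):
    """Estimate the capacity to provision after `years` of compound growth.

    current        -- today's measured demand (cores, GB of RAM, TB of storage, ...)
    annual_growth  -- assumed yearly growth as a fraction (0.25 means 25%)
    years          -- planning horizon in years
    headroom       -- extra safety margin added on top of the projection
    """
    if current < 0 or years < 0 or headroom < 0 or annual_growth <= -1:
        raise ValueError("inputs must describe a non-negative, meaningful projection")
    projected = current * (1 + annual_growth) ** years
    return projected * (1 + headroom)


if __name__ == "__main__":
    # Hypothetical input: 40 TB of storage today, 25% yearly growth, 3-year horizon.
    print(round(project_capacity(40, 0.25, 3), 2), "TB to provision")
```

The same function can be applied separately to CPU, RAM, and storage figures collected during the interviews, with a different growth assumption for each.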

To collect the necessary data, it is important to interview the stakeholders on the client's side: information system owners and heads of departments. This helps identify priority tasks, requirements, and constraints. It is also crucial to analyze existing documentation, including technical specifications, load reports, and regulatory documents. TOGAF can serve as a methodological approach: it calls for identifying key stakeholders and defining issues and requirements at the stage of forming a common architectural vision [1].

Requirements Formulation

After collecting and analyzing data, the company can formulate business and technical requirements that form the foundation of the future IT architecture. These requirements can be divided into functional and non-functional categories.

Functional requirements define the tasks that the system must perform. They focus on its behavior and capabilities, describing specific operations. Such operations may include, for example, processing user requests or managing databases. Non-functional requirements refer to system design principles and are not directly related to its functionality. These include security, manageability, availability, fault recovery, and performance. They often stem from regulatory standards.

For example, under strict data protection legislation, there may be a requirement to encrypt data at rest (during storage) and in transit (during transmission). Another example: business development goals may be translated into non-functional performance requirements. They can be formulated as follows: “The infrastructure must support the growth of users in information system X to 10,000 people while maintaining request processing times (95% of requests are processed within 2 seconds).”
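
A requirement phrased as "95% of requests are processed within 2 seconds" can be checked against measured response times. The sketch below is one possible way to do so. It assumes the nearest-rank definition of a percentile, which is only one of several in common use, and the function names are hypothetical.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the value at the given percentile using the nearest-rank method."""
    if not samples:
        raise ValueError("at least one sample is required")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    # Nearest rank: the smallest rank r such that r / n >= pct / 100.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_requirement(samples, pct=95, limit_seconds=2.0):
    """True if at least `pct` percent of the samples are within the limit."""
    return percentile_nearest_rank(samples, pct) <= limit_seconds


if __name__ == "__main__":
    # Hypothetical load-test result: 95 fast requests and 5 slow ones.
    measured = [0.5] * 95 + [3.0] * 5
    print(meets_latency_requirement(measured))
```

Running such a check against load-test data at the target user count turns the requirement into a pass/fail criterion.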

Ultimately, the requirements should address three key aspects [2]:

- Technical (the “what” question) – what technologies will be applied and why. It is important to note that a requirement is not the use of a specific solution (this falls under constraints), but a request that justifies the selection of a particular technology or product. For example, one set of technical requirements may lead to the choice of the VMware ESXi hypervisor, while another may favor Microsoft Hyper-V.

- Organizational (the “who” question) – who will be responsible for specific aspects of system operation, monitoring, and lifecycle management.

- Operational (the “how” question) – how operations will be performed, including deployment, updates, access management, and incident handling.

It should be noted that stakeholders formulate requirements differently. Business-oriented client representatives often focus on functional aspects, while technical specialists pay more attention to non-functional requirements. However, this is not a strict rule. For instance, business stakeholders may define the cost of downtime for an information system, which ultimately translates into availability and recovery time requirements (and the budget allocated for these aspects).
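
The link between downtime cost and availability requirements can be made concrete with simple arithmetic. The sketch below converts an availability target into the downtime it permits per year and into the corresponding cost exposure. The 8,760-hour year (leap years ignored) and the cost figure in the usage example are assumptions chosen for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours; leap years are ignored in this sketch


def allowed_downtime_hours(availability_pct):
    """Hours of downtime per year permitted by an availability target in percent."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return HOURS_PER_YEAR * (1 - availability_pct / 100)


def annual_downtime_cost(availability_pct, cost_per_hour):
    """Worst-case yearly cost if all of the permitted downtime is actually used."""
    return allowed_downtime_hours(availability_pct) * cost_per_hour


if __name__ == "__main__":
    # Hypothetical downtime cost of 10,000 currency units per hour.
    for target in (99.0, 99.9, 99.99):
        hours = allowed_downtime_hours(target)
        cost = annual_downtime_cost(target, 10_000)
        print(f"{target}% -> {hours:.2f} h/year, exposure {cost:,.0f}")
```

Comparing the exposure at each availability level with the price of the redundancy needed to reach it gives stakeholders a basis for setting the budget.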

Constraints and Assumptions

In addition to requirements, constraints and assumptions arise when designing virtualization clusters. Constraints define the boundaries within which the architect must operate. These may be caused by various factors – technological, operational, or financial.

Technological constraints are often related to the need to use certain solutions. For example, a company may already have network equipment from a particular vendor deployed, and replacing it with alternatives is not feasible due to the high cost of migration or a shortage of specialists. This can affect the design of network connectivity and routing. Another common constraint is network link architecture and bandwidth. If the infrastructure is already built on a particular topology, some load balancing or redundancy mechanisms may not be usable.

Operational constraints arise when existing business processes prevent the introduction of new technologies. For example, if a company has a dedicated storage support department specializing in Fibre Channel (a family of protocols for high-speed data transfer), employees may resist implementing hyperconverged solutions, which require a radically different management model. In such cases, it is necessary either to adapt the project to existing competencies or to provide for staff training and process changes in advance.

Financial constraints can limit the choice of hardware solutions, the level of redundancy, software licensing, and even the scaling strategy. For example, if the budget does not allow the purchase of premium equipment for high-performance storage, compromise options have to be found.

In addition to constraints, there are assumptions: hypotheses about the future state of the system that have not yet been confirmed. They often relate to load growth and scaling. For example, an assumption may be made that “the number of system users will grow by no more than 20% in two years.” However, if actual growth turns out to be higher, the project may face resource constraints. Therefore, it is important to validate assumptions where possible, for example through load testing and analysis of historical data, and to allow for future adaptation of the architecture.
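
One way to test a growth assumption against historical data is to annualize the observed growth and project it over the assumption's horizon. The sketch below does this for the "no more than 20% in two years" example. It assumes that growth is compound and that the historical rate continues unchanged, which is itself a simplification; all function names are illustrative.

```python
def annualized_growth(start_users, end_users, years):
    """Compound annual growth rate observed between two measurements."""
    if start_users <= 0 or end_users <= 0 or years <= 0:
        raise ValueError("user counts and period must be positive")
    return (end_users / start_users) ** (1 / years) - 1


def assumption_holds(start_users, end_users, observed_years,
                     horizon_years=2, max_growth=0.20):
    """Check whether the historical rate, if sustained, stays within the assumed limit."""
    rate = annualized_growth(start_users, end_users, observed_years)
    projected_growth = (1 + rate) ** horizon_years - 1
    return projected_growth <= max_growth


if __name__ == "__main__":
    # Hypothetical history: 1,000 users a year ago, 1,100 users today.
    print(assumption_holds(1000, 1100, observed_years=1))
```

If the check fails, the assumption should be revised or recorded as a risk, and the design should leave room for expansion.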

Basic Design Approaches

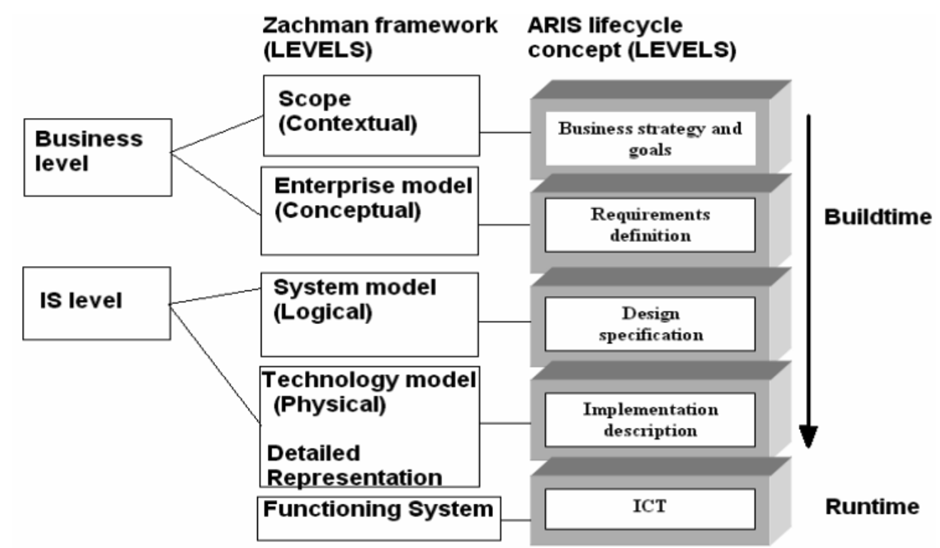

Frameworks are used to structure the design process. Among the most common are ARIS [3], Zachman [4, 5], and TOGAF [1].

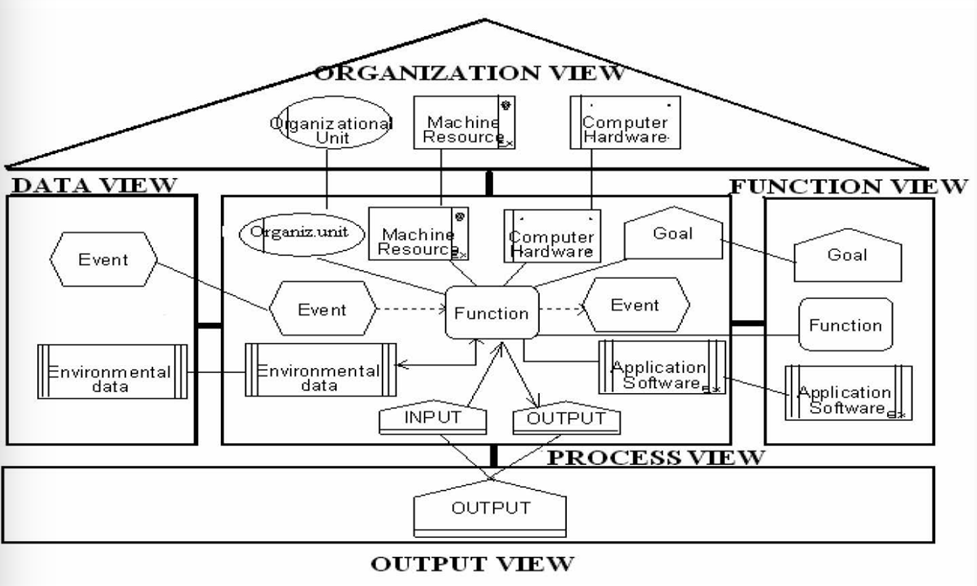

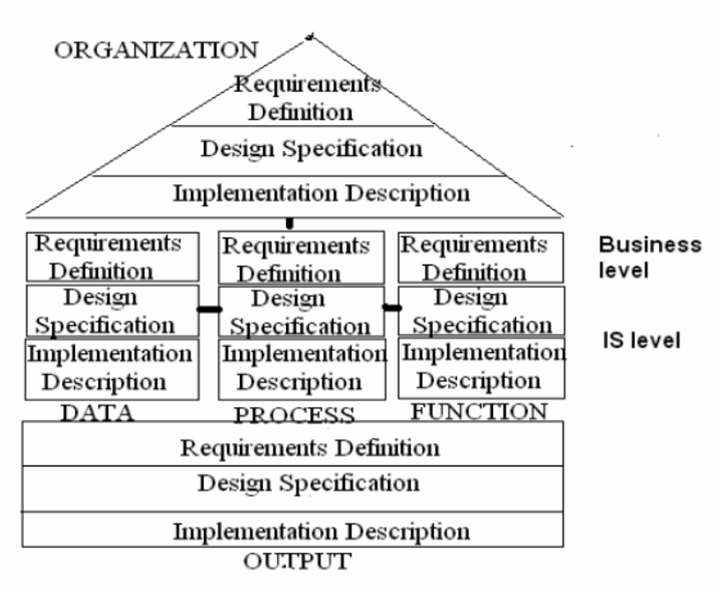

ARIS (Architecture of Integrated Information Systems) is a framework for modeling, analyzing and optimizing business processes and information systems. It offers a layered approach to design and divides the enterprise architecture into five key elements: organizational structure, data, functions, process management, and results. Each of the five aspects is also divided into three levels of detail – conceptual, technical and implementation. This helps structure the transition from a business idea to its technical implementation. The framework helps formalize infrastructure requirements and optimize resource management. It can also be used to graphically model complex systems, which makes it a convenient tool for analyzing and visualizing relationships.

Fig. 1. Model of business processes in ARIS methodology [3]

Fig. 2. Architecture in the ARIS methodology [3]

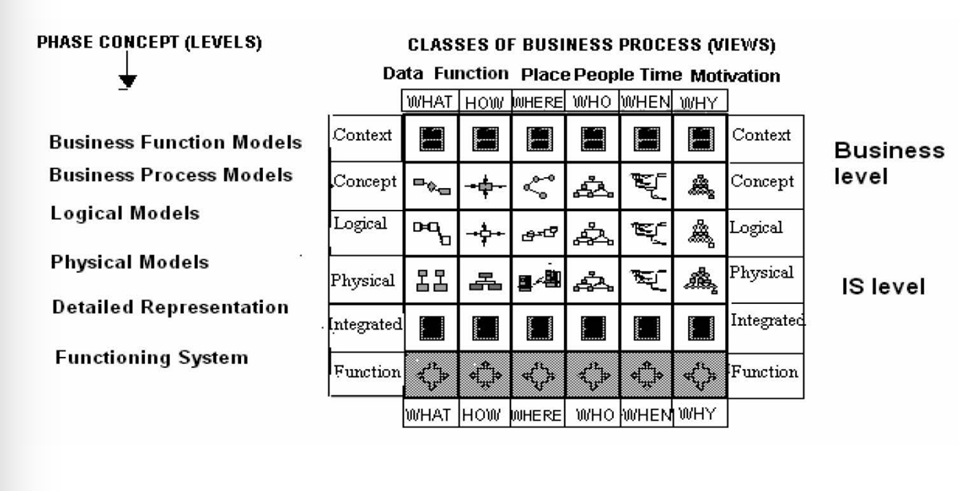

The Zachman Framework is also a multidimensional model of enterprise architecture that allows it to be viewed from different perspectives, from the strategic level to technical implementation. It is based on a matrix in which each row corresponds to a certain level of detail (from conceptual to operational) and each column covers a specific aspect of the system (“What?”, “How?”, “Where?”, “Who?”, “When?”, “Why?”). This approach helps link the strategic goals of the business to IT solutions. For example, when developing a cluster architecture, the architect can clearly define what data will be processed (“What?”), what technology will be used (“How?”), where it will be deployed (“Where?”), who will be responsible for management (“Who?”), in what timeframe the project will be implemented (“When?”), and why this particular solution is chosen (“Why?”).

Fig. 3. Architecture in Zachman's methodology [3]

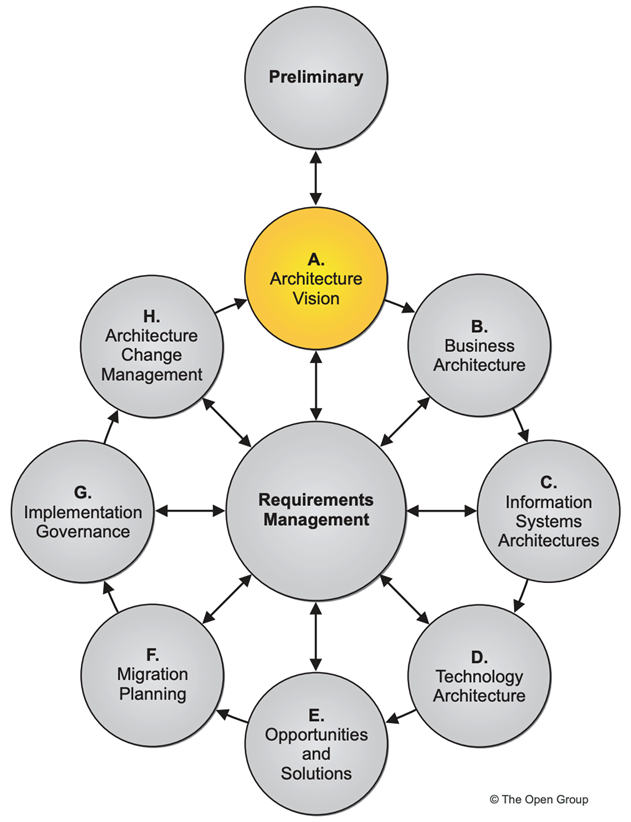

The TOGAF framework mentioned earlier is one of the most widely used. TOGAF incorporates the ADM (Architecture Development Method), which describes a step-by-step design process from analyzing business requirements to selecting and implementing technical solutions. The ADM is divided into several phases, such as creating an architectural vision, developing the business architecture, modeling the information and technology architectures, migration planning, and change management. One of the key features of TOGAF is its iterative approach, which allows the architecture to adapt to changing business environments. TOGAF also provides for active interaction with stakeholders and analysis of their requirements at early stages.

Fig. 4. Vision of the architecture according to TOGAF methodology [1]

Companies can customize the above frameworks to meet their own needs. For example, the Zachman Framework for Enterprise Architecture was used by VMware to train architects and develop the methodology behind the VMware Certified Design Expert certification. Cisco followed a similar path when it created the Cisco Certified Design Expert certification.

Regardless of the approach chosen, all methodologies boil down to the principle of “from business requirements to specific technical solutions.” Expert judgment is used primarily to analyze data and highlight key points. The design process is iterative, and in theory this means that changes can be made to the original requirements at later stages. In practice, this is avoided.

Fig. 5. Comparison and evaluation of ARIS and Zachman frameworks

If the project does not involve the creation of an entirely new system, but rather the modernization of an existing infrastructure (which is most often the case in practice), gap analysis [6, 7] is applied. Specialists first determine the current state of the infrastructure: they identify the technologies in use, processes, and performance characteristics. Then, they describe the desired state, taking into account new requirements, constraints, and assumptions. Next comes the gap identification phase – an analysis of the discrepancies between the system’s current capabilities and the target state. These gaps may include insufficient hardware capacity, lack of necessary monitoring tools, or failure to meet regulatory standards. Finally, a roadmap for changes is developed, encompassing hardware and software upgrades, process optimization, and the implementation of new solutions.
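
The gap identification step can be sketched as a comparison of two sets of measurable characteristics. The example below is deliberately simplified: it assumes that each characteristic is a number where higher is better, and the metric names are hypothetical. Real gap analysis also covers qualitative items such as missing monitoring tools or regulatory non-compliance.

```python
def find_gaps(current, target):
    """Return {metric: shortfall} for every target value the current state does not meet.

    Both arguments map a metric name to a numeric capacity. A metric that is
    absent from `current` is treated as having a capacity of zero.
    """
    gaps = {}
    for metric, required in target.items():
        available = current.get(metric, 0)
        if available < required:
            gaps[metric] = required - available
    return gaps


if __name__ == "__main__":
    # Hypothetical current and desired states of a cluster.
    current_state = {"cpu_cores": 128, "ram_gb": 1024}
    target_state = {"cpu_cores": 192, "ram_gb": 1024, "storage_tb": 50}
    for metric, shortfall in find_gaps(current_state, target_state).items():
        print(f"{metric}: short by {shortfall}")
```

Each reported shortfall then becomes a candidate entry in the roadmap for changes.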

In any framework, the design process for a virtualization cluster takes into account the requirements, constraints, and assumptions. Risks are also identified and addressed: technical, operational, financial, organizational, security risks, and so on. The entire process is iterative: decisions may influence one another, resulting in changes to the initial conditions. For system control, it is recommended to include a traceability matrix of requirements, constraints, and assumptions in the project documentation.
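
A traceability matrix can be kept as simple structured data that links each design decision to the requirements, constraints, and assumptions behind it. The following sketch shows one possible representation with two consistency checks. The identifiers, class names, and sample entries are hypothetical and are not prescribed by any of the frameworks above.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    item_id: str  # e.g. "R-01", "C-01", "A-01"
    kind: str     # "requirement", "constraint", or "assumption"
    text: str


@dataclass
class Decision:
    decision_id: str
    text: str
    traces_to: list = field(default_factory=list)  # item_ids that justify the decision


def untraced_items(items, decisions):
    """IDs of items that no design decision refers to."""
    covered = {item_id for d in decisions for item_id in d.traces_to}
    return [it.item_id for it in items if it.item_id not in covered]


def unjustified_decisions(items, decisions):
    """IDs of decisions that do not trace to any known item."""
    known = {it.item_id for it in items}
    return [d.decision_id for d in decisions
            if not any(item_id in known for item_id in d.traces_to)]


if __name__ == "__main__":
    items = [
        Item("R-01", "requirement", "Production workloads survive a host failure"),
        Item("C-01", "constraint", "Existing network vendor must be retained"),
        Item("A-01", "assumption", "User growth stays within 20% over two years"),
    ]
    decisions = [
        Decision("D-01", "Reserve one host of spare capacity per cluster", ["R-01", "A-01"]),
        Decision("D-02", "Use a separate cluster for Test & Dev", []),
    ]
    print("Untraced items:", untraced_items(items, decisions))
    print("Unjustified decisions:", unjustified_decisions(items, decisions))
```

Items that no decision addresses, and decisions that trace to nothing, are both worth reviewing before the design is approved.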

It should be noted that often not all client requests can be fully met [2, 8]. For example, different departments within a company may have mutually exclusive conditions. Alternatively, some requirements may exceed permissible budgetary, time, or technical limitations. In such cases, the architect must manage the client’s expectations and document any changes to the expected outcomes.

Let us consider several examples of how requirements can influence architecture. Suppose the project requires high fault tolerance for production applications, while the Test & Dev environment has no such requirement. In this case, a reasonable solution would be to deploy the test environment on separate clusters without reserving resources across multiple sites. Additionally, extra isolation between Test & Dev and production applications should be ensured. This approach reduces infrastructure costs.

Another example: one of the applications has additional security requirements due to the sensitive nature of the data being processed. In practice, this often means that all applications in the same virtualization cluster must meet similar standards. Otherwise, the company risks failing an audit.

In this context, two approaches to solving the problem are possible. The first option is to place all applications in a single cluster with the same level of security, which incurs additional security costs. The second option is separating the applications into distinct clusters, which increases costs for hardware, licenses, and the operation of several isolated environments. The choice depends on the criticality of the data, economic considerations, and long-term architectural requirements.
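
The economic side of this choice can be approximated with a rough cost comparison. The model below is a deliberately simplified assumption: it treats the security cost as a flat amount per application and the cost of an additional cluster as a single figure covering hardware, licenses, and operation. None of the parameters or numbers come from the sources cited in this article.

```python
def single_cluster_cost(n_apps, cluster_cost, security_cost_per_app):
    """Option 1: one cluster, every application hardened to the strict level."""
    return cluster_cost + n_apps * security_cost_per_app


def split_cluster_cost(n_sensitive, cluster_cost, security_cost_per_app,
                       extra_cluster_cost):
    """Option 2: a second cluster is added; only sensitive applications are hardened."""
    return cluster_cost + extra_cluster_cost + n_sensitive * security_cost_per_app


def cheaper_option(n_apps, n_sensitive, cluster_cost, security_cost_per_app,
                   extra_cluster_cost):
    """Return "single" or "split", whichever is cheaper under this simplified model."""
    single = single_cluster_cost(n_apps, cluster_cost, security_cost_per_app)
    split = split_cluster_cost(n_sensitive, cluster_cost, security_cost_per_app,
                               extra_cluster_cost)
    return "single" if single <= split else "split"


if __name__ == "__main__":
    # Hypothetical figures: 10 applications, 2 of them sensitive.
    print(cheaper_option(n_apps=10, n_sensitive=2, cluster_cost=100_000,
                         security_cost_per_app=5_000, extra_cluster_cost=30_000))
```

Cost is only one input. Data criticality and long-term architectural requirements may justify the more expensive option.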

Conclusion

The design of virtualization clusters requires a comprehensive approach, which includes the analysis of functional and non-functional requirements, taking into account constraints and assumptions, and risk management. In addition to the aforementioned aspects, successful project implementation requires integration with adjacent engineering systems, such as power supply and cooling systems. It is necessary to ensure the required power supply to the server rack and efficient heat dissipation. Furthermore, in the event of a power failure, it is important to provide autonomous operation on uninterruptible power supplies until backup generators are started, as well as scenarios for transferring the load to alternative sites.

Moreover, the architecture of virtualization clusters must take into account network security requirements, such as authentication and the management of certificates and passwords. This ensures reliable protection of information flows and access control. In the case of disaster-resilient, geographically distributed systems, special attention should be paid to telecommunications infrastructure, including bandwidth, redundancy levels, and fault tolerance. It is this integrated design approach that enables the creation of fault-tolerant and high-performance virtualization clusters that meet the needs of the business and regulatory requirements.
