1. Introduction to Network Management

In network management, to maintain good Quality of Service (QoS), system and network administrators must always be aware of the status of the networking devices, called agents, including their CPU load, memory, and storage usage. SNMP (Simple Network Management Protocol) is widely used for remote monitoring of network devices and hosts. Its main advantage is the simplicity of its design, and it works well when few messages are exchanged between manager and agent entities. Its main weakness is low security, which has been improving in recent versions.

On the other hand, CMIP was designed to compensate for the shortcomings of SNMP, and it can be used for larger networks. CMIP is designed and implemented using an object-oriented model [1; 2; 3; 4, p. 349-360]. The main advantage of CMIP is its ability to define techniques that cover manual control, security, and filtering of management information. Its main weakness is its resource occupation time, which is longer than that of SNMP [1; 2; 3; 4, p. 349-360].

2. Cloud Network Management Mobile Agent Model

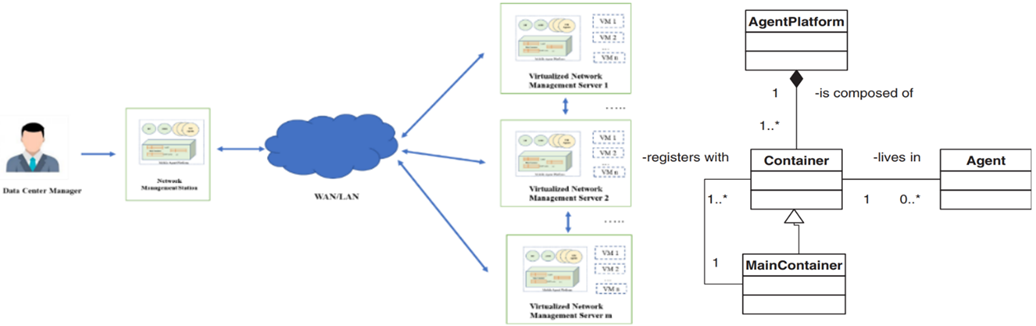

The Cloud Network Management Mobile Agent (CNMMA) model is based on Mobile Agent technology, the relationship between the network management station and the virtualized server manager, and the combination of a network management protocol (CMIP) with the ACL specification (Agent Communication Language, defined by FIPA for agent communication). The core components of the CNMMA model are shown in Figure 1 [9; 11, p. 25-36]:

- Mobile Agent Platform.

- Virtualized Network Management Server.

- The Network Management Station (NMS).

- Network Management Mobile Agent (NMMA).

Fig. 1. The CNMMA Model Architecture

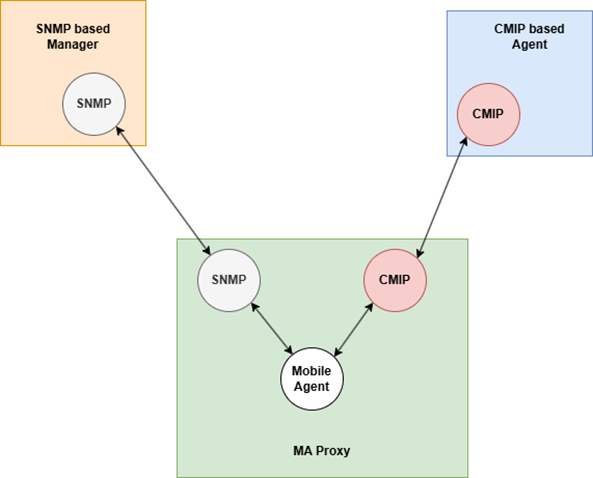

The proposed Mobile Agent (MA) proxy agent emulates CMIS services by mapping them to the corresponding SNMP messages. It allows a CMIP manager, which supports the CMIP network management protocol and the CMIS services, to manage Internet MIB-II objects [12].

The MA proxy emulates services such as scoping and filtering, processes CMIS operations, and translates SNMP traps into CMIS notifications. Figure 2 shows the context dataflow diagram of the CMIP/SNMP proxy agent.

Fig. 2. Mobile Agent proxy for network management

The proxy performs the following functions:

- Connection management: establishing and releasing connections with the CMIP manager.

- Data transfer between the CMIP manager and the Internet agent: CMIP indication and response protocol data units (PDUs) are exchanged with the CMIP manager, and SNMP request and response messages are exchanged with the Internet agent.

- Proxy service emulation: CMIS-to-SNMP mapping and SNMP-to-CMIS mapping.
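The service emulation described above can be sketched as a pair of lookup functions. This is a minimal illustration and not the proxy from [12]: the function names and the returned structures are hypothetical, and the mapping shown (M-GET to GetRequest, a scoped M-GET to GetNextRequest, M-SET to SetRequest, Trap to M-EVENT-REPORT) is an assumed simplification of how such a proxy might translate between the two protocols.

```python
# Hypothetical CMIS-to-SNMP translation table (illustrative, not from the paper).
CMIS_TO_SNMP = {
    "M-GET": "GetRequest",
    "M-SET": "SetRequest",
}


def translate_cmis_request(service, scoped=False):
    """Return the SNMP PDU type assumed to emulate a CMIS service."""
    if service == "M-GET" and scoped:
        # Scoping over a subtree is emulated here by walking with GetNextRequest.
        return "GetNextRequest"
    if service not in CMIS_TO_SNMP:
        raise ValueError(f"no SNMP equivalent assumed for {service}")
    return CMIS_TO_SNMP[service]


def translate_snmp_trap(trap_name):
    """Wrap an SNMP trap as a CMIS event notification (hypothetical structure)."""
    return {"service": "M-EVENT-REPORT", "event_type": trap_name}


if __name__ == "__main__":
    print(translate_cmis_request("M-GET"))
    print(translate_cmis_request("M-GET", scoped=True))
    print(translate_snmp_trap("linkDown"))
```

A real proxy would also have to keep per-request state (for example, which CMIP invoke identifier corresponds to which SNMP request), which this sketch leaves out.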

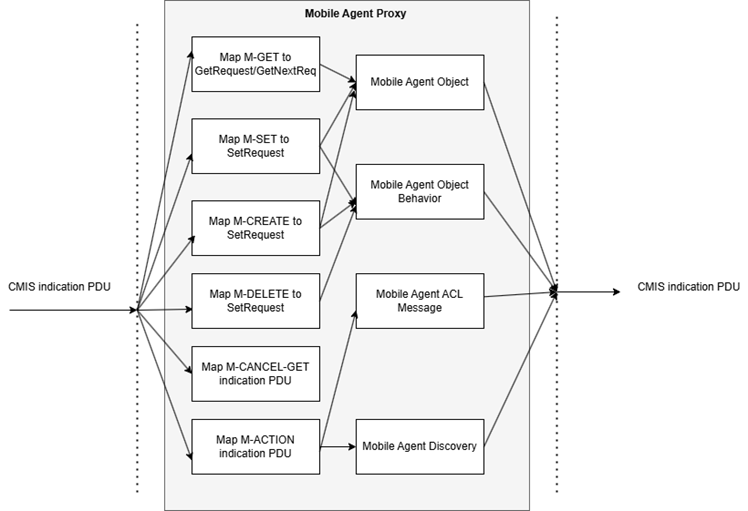

The MA proxy maintains a configuration file in the common data store to preserve information during message transfer. Figure 3 shows the data flow diagram for this service mapping [12].

Fig. 3. Service mapping CMIP and SNMP through Mobile Agent

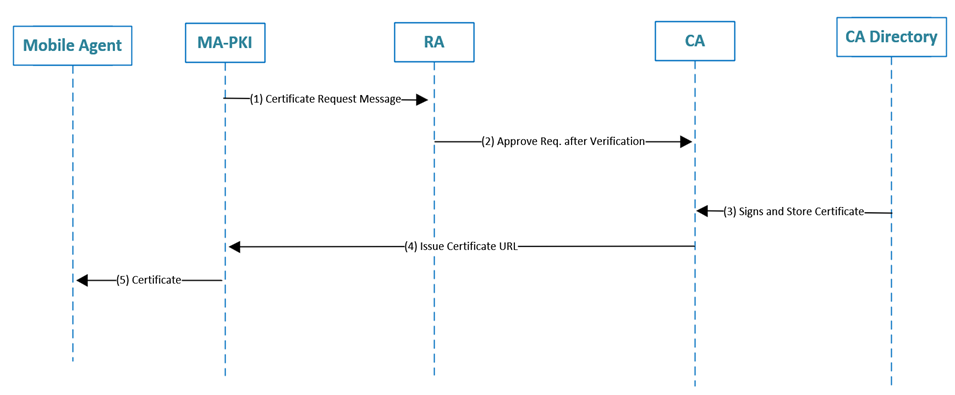

Performance and security with MA-PKI: MA-PKI (Mobile Agent - Public Key Infrastructure) is built on the Mobile-PKI platform and uses elliptic curve cryptography (ECC) to increase performance and reduce battery consumption. All cryptographic tasks for the Mobile Agent platform are handled by MA-PKI. The recommended system uses ECC instead of RSA to achieve the same level of security with much lower computational cost and smaller keys, as shown in Figure 4 [10].

Fig. 4. Diagram of CA processing flow in MA-PKI

3. Mobile Agent Distributed Intrusion Detection System Framework

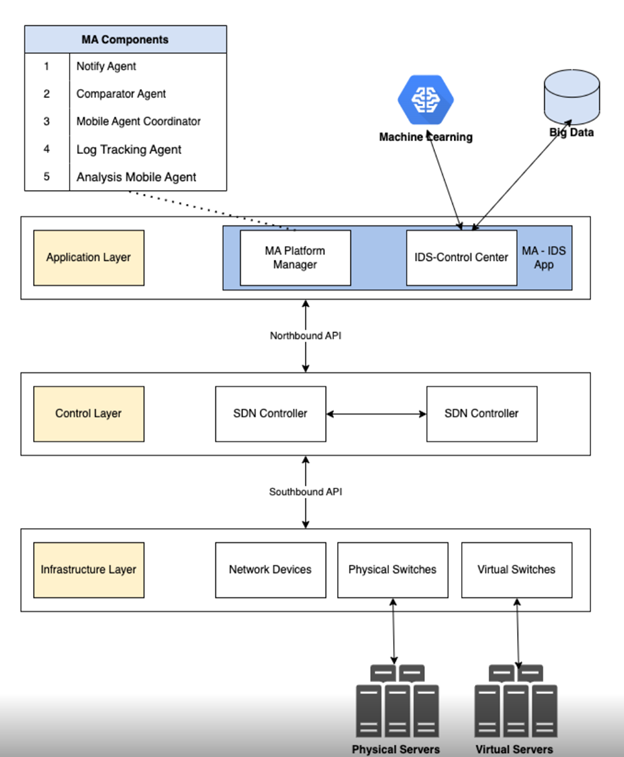

The CNMMA model is also developed as a model for an Intrusion Detection System for SDN networks, which promises a novel approach to intrusion detection compared with existing systems [6, p. 73-79; 7, p. 2581-2589].

Fig. 5. Mobile Agent Distributed Intrusion Detection System Framework (MA-DIDS) in SDN

The system architecture is built on the standard SDN architecture, which consists of 3 separate layers [8, 9]:

- Infrastructure layer: the physical and virtualization layer, including physical servers, virtual servers, and network devices such as physical and virtualized switches. This layer is the platform that executes requests from the upper layers.

- Control layer: the "brain" of the network. It contains the central SDN Controller, which communicates with the infrastructure layer through the Southbound API to configure and control network data flows. It also provides a Northbound API so that the application layer can make decisions and send requests down.

- Application layer: the highest layer, which contains the business logic and intelligent applications. In this architecture, this is where the MA-IDS system operates.

Detailed analysis of key components

3.1. Mobile Agent (MA) System:

- This is the core of the architecture, making the system flexible and proactive. Instead of a centralized, passive monitoring system, agents are small programs capable of moving themselves between network nodes to gather information and execute tasks.

- MA Platform Manager: An environment for managing the lifecycle of agents, including creating, deploying, coordinating, and terminating them.

3.2. Components of Mobile Agent (MA Components):

- Log Tracking Agent (LTA): The main task is to migrate to virtual servers (VMs) to collect system logs, information about network activities, and operating system events. It then reports this data to the IDS Control Center.

- Analysis Mobile Agent: This agent receives raw data from the LTA to perform preliminary analysis, filter out noise, and look for early signs of anomalies.

- Comparator Agent: Compares suspicious activity that has been detected with a database of known intrusion samples. If there is a match, it will confirm this is a known attack.

- Mobile Agent Coordinator: The "commander" of the other agents. It coordinates their operation, dispatches agents to the right locations, and ensures that information is processed in the correct order.

- Notify Agent: When an attack is confirmed (by the Comparator Agent or by the Machine Learning model), this agent will be activated to send an immediate alert to the system administrator through the MA-IDS application.
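The division of labour between these agents can be sketched as a small pipeline. This is an illustrative, in-process sketch only: agent migration, the MA platform, and the Machine Learning path are omitted, and the keyword list, the signature format (plain substrings), and all function names are hypothetical rather than taken from the MA-DIDS implementation.

```python
from dataclasses import dataclass

# Hypothetical keywords the Analysis agent treats as early signs of anomalies.
SUSPICIOUS_KEYWORDS = ("failed", "denied", "flood")


@dataclass(frozen=True)
class LogEvent:
    vm: str
    message: str


def log_tracking_agent(vm, raw_lines):
    """LTA: collect raw log lines from one VM as normalized events."""
    return [LogEvent(vm, line.lower()) for line in raw_lines]


def analysis_agent(events):
    """Analysis agent: drop noise, keep events that look suspicious."""
    return [e for e in events
            if any(k in e.message for k in SUSPICIOUS_KEYWORDS)]


def comparator_agent(events, signatures):
    """Comparator agent: match suspicious events against known signatures."""
    return [(e, name) for e in events
            for name, pattern in signatures.items() if pattern in e.message]


def notify_agent(matches):
    """Notify agent: turn confirmed matches into alert strings."""
    return [f"ALERT {e.vm}: {name}" for e, name in matches]


def coordinator(vm_logs, signatures):
    """Coordinator: run the agents in order for every VM."""
    alerts = []
    for vm, lines in vm_logs.items():
        events = log_tracking_agent(vm, lines)
        suspicious = analysis_agent(events)
        alerts.extend(notify_agent(comparator_agent(suspicious, signatures)))
    return alerts


if __name__ == "__main__":
    logs = {"vm1": ["Failed password for root", "cron job finished"]}
    print(coordinator(logs, {"ssh-brute-force": "failed password"}))
```

Keeping each agent as a separate function mirrors the separation of responsibilities in the list above; in a real deployment each step would run as a mobile agent on the node where the data lives.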

3.3. IDS Control Center:

- This is the nerve center of the entire security system, where information converges and decisions are made.

- Function: Receives all data from the MA Platform Manager (reported by agents) and the analysis results from the Machine Learning module.

- MA-IDS App: An interface application for administrators, displaying alerts, system status, and allowing them to interact and investigate problems.

- Status management: The IDS Control Center is responsible for updating and maintaining the status of each virtual machine in the network.

3.4. Database & Big Data

The system needs a large data warehouse for storage and analysis, including multiple databases with distinct functions:

- Intrusion pattern database: This is a database that contains "signatures" or patterns of known attacks. The Notify Agent and Comparator Agent use this data to compare and identify familiar threats.

- Event Database: All system logs and events reported by the Log Tracking Agent (LTA) are stored here as Big Data. This is the input source for the Machine Learning block.

- Virtual Machine Status Database: Updated by the IDS Control Center, this database tracks the status of each virtual machine, which can be in one of three states: normal, compromised, or migrated.
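The three virtual machine states can be modelled with an enumeration and a small in-memory store. This is only a sketch of the idea: the class names are hypothetical, a dictionary stands in for the actual database, and treating an unknown VM as "normal" is an assumption made for the example.

```python
from enum import Enum


class VMStatus(Enum):
    NORMAL = "normal"
    COMPROMISED = "compromised"
    MIGRATED = "migrated"


class VMStatusStore:
    """In-memory stand-in for the Virtual Machine Status Database."""

    def __init__(self):
        self._status = {}

    def update(self, vm_id, status):
        """Record a new status; only the three defined states are accepted."""
        if not isinstance(status, VMStatus):
            raise TypeError("status must be a VMStatus member")
        self._status[vm_id] = status

    def get(self, vm_id):
        """Return the stored status; unknown VMs default to NORMAL (assumption)."""
        return self._status.get(vm_id, VMStatus.NORMAL)


if __name__ == "__main__":
    store = VMStatusStore()
    store.update("vm1", VMStatus.COMPROMISED)
    print(store.get("vm1").value, store.get("vm2").value)
```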

3.5. Machine Learning & Data Mining:

- This module provides intelligence to detect new, previously unknown threats (zero-day attacks) based on unusual behaviors.

- How it works: The machine learning model is trained on a large dataset that includes previously detected attacks, system logs, and data collected by the LTA. From this data, the model "learns" the "normal" behavior of the network. Any activity that deviates from this normal state is considered suspicious.

- Model training: Data scientists use publicly available datasets to train models. One of the most common datasets for anomaly-based intrusion detection is NSL-KDD, an improved version of the KDD99 dataset. It includes typical attack types such as: DoS (Denial of Service); Probe: probing attacks such as port scanning; U2R (User to Root): an attack that escalates privileges from a normal user to an administrator; R2L (Remote to Local): a remote access attack on a local machine.
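The "learn normal, flag deviations" idea can be illustrated with a deliberately simple statistical detector. This is not the machine learning model used in MA-DIDS, which the text does not specify; a z-score test on a single numeric feature is swapped in here purely for illustration, and the feature (connections per second), the threshold of 3 standard deviations, and the function names are all assumptions.

```python
from statistics import mean, stdev


def fit_baseline(normal_values):
    """Learn 'normal' behavior as the mean and sample standard deviation."""
    return mean(normal_values), stdev(normal_values)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A constant baseline: anything different from it counts as a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical connections-per-second samples observed during normal operation.
    baseline = fit_baseline([100, 102, 98, 101, 99])
    print(is_anomalous(101, baseline))  # within the normal range
    print(is_anomalous(500, baseline))  # far outside the normal range
```

A model trained on NSL-KDD would use many features and a proper classifier, but the decision rule has the same shape: a learned description of normal traffic and a distance from it.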

4. Estimating the Network Cost of the Client/Server Model and the CNMMA Model

Network management costs for SNMP-based centralized Client/Server (C/S) model

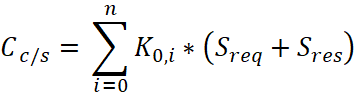

For a network management model based on the Client/Server model, the cost of probing n network devices is:

$$C_{C/S} = \sum_{i=1}^{n} K_{0,i}\,(S_{req} + S_{res}) \tag{1}$$

In which:

- $K_{0,i}$: Cost factor of the link from the manager (position 0) to the ith device.

- $S_{req}$: SNMP request packet size.

- $S_{res}$: SNMP response packet size.

For each probe, $S_{req} + S_{res}$ is the amount of data flowing through the link. If p is the number of network probes performed over a period of time, such as an hour, then the network management cost for that interval is:

$$C_{C/S}^{(p)} = p \sum_{i=1}^{n} K_{0,i}\,(S_{req} + S_{res}) \tag{2}$$
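As a worked illustration of the Client/Server cost model, the following Python sketch sums, for every managed device, the link cost factor multiplied by the request-plus-response size, and then multiplies the result by the number of polls. The function name and the three-device topology are hypothetical; the 83-byte request and 84-byte response sizes are the values measured in this paper.

```python
def client_server_cost(link_factors, s_req, s_res, polls=1):
    """Cost in bytes of polling every device `polls` times.

    link_factors[i] is the cost factor of the link from the manager
    to device i+1; s_req and s_res are packet sizes in bytes.
    """
    per_poll = sum(k * (s_req + s_res) for k in link_factors)
    return polls * per_poll


if __name__ == "__main__":
    # Hypothetical topology: two devices in the manager's domain (factor 1)
    # and one device in a different domain (factor 5).
    factors = [1, 1, 5]
    print(client_server_cost(factors, 83, 84))            # one poll
    print(client_server_cost(factors, 83, 84, polls=10))  # ten polls
```

With these inputs a single poll costs (1 + 1 + 5) × 167 = 1169 bytes, and the cost grows linearly with the number of polls.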

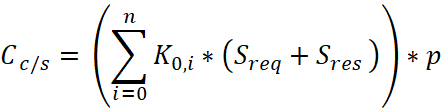

Network management costs for mobile agent management

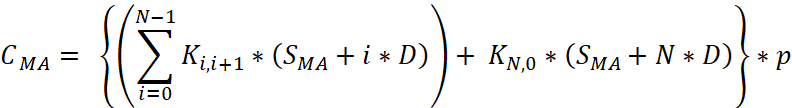

For the network management model based on Mobile Agent, the cost of a single agent migration over a network consisting of N+1 nodes (with node 0 acting as the central management node) is:

$$C_{MA} = K_{0,1} S_{MA} + K_{1,2}(S_{MA} + D) + K_{2,3}(S_{MA} + 2D) + \dots + K_{N-1,N}\bigl(S_{MA} + (N-1)D\bigr) + K_{N,0}(S_{MA} + ND) \tag{3}$$

In which:

- $K_{i,j}$: Cost factor of the link between i and j.

- $S_{MA}$: The size of the mobile agent.

- $D$: The size of the information collected by the Mobile Agent at each node.

If p is the number of polls taken during a period of time to manage the network, then the cost of administration for that period is:

$$C_{MA}^{(p)} = p \cdot C_{MA} \tag{4}$$
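The itinerary cost of the mobile agent can be sketched in the same way: on hop i the agent carries its own code plus the data gathered at the i nodes already visited, and the last hop returns it to the manager. The function name, the two-device itinerary, and the 1000-byte agent size below are hypothetical; the 50 bytes collected per node is the value used in this paper.

```python
def mobile_agent_cost(hop_factors, s_ma, d, polls=1):
    """Cost in bytes of `polls` round trips of one mobile agent.

    hop_factors[i] is the cost factor of the i-th hop of the itinerary
    0 -> 1 -> ... -> N -> 0, so it has N + 1 entries for N devices.
    On hop i the agent carries s_ma bytes of code plus i * d bytes of data.
    """
    per_trip = sum(k * (s_ma + i * d) for i, k in enumerate(hop_factors))
    return polls * per_trip


if __name__ == "__main__":
    # Hypothetical itinerary over two devices: 0 -> 1 -> 2 -> 0,
    # where the return hop crosses a domain boundary (factor 3).
    hops = [1, 1, 3]
    print(mobile_agent_cost(hops, s_ma=1000, d=50))
    print(mobile_agent_cost(hops, s_ma=1000, d=50, polls=2))
```

For this itinerary one trip costs 1×1000 + 1×1050 + 3×1100 = 5350 bytes, which shows why the agent size dominates when little data is collected per node.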

Equations 1 and 2 show that the management cost in the Client/Server network management model is directly proportional to the number of requests and responses exchanged with a managed device, that is, the number of times the device's MIB is accessed to retrieve management information. Equations 3 and 4 show that in the MA-based model the cost is directly proportional to the size of the mobile agent and to the amount of information the agent collects.

Network management costs for the CNMMA model

Total cost:

$$C_{CNMMA} = C_{CNMMAD} + C_{CNMMAP} \tag{5}$$

In which:

- $C_{CNMMAD}$: Cost of network discovery and deployment of managers.

- $C_{CNMMAP}$: Exploration costs to check the network status at the highest level.

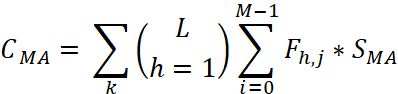

Initial deployment costs from the highest level of management (considered the starting point for network exploration):

(6)

In which:

- SMA: The size of the mobile agent manager.

- L: Number of primary managers in the network.

- M: The number of sub-managers in the subdomains of the hth primary manager.

- Fh,j: The total cost factor of the link from primary manager h to sub-manager j.

Exploration costs:

(7)

In which:

- MA Request: The size of the message sent from the child manager to the parent manager.

- CQ: Flat model cost for the Qth domain.

Flat model cost for the Qth domain:

(8)

In which:

- MDAs: Size of the mobile agent.

- RQ: Number of nodes managed in domain Q.

- KQ: The link cost factor of domain Q.

If the network is probed p times over a period of time:

(9)

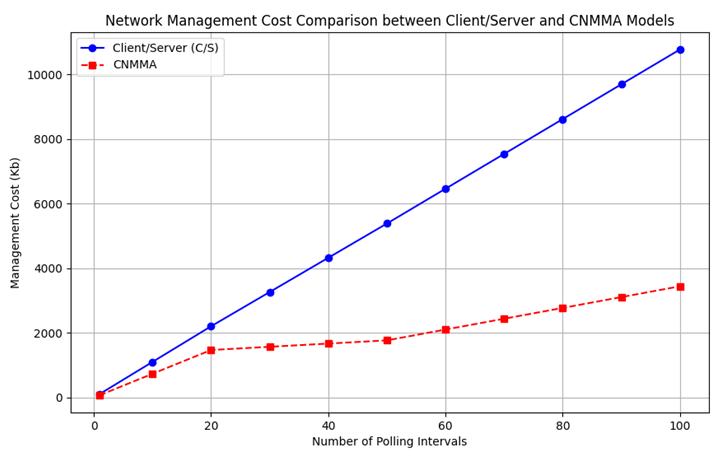

Comparing the cost effectiveness of the Client/Server and CNMMA models

Table 1

Input parameter setup table to calculate network management costs

Parameter | Meaning | Sampling value | Notes |

N | Number of network devices | 15 (button) | Suppose the central management is Node 3 and there are 5 nodes in the same domain K0.i =1), with 10 nodes in the same domain K0.i =5) |

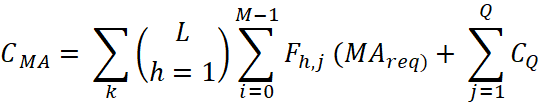

Request | SNMP Request Packet Size | 83 bytes | Getting the packet value via wireshark |

Response | SNMP Response Packet Size | 84 bytes | Getting the packet value via wireshark |

MDAs | Portable Agent Dimensions | 3.2 KB (≈ 4014 bytes) | Get the agent size in the compile file |

SMA | Dimensions of the mobile agent manager | 3.92 KB |

|

MA Request | The size of the notification sent from the child manager to the parent manager | 583 bytes |

|

D | Data collected from each node (MA) | 50 bytes |

|

K0i (C/S) | Associate Cost Factor (C/S) | 1 (same domain), 5 (different domain) |

|

Ki,j (MA) | Associate Cost Factor (MA) | 1 (same domain), 3 (different domain) |

|

p | Number of polls in 1 hour | 10, 20, 30, 40, 50, 60, 70, 80, 90, 100 (times) |

|

The sizes of the request (Sreq) and the response (Sres) were measured with the Wireshark packet analysis tool, as shown in Figure 6.

Fig. 6. Packet trace in Wireshark

Table 2 lists the network costs calculated from the input parameters for the two network models.

Table 2

Calculated network costs for the Client/Server and Mobile Agent models

| Number of Polls (p) | Client/Server (KB) | Mobile Agent (KB) |
|---|---|---|
| 1 | 110.22 | 73.55 |
| 10 | 1102.21 | 735.55 |
| 20 | 2204.41 | 1471.11 |
| 30 | 3263.55 | 1570.62 |
| 40 | 4322.7 | 1670.12 |
| 50 | 5381.84 | 1769.63 |
| 60 | 6458.21 | 2103.96 |
| 70 | 7534.57 | 2438.28 |
| 80 | 8610.94 | 2772.61 |
| 90 | 9687.3 | 3106.93 |
| 100 | 10763.67 | 3441.26 |

Based on Table 2, Figure 7 compares the network management cost of the Client/Server and Mobile Agent models.

Fig. 7. Cost Comparison between Client/Server and Mobile Agent

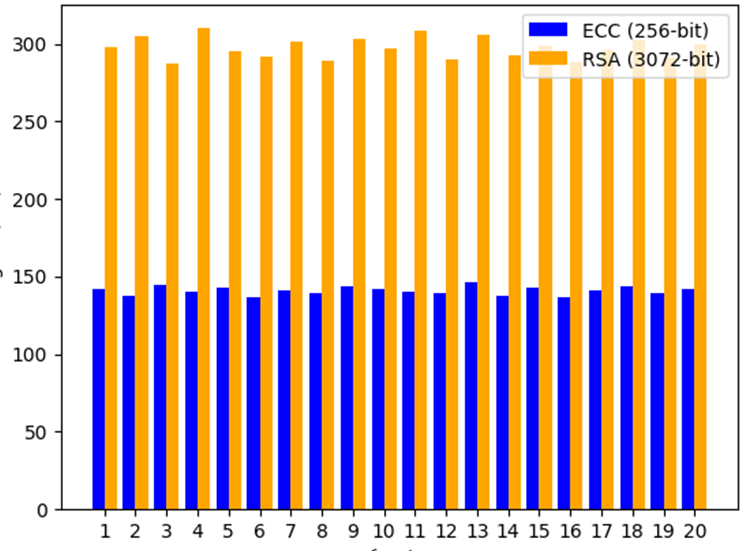

5. Comparing the Size and Effectiveness of the ECC and RSA Algorithms in the CNMMA Model

Timing data (in milliseconds) was collected over 20 runs for each of the ECC and RSA algorithm settings, with 1000 messages sent per run in the CNMMA model.

Table 3

Time (ms) to send the messages in each run using the ECC and RSA algorithms

| Run | ECC (256-bit) | RSA (3072-bit) |
|---|---|---|
| 1 | 142 | 298 |
| 2 | 138 | 305 |
| 3 | 145 | 287 |
| 4 | 140 | 310 |
| 5 | 143 | 295 |
| 6 | 137 | 292 |
| 7 | 141 | 301 |
| 8 | 139 | 289 |
| 9 | 144 | 303 |
| 10 | 142 | 297 |
| 11 | 140 | 308 |
| 12 | 139 | 290 |
| 13 | 146 | 306 |
| 14 | 138 | 293 |
| 15 | 143 | 299 |
| 16 | 137 | 288 |
| 17 | 141 | 296 |
| 18 | 144 | 302 |
| 19 | 139 | 291 |
| 20 | 142 | 300 |

Notes: The time may fluctuate slightly due to CPU, network, or JVM loads.

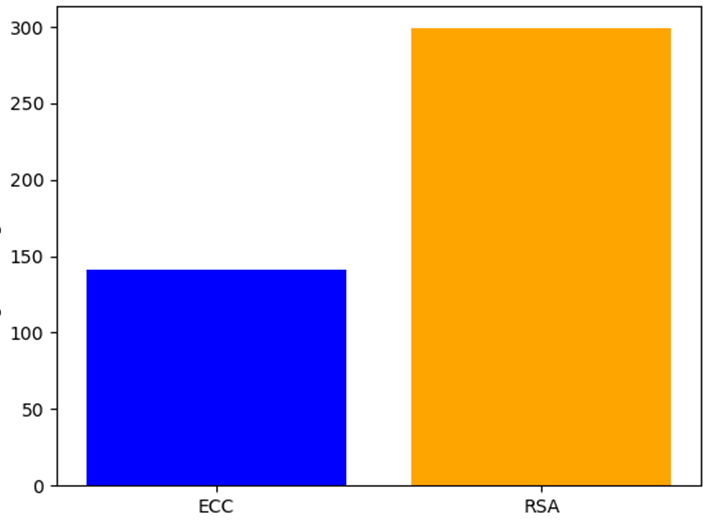

Table 4

Summary statistics of the run times using the ECC and RSA algorithms

| Algorithm | Average (ms) | Standard Deviation (ms) | Relative speed | Conclusion |
|---|---|---|---|---|
| ECC (256-bit) | 140.95 | 2.73 | 2.12 times faster | Optimized for Mobile Agent |
| RSA (3072-bit) | 298.55 | 6.78 | Slower | Suitable for high security |

Figure 8 compares the performance of Mobile Agent platform transmission encryption using the ECC and RSA algorithms.

Fig. 8. Chart comparing ECC and RSA performance in a Mobile Agent environment

Figure 9 compares the average times of ECC and RSA.

Fig. 9. Average time of ECC and RSA

Performance Comparison:

- Ratio of the average times:

$$\frac{\bar{t}_{RSA}}{\bar{t}_{ECC}} = \frac{298.55}{140.95} \approx 2.12$$

- ECC was about 2.12 times faster than RSA in testing.

- Across the 20 test runs, ECC showed better speed and stability than RSA. RSA is suitable only for applications that require strong encryption with little communication, whereas ECC is suitable for distributed environments where performance and bandwidth are important.

6. Comparative Evaluation of Baseline IDS and MA-DIDS

Test Environment

The Baseline IDS and MA-DIDS test environments are configured as follows:

- CPU: 2 CPU Intel Xeon 3963 v3.

- RAM: 128 GB.

- JVM: Java version 21.0.9.

- OS: Windows Server 2019.

- Network LAN: 1 Gbps.

- Test attack techniques: port scanning, SYN flood/small DDoS, brute-force SSH, ARP scanning/ARP spoofing.

Experimental sets

Experiment 1: Evaluate detection accuracy (True Positive Rate & False Positive Rate).

- Objective: Determine the detection accuracy and the false alarm rate of the two systems, Baseline IDS and MA-DIDS.

- Setup: Each system is run 30 times, 5 seconds per run. Attack and normal data are randomly mixed to ensure a 95% confidence level. Collected indicators: True Positive Rate (TPR), False Positive Rate (FPR).

Table 5

Intrusion detection accuracy of Baseline IDS and MA-DIDS

| Metric | Baseline | MA-DIDS | Improvement |
|---|---|---|---|
| True Positive Rate | 99.2% | 99.3% | 0.2% |
| False Positive Rate | 41.7% | 15.6% | -62.7% |

- Analysis: MA-DIDS kept almost the same TPR but sharply reduced the FPR, by 62.7% in relative terms (from 41.7% to 15.6%). This indicates that the MA-DIDS system filters noise better and limits false alarms.

- Conclusion: MA-DIDS is highly effective in terms of accuracy, especially in reducing false alarms compared to Baseline.

Experiment 2: Assessment of Classification Reliability (Precision & F1-Score)

- Objective: Compare how well the two models classify correctly and how they balance precision and recall.

- Setup: Use the same test dataset with 30 samples. Measured indicators: Precision, F1-Score (averaged over 30 runs).

Table 6

Classification reliability of Baseline IDS and MA-DIDS

| Metric | Baseline | MA-DIDS | Improvement |
|---|---|---|---|
| Precision | 85.4% | 95.6% | 11.8% |
| F1-Score | 90.7% | 97.1% | 7.0% |

- Analysis: MA-DIDS significantly improved Precision (+11.8%), which means fewer false alarms. The F1-Score increased by 7%, reflecting a better balance between precision and recall.

- Conclusion: The MA-DIDS system is more reliable in properly classifying attacks and reducing warning errors.
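For reference, the four indicators used in Experiments 1 and 2 can all be derived from the counts of a confusion matrix. The sketch below uses hypothetical counts chosen for easy arithmetic, not the experimental data, and the function name is illustrative.

```python
def detection_metrics(tp, fp, tn, fn):
    """Compute TPR (recall), FPR, precision and F1 from confusion-matrix counts."""
    if tp + fn == 0 or fp + tn == 0 or tp + fp == 0:
        raise ValueError("counts must include attacks, normal traffic and alerts")
    tpr = tp / (tp + fn)        # share of attacks that were detected
    fpr = fp / (fp + tn)        # share of normal traffic flagged as attack
    precision = tp / (tp + fp)  # share of alerts that were real attacks
    if precision + tpr == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * tpr / (precision + tpr)
    return {"tpr": tpr, "fpr": fpr, "precision": precision, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts: 100 attack samples and 100 normal samples.
    print(detection_metrics(tp=90, fp=10, tn=90, fn=10))
```

Because precision depends on the number of false positives, a lower FPR raises precision and, through it, the F1-Score, even when the TPR barely changes.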

Experiment 3: Evaluate System Performance (Time, CPU, Memory, Thread)

- Objective: Compare the resource consumption and response speed of the two systems.

- Setup: Same hardware configuration, with each test run for 5 seconds × 30 times. Measured metrics: detection time, CPU usage, memory usage, and thread count.

Table 7

Performance of Baseline IDS and MA-DIDS

| Metric | Baseline | MA-DIDS | Change |
|---|---|---|---|
| Detection Time | 16.6 ms | 40.8 ms | +146.3% |
| Memory Usage | 28.0% | 38.5% | +37.4% |
| CPU Usage | 46.9% | 92.5% | +97.1% |
| Thread Count | 5.5 | 27.9 | +411.6% |

- Analysis: MA-DIDS consumes more resources because of the distributed mobile agent mechanism and the overhead of the agent platform. Detection is slower because of the multiple cooperative processing steps between agents.

- Conclusion: MA-DIDS suits environments with ample resources that require high accuracy and deep integration, while Baseline IDS remains the more responsive option for resource-limited systems.

Conclusions

This paper presents the CNMMA model for cloud network management at an abstract level, evaluated through lab experiments without a full implementation. The experiments demonstrate improved performance and lower network cost compared with the traditional Client/Server model, and compare the effectiveness of the Baseline IDS with the proposed MA-DIDS. In future research, we aim to provide a more detailed design for each component of the model and develop its full functionality. We will also analyze the monitoring of traffic flows within the cloud and conduct pilot testing in a production environment.
