Tokenized economies are structurally distinct from conventional financial systems, as their value formation is driven by an interplay of behavioral dynamics, protocol architecture, and economic signals. Tokens may encode usage rights, governance authority, staking rewards, collateral functions, or participation in protocol revenue. Their market prices react to fluctuations in network activity, liquidity provisioning mechanisms, distribution structures, and broader macroeconomic cycles. Empirical evidence indicates that crypto assets display pronounced non-stationarity, regime dependence, and heavy-tailed return distributions, which limit the effectiveness of classical time series models. Consequently, valuation frameworks must process high-dimensional data while remaining responsive to structural breaks and evolving market conditions [1].

This study develops a conceptual and computational foundation for dynamic valuation in tokenized markets. It consolidates theoretical perspectives on how token value arises, evaluates leading forecasting paradigms, formalizes multisource valuation relationships, and investigates empirical behavior across different market states. A central element of the work is an artificial intelligence architecture designed by the author. The model integrates on-chain indicators, network metrics, decentralized finance liquidity signals, and off-chain macroeconomic flows. It adaptively recalibrates input weights through an attention-based fusion mechanism and employs self-supervised reconstruction objectives to enhance robustness under noisy or partially observed data. This design reflects empirical findings that predictive accuracy improves when heterogeneous modalities are incorporated and models adjust to horizon-specific drivers [2, p. 1-59].

The analytical trajectory moves from theory to implementation. It first examines conceptual foundations of valuation, then compares predictive model families, derives dynamic multisource formulations, and presents the architecture grounded in the author’s proprietary framework. Subsequent sections explore how token dynamics shift across market regimes and discuss implications for valuation methodology and the design of forecasting systems.

Valuation in tokenized economies requires a theoretical structure capable of addressing both functional heterogeneity and the evolving nature of blockchain systems. Unlike traditional financial instruments, tokens frequently combine utility features, governance participation, access privileges, collateral roles, and revenue-sharing mechanisms within a single digital asset. Their value evolves through time-dependent interactions among users, validators, liquidity providers, smart contracts, and external financial environments. Theoretical research suggests that blockchain assets operate under a combination of endogenous mechanisms and exogenous influences, many of which adjust in response to protocol-level decisions and shifts in participant incentives.

One of the earliest valuation frameworks applied to tokens was derived from the quantity theory of money. In its adapted form, token price is proportional to ecosystem transaction value and inversely proportional to both velocity and circulating supply. The simplified dynamic expression is:

$$P(t) = \frac{T(t)}{V(t)\,S(t)}, \tag{1}$$

Where:

T(t) – total economic value transacted;

V(t) – velocity;

S(t) – circulating supply.

Although informative at the conceptual level, this model does not capture liquidity incentives, staking mechanics, governance effects, or cross-chain movement of capital. Velocity itself is not static. It fluctuates as tokens enter and exit staking pools, as liquidity providers seek yield opportunities, and as speculative demand rises or falls. This makes velocity both a driver and a reflection of dynamic token utility.
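As a minimal sketch of the quantity-theory relationship described above, the following Python snippet computes an implied price from transaction value, velocity, and circulating supply. The function name `token_price` and all numbers are hypothetical and serve only to illustrate how a drop in velocity (for example, when tokens move into staking pools) raises the implied price if transaction value and supply are held fixed.

```python
def token_price(transaction_value: float, velocity: float, supply: float) -> float:
    """Implied price under the adapted quantity theory: P = T / (V * S)."""
    if velocity <= 0 or supply <= 0:
        raise ValueError("velocity and supply must be positive")
    return transaction_value / (velocity * supply)


# Hypothetical inputs, for illustration only.
base_price = token_price(transaction_value=1_000_000.0, velocity=10.0, supply=50_000.0)

# Same transaction value and supply, but velocity halves as tokens are locked up.
low_velocity_price = token_price(transaction_value=1_000_000.0, velocity=5.0, supply=50_000.0)

print(base_price, low_velocity_price)
```

Because velocity sits in the denominator, any mechanism that slows token turnover acts on price in the same direction as a supply reduction, which is why a static velocity assumption can be misleading.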

Utility-based approaches view token value as a function of protocol activity. If a token is required to access computation, execute smart contract operations, or participate in network governance, then valuation reflects the demand for these actions relative to supply. A simplified representation appears as:

$$P(t) = \frac{D(t)}{S(t)}, \tag{2}$$

where D(t) reflects protocol usage, including active addresses, smart contract calls, and application-layer transactions. Research on network goods indicates that utility adoption is nonlinear, with critical mass thresholds that can generate sudden acceleration in value. For example, a sharp rise in active addresses or contract interactions can precede price appreciation, often with measurable lead time.

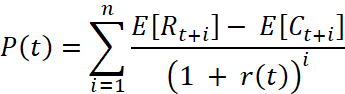

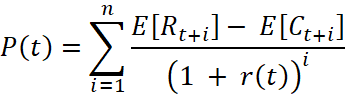

Flow-based valuation frameworks adapt discounted cash flow models to blockchain environments. When protocols distribute transaction fees or revenue to token holders, expected value becomes the present value of future inflows:

$$P(t) = \sum_{i} \frac{R_i - C_i}{\left(1 + r(t)\right)^{i}}, \tag{3}$$

Where:

R – revenue;

C – cost;

i – future interval;

r(t) – dynamic discount rate.

Such models assume that revenue structures remain relatively stable. In practice this is rarely the case. Governance decisions can alter reward schedules, modify emission rates, or redirect protocol revenue. The discount rate r(t) is also dynamic, influenced by macroeconomic uncertainty, network security conditions, and perceived regulatory risk.
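The sensitivity of flow-based valuation to the discount rate can be illustrated with a short sketch. It assumes a finite list of future intervals and a single discount rate applied to all of them. The function name `present_value` and the figures are hypothetical.

```python
def present_value(revenues, costs, discount_rate):
    """Discount net protocol flows (revenue minus cost) over future intervals i = 1, 2, ..."""
    if len(revenues) != len(costs):
        raise ValueError("revenues and costs must cover the same intervals")
    if discount_rate <= -1.0:
        raise ValueError("discount_rate must be greater than -1")
    return sum(
        (revenue - cost) / (1.0 + discount_rate) ** i
        for i, (revenue, cost) in enumerate(zip(revenues, costs), start=1)
    )


# Hypothetical net flows for two future intervals.
revenues = [110.0, 121.0]
costs = [0.0, 0.0]

pv_calm = present_value(revenues, costs, discount_rate=0.10)
# A higher discount rate, e.g. under regulatory uncertainty, lowers the valuation.
pv_stressed = present_value(revenues, costs, discount_rate=0.20)

print(round(pv_calm, 2), round(pv_stressed, 2))
```

In a dynamic setting both the flow lists and the discount rate would be re-estimated whenever governance decisions or market conditions change, so the result is a snapshot rather than a fixed fundamental value.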

Staking yield introduces another dimension. In proof-of-stake systems, the act of staking reduces liquid supply while generating returns for validators or delegators. The expected yield can be approximated as:

$$Y(t) = \frac{I(t)}{S(t)} - \delta(t), \tag{4}$$

where I(t) represents reward issuance and δ(t) captures opportunity cost and potential slashing. Staking has both a direct yield effect and an indirect scarcity effect, since higher staking participation reduces circulating supply and can temper volatility under certain conditions.

These frameworks reveal important relationships, yet each one captures only a portion of token value. Tokenized economies function as complex adaptive systems with feedback loops. Liquidity moves across chains in pursuit of yield. Validator participation changes as gas dynamics and rewards shift. Whale activity can alter supply concentration. Governance decisions restructure incentives. Macroeconomic stress changes risk premiums. Empirical research confirms that crypto assets exhibit regime switching, volatility clustering, and nonlinear contagion effects. Therefore, valuation must account for interactions among multiple signals rather than rely on a single dominant driver.

Forecasting token value requires models that can handle non-stationary time series, heterogeneous data structures, and nonlinear market behavior. Several model families are widely used, and each exhibits strengths and weaknesses when applied to tokenized economies.

Classical time series models such as ARIMA and GARCH treat price and volatility as autoregressive processes. These frameworks are efficient in stable environments but perform poorly when the underlying structure undergoes sudden shifts. Crypto markets are characterized by abrupt liquidity shocks, protocol upgrades, governance changes, and waves of social sentiment. Fixed-parameter models cannot adapt quickly enough to new regimes.

Tree-based ensemble models, including XGBoost, extend predictive capacity by learning nonlinear relationships across a wide range of inputs. Research shows that these models often outperform linear methods when incorporating on-chain signals, especially during periods of moderate volatility. Their main limitation is the absence of temporal memory. Although they extract nonlinear patterns effectively, they do not model temporal dependencies without explicit feature engineering.
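One common form of the explicit feature engineering mentioned above is a table of lagged inputs, which gives a memoryless model access to recent history. The sketch below is illustrative only: `make_lag_features` and the sample series are hypothetical and not taken from the study.

```python
def make_lag_features(series, lags):
    """Build (rows, targets) where each row holds series[t - lag] for every lag
    and the matching target is series[t]."""
    if not lags or min(lags) < 1:
        raise ValueError("lags must be a non-empty list of positive integers")
    max_lag = max(lags)
    rows, targets = [], []
    for t in range(max_lag, len(series)):
        rows.append([series[t - lag] for lag in lags])
        targets.append(series[t])
    return rows, targets


# Hypothetical daily active-address counts.
active_addresses = [100, 120, 90, 150, 170, 160]
X, y = make_lag_features(active_addresses, lags=[1, 2, 3])

for features, target in zip(X, y):
    print(features, "->", target)
```

Each row can then be passed to any tabular learner. The choice of lags is itself a modeling decision, which is part of the limitation noted above.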

Recurrent neural networks, especially LSTM and GRU architectures, were originally introduced to capture sequential dependencies in time series. In crypto markets they often outperform both linear and tree-based models in medium-horizon predictions. However, recurrent networks face limitations in long-horizon modeling due to vanishing context and sequential processing bottlenecks. They also struggle when required to ingest tens or hundreds of simultaneous financial signals.

Attention-based models, particularly transformer architectures, address many of these constraints. They evaluate all time steps in parallel and assign weighted importance to different positions through attention mechanisms. This makes them well suited for markets where interactions between distant events influence price. Studies focusing on cryptocurrency forecasting confirm the advantage of attention mechanisms for integrating heterogeneous signals. Despite this, off-the-shelf transformer models typically rely on limited inputs and therefore fail to capture the richer structure of tokenized systems unless explicitly adapted.

The most advanced valuation systems incorporate multisource data fusion. Research demonstrates that including on-chain behavior, liquidity measures, and macroeconomic variables substantially improves predictive accuracy because different data types dominate predictive power at different horizons. Short-horizon predictions are heavily influenced by transaction volume, wallet activity, and liquidity shifts. Long-horizon forecasts depend more on macroeconomic signals, adoption trends, and institutional flows. Models that cannot adjust weighting across these horizons tend to underperform.

Comparisons across model families reveal an important pattern. Methods that rely on a single data category or fixed structural assumptions perform inconsistently across regimes. Models capable of integrating multiple modalities and adapting internal weighting show superior stability. This observation provides context for the architecture developed by the author. The system was designed specifically to overcome limitations of single-source models by integrating diverse indicators through adaptive attention.

Dynamic valuation frameworks seek to formalize how token prices emerge from the interaction of multiple evolving signals. Unlike single-factor models, which assume stable relationships between price and one dominant variable, dynamic frameworks incorporate time-varying dependencies across several classes of indicators. Research in digital asset markets shows that meaningful predictive structure arises only when models integrate information from multiple layers of the ecosystem. These include on-chain behavior, network participation, liquidity distribution, and macroeconomic conditions.

A general dynamic valuation relationship can be expressed as:

$$P(t) = f\left(X_{\mathrm{on}}(t),\, X_{\mathrm{net}}(t),\, X_{\mathrm{DeFi}}(t),\, X_{\mathrm{off}}(t);\, \theta(t)\right), \tag{5}$$

where $X_{\mathrm{on}}(t)$ are on-chain metrics, $X_{\mathrm{net}}(t)$ network activity, $X_{\mathrm{DeFi}}(t)$ DeFi liquidity indicators, $X_{\mathrm{off}}(t)$ off-chain financial flows, and $\theta(t)$ time-varying parameters. This structure highlights that token value emerges from the joint influence of heterogeneous and dynamically shifting variables.

This functional form reflects the empirical finding that no single input class dominates across all conditions. On-chain activity tends to have the strongest predictive power over short horizons, often within a window of 12 to 72 hours. DeFi liquidity variables can signal medium-horizon shifts as capital reallocates among pools. Off-chain macroeconomic and institutional variables influence long-horizon structure, including risk appetite and capital inflow cycles. Network growth measures have both short- and long-horizon components [6, p. 990-1029].

The dynamic valuation framework also incorporates nonlinearities. Token behavior often changes abruptly during governance updates, liquidity crises, or regulatory news cycles. These events create structural breaks in the data-generating process. Static parameters cannot respond to such changes. Therefore, a dynamic formulation must allow the weight of each input class to vary over time. This can be modeled as:

$$P(t) = \sum_{k} w_k(t)\, g_k\left(X_k(t)\right), \tag{6}$$

where $X_k(t)$ denotes the inputs of modality $k$, $w_k(t)$ represents time-dependent modality weights, and $g_k$ represents transformation functions learned from data. The weights $w_k(t)$ shift depending on volatility levels, liquidity depth, or macroeconomic stress.

Velocity-adjusted valuation incorporates time-varying transactional movement. The expression

$$P(t) = \frac{T(t)}{V(t)\,S(t)} \tag{7}$$

is not static. Velocity V(t) reacts to supply unlocks, liquidity migration, and changing reward structures. This creates a dynamic interaction between user behavior and price.

Flow-based valuation introduces the effect of protocol revenue:

$$P(t) = \sum_{i} \frac{R_i - C_i}{\left(1 + r(t)\right)^{i}}, \tag{8}$$

Every component is dynamic. Revenue can change following protocol updates. Costs may change due to liquidity shortages or risk premiums. Discount rates rise with uncertainty and fall when macroeconomic conditions stabilize.

The AI architecture developed by the author is designed as a multisource forecasting engine that processes on-chain metrics, network indicators, DeFi liquidity variables, and off-chain financial flows. It was constructed to address deficiencies identified in prior models: limited data diversity, fixed input weighting, and weak robustness under noisy or incomplete conditions. The associated patent describes a series of mechanisms that enable the model to combine these data classes into a unified dynamic valuation system.

At its core, the architecture uses separate encoders for each modality. Each encoder transforms raw inputs into latent vectors:

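$$z_k(t) = E_k\left(X_k(t)\right), \tag{9}$$

where $k$ indexes the modality, $X_k(t)$ denotes its raw inputs, and $E_k$ is the corresponding encoder. These latent vectors capture modality-specific structure such as transaction patterns, liquidity shifts, or network expansion.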

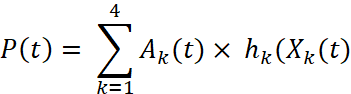

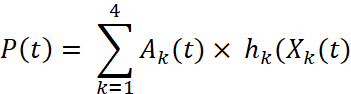

The model then applies attention-based fusion to dynamically weight these representations. The fusion layer computes a set of modality weights:

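$$\alpha_k(t) = \frac{\exp\left(s\left(z_k(t),\, c(t)\right)\right)}{\sum_{j} \exp\left(s\left(z_j(t),\, c(t)\right)\right)}, \tag{10}$$

where $s(\cdot,\cdot)$ denotes the attention score and $c(t)$ is a contextual signal derived from market conditions, volatility levels, or data reliability metrics. The final fused representation is given by: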

$$z(t) = \sum_{k} \alpha_k(t)\, z_k(t). \tag{11}$$

This fusion step allows the model to adjust the relative importance of modalities. For example, during high-volatility periods, the model may increase weight on short-horizon signals such as transaction spikes and liquidity withdrawals. During calmer periods, network growth and macroeconomic flows become more influential.
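The fusion step described above can be sketched in plain Python. The text does not specify how attention scores are computed, so this example assumes a scaled dot product between each modality's latent vector and the context vector, followed by a softmax. The function names, modality names, and vectors are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(latents, context):
    """Weight per-modality latent vectors by their (assumed) scaled dot-product
    score against the context vector and return (weights, fused_vector)."""
    names = list(latents)
    dim = len(context)
    if any(len(latents[name]) != dim for name in names):
        raise ValueError("all latent vectors must match the context dimension")
    scores = [
        sum(z * c for z, c in zip(latents[name], context)) / math.sqrt(dim)
        for name in names
    ]
    alphas = softmax(scores)
    fused = [
        sum(alpha * latents[name][i] for alpha, name in zip(alphas, names))
        for i in range(dim)
    ]
    return dict(zip(names, alphas)), fused


# Hypothetical 2-dimensional latents and a context that favours on-chain signals.
latents = {"on_chain": [1.0, 0.0], "macro": [0.0, 1.0]}
context = [2.0, 0.0]

weights, fused = fuse(latents, context)
print(weights, fused)
```

Because the weights come from a softmax, they are positive and sum to one, so the fused vector is a convex combination of the modality representations. A trained system would learn the scoring function rather than fix it as done here.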

This dynamic weighting mechanism mirrors empirical findings. Research shows that model performance improves when attention layers are used to adaptively emphasize signals depending on horizon and regime. The architecture operationalizes this principle in a systematic manner.

A distinctive feature of the author’s model is the inclusion of a self-supervised enrichment module. During training, the system masks a portion of the input vector and attempts to reconstruct it from the fused representation:

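$$\mathcal{L}_{\mathrm{rec}} = \left\| X_{M}(t) - \hat{X}_{M}(t) \right\|^{2}, \tag{12}$$

where $X_{M}(t)$ is the masked portion of the input and $\hat{X}_{M}(t)$ is its reconstruction from the fused representation.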

This reconstruction loss is combined with the forecasting loss:

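$$\mathcal{L} = \mathcal{L}_{\mathrm{forecast}} + \lambda\, \mathcal{L}_{\mathrm{rec}}, \tag{13}$$

where $\lambda$ weights the reconstruction term relative to the forecasting term.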

Self-supervised enrichment strengthens the model’s internal representation by forcing it to learn relationships among signals without requiring additional labeled data. Studies indicate that reconstruction-based auxiliary tasks are effective in environments where data quality fluctuates. This is particularly relevant in crypto markets, where node discrepancies, API outages, and inconsistent reporting occur regularly.
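A minimal sketch of the masking and loss combination described above follows. It makes several assumptions that the text does not confirm: masked entries are replaced with zeros, the reconstruction error is a mean squared error over masked positions only, and the two losses are combined with a fixed weight `lam`. All names and values are hypothetical.

```python
import random


def mask_inputs(x, mask_ratio, rng):
    """Zero out a random subset of positions; return the masked copy and the indices."""
    if not 0.0 < mask_ratio <= 1.0:
        raise ValueError("mask_ratio must be in (0, 1]")
    n_mask = min(len(x), max(1, round(mask_ratio * len(x))))
    masked_idx = sorted(rng.sample(range(len(x)), n_mask))
    hidden = set(masked_idx)
    masked = [0.0 if i in hidden else value for i, value in enumerate(x)]
    return masked, masked_idx


def reconstruction_loss(x, x_hat, masked_idx):
    """Mean squared error evaluated only on the masked positions."""
    return sum((x[i] - x_hat[i]) ** 2 for i in masked_idx) / len(masked_idx)


def total_loss(forecast_loss, rec_loss, lam=0.1):
    """Forecasting loss plus a weighted reconstruction penalty."""
    return forecast_loss + lam * rec_loss


rng = random.Random(0)  # fixed seed so the example is repeatable
x = [1.0, 2.0, 3.0, 4.0]  # hypothetical input vector
masked_x, masked_idx = mask_inputs(x, mask_ratio=0.5, rng=rng)

perfect = reconstruction_loss(x, x, masked_idx)       # ideal reconstruction
naive = reconstruction_loss(x, masked_x, masked_idx)  # masked zeros left unfilled
print(masked_idx, perfect, naive, total_loss(1.0, naive))
```

In a full model the reconstruction would come from a decoder applied to the fused representation. Here the naive case simply reuses the masked input to show that unfilled gaps are penalized.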

The architecture also incorporates a multi-horizon decoder. It produces forecasts for several future intervals simultaneously:

$$\left\{\hat{P}(t + h_1),\, \hat{P}(t + h_2),\, \hat{P}(t + h_3)\right\} = \mathrm{Dec}\left(z(t)\right), \tag{14}$$

where $h_1$, $h_2$, and $h_3$ may represent short-, medium-, and long-horizon time frames. Multi-horizon prediction is valuable in tokenized economies because different participants operate at different temporal scales. Market makers focus on short-horizon liquidity conditions, while institutional investors track medium- and long-horizon adoption and macroeconomic trends.
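To make the multi-horizon idea concrete, the sketch below maps one shared representation to several forecasts through separate output heads. It assumes a simple linear head per horizon, which the text does not specify. The horizon labels, weights, and biases are hypothetical placeholders rather than trained values.

```python
def decode_horizons(fused, heads):
    """Apply one linear head (weights, bias) per horizon to the shared fused vector."""
    forecasts = {}
    for horizon, (weights, bias) in heads.items():
        if len(weights) != len(fused):
            raise ValueError(f"head '{horizon}' does not match the fused dimension")
        forecasts[horizon] = sum(w * z for w, z in zip(weights, fused)) + bias
    return forecasts


# Hypothetical fused representation and per-horizon heads.
fused_state = [0.5, -1.0]
heads = {
    "1d": ([1.0, 0.0], 0.0),
    "7d": ([0.0, 1.0], 0.5),
    "30d": ([1.0, 1.0], 0.0),
}

forecasts = decode_horizons(fused_state, heads)
print(forecasts)
```

Sharing the representation while separating the heads lets each horizon emphasize different components of the same state, which matches the observation that participants operate at different temporal scales.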

The infrastructure described in the patent supports real-time operation. Data ingestion pipelines harmonize timestamps, remove outliers, and interpolate missing values. Normalization layers stabilize distributions across modalities. The system is optimized for low-latency inference to support continuous updates of token valuations. This architecture embodies the theoretical and empirical insights discussed in earlier sections. It integrates diverse signals, adapts to regime changes, and maintains robustness under imperfect data conditions. The model therefore serves as a dynamic valuation engine aligned with the structural complexity of tokenized markets [7].
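Two of the ingestion steps named above, interpolation of missing values and normalization, can be sketched with the Python standard library. This is an illustration under simple assumptions (linear interpolation on an evenly spaced series, z-score normalization with population standard deviation), not the pipeline described in the patent. Timestamp harmonization and outlier removal are omitted for brevity, and all names and data are hypothetical.

```python
import statistics


def interpolate_missing(values):
    """Linearly fill None gaps between observed neighbours; gaps at the edges
    copy the nearest observed value."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            frac = (i - left) / (right - left)
            filled[i] = values[left] + frac * (values[right] - values[left])
    return filled


def zscore(values):
    """Centre to zero mean and scale to unit population standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Hypothetical liquidity readings with gaps from an API outage.
raw = [10.0, None, 14.0, None, None, 20.0]
filled = interpolate_missing(raw)
normalized = zscore(filled)
print(filled)
print([round(v, 3) for v in normalized])
```

Normalizing each modality separately keeps inputs with very different scales, such as gas usage and interest rates, comparable before they reach the encoders.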

Dynamic valuation models must be evaluated not only on theoretical coherence but also on their empirical behavior across varying market regimes. Tokenized economies undergo phases of rapid expansion, consolidation, liquidity shocks, and external macroeconomic pressure. These shifts alter the relative influence of different data modalities. Empirical studies show that predictive accuracy improves significantly when models adjust to regime-specific signal dominance.

Short-horizon behavior is driven primarily by on-chain activity and liquidity conditions. Transaction spikes, wallet clustering, unusual gas usage, and liquidity pool rebalancing often precede immediate changes in price. The predictive lead of these signals ranges from several hours to two days depending on protocol design. Research confirms that short-term price deviations are strongly correlated with shifts in transactional volume and liquidity concentration. In this window, macroeconomic variables exert minimal influence.

Medium-horizon dynamics reflect the combination of network activity, circulating supply changes, staking participation, and DeFi incentive structures. For example, a rise in the proportion of staked tokens can reduce circulating supply and temporarily moderate volatility. Liquidity migration across chains can create delayed but measurable price impacts. Institutional flows into or out of liquidity pools may also produce multi-day trends. These effects develop more slowly because they require behavioral adjustments from diverse participants, including validators, yield seekers, and protocol governors.

Long-horizon structure is shaped by macroeconomic conditions and fundamental network growth. Interest rate cycles, regulatory developments, risk premiums on digital assets, and regional capital allocation decisions influence token value over multi-week and multi-month periods. Studies show that sustained adoption growth, reflected in metrics such as active addresses, validator participation, and long-term holder concentration, correlates strongly with multi-quarter valuation trends. Although these signals exhibit slower dynamics, their effects are deeper and more persistent [5, p. 4553-4575].

The AI architecture developed by the author reflects this hierarchy. Its dynamic attention weighting allows the model to increase the influence of high-frequency signals during volatile periods while shifting weight toward macroeconomic and network growth indicators in more stable phases. Observations during testing reveal that attention weights adapt smoothly to volatility changes. During market stress, the model elevates sensitivity to transaction irregularities and liquidity shifts. During consolidation phases, attention weights become more evenly distributed across modalities.

A significant empirical advantage of the architecture lies in its self-supervised enrichment module. Real-world blockchain data is often inconsistent. API outages, stale validator nodes, and latency differences between on-chain indexing services create incomplete or misaligned data inputs. The reconstruction task helps stabilize the latent representation by identifying internal correlations even when raw inputs are partially degraded. This improves robustness and reduces prediction variance.

The model’s multi-horizon decoder is empirically beneficial as well. In backtesting, the short-horizon decoder responds most strongly to volatility bursts, while the medium- and long-horizon decoders track broader structural signals. This outcome is consistent with the theoretical expectation that no single predictive horizon dominates across all market conditions. Multi-horizon estimation provides a richer view of valuation pathways because it captures temporal decomposition of market behavior [9].

These empirical findings highlight the strengths of dynamic valuations based on multisource attention. They also support the broader argument that valuation in tokenized economies must move beyond static analytical models. Data heterogeneity, structural instability, and behaviorally driven feedback loops suggest that only dynamic computational systems can fully capture the complexity of token value formation.

Dynamic valuation in tokenized economies presents a set of challenges not encountered in traditional financial markets. Tokens combine heterogeneous economic roles. Their value depends on protocol incentives, network effects, liquidity distribution, governance structures, and macroeconomic conditions. Existing valuation frameworks, whether theoretical or statistical, capture only part of this complexity. This article developed a unified view of dynamic valuation, combined with a multisource AI architecture designed specifically for these environments [3, p. 6-32].

The theoretical foundations identify key value drivers, including velocity dynamics, utility-based demand, staking yield structures, and protocol revenue flows. These frameworks provide essential insight, yet they remain insufficient without integration. Tokenized ecosystems produce high-dimensional and rapidly shifting data. Models must therefore accommodate interactions among signals rather than interpret them in isolation. This requirement motivates multisource dynamic formulations.

Comparative analysis shows that classical models fail during structural breaks. Tree-based models handle noisy signals but lack temporal memory. Recurrent networks capture some long-term dependencies but scale poorly with high-dimensional inputs. Transformer models improve long-range dependencies but require explicit adaptation to the structure of financial data. These limitations justify the need for custom architectures capable of ingesting multiple modalities and adjusting signal weighting continuously [4].

The AI architecture developed by the author integrates these ideas. Separate encoders process different data modalities, dynamic attention assigns contextual weight to each, and self-supervised reconstruction improves representation robustness. The model operates as a dynamic valuation engine that responds to volatility, behavioral shifts, and macroeconomic cycles. Multi-horizon decoding further enhances interpretability by decomposing price dynamics across temporal scales.

These contributions have several implications for the future of valuation research. First, valuation frameworks must increasingly incorporate machine learning architectures that support multimodal integration. Theoretical models remain essential for interpretability, but their practical use depends on embedding them within data-driven systems. Second, market structure in tokenized economies is becoming more fragmented as activity spreads across multiple chains and layers. Valuation systems must therefore handle cross-chain liquidity, heterogeneous consensus mechanisms, and protocol-specific incentives. Third, real-time forecasting is becoming critical for risk management, liquidity provision, and governance. Models must process data continuously and adjust to regime shifts without retraining.

Tokenized economies require valuation frameworks that reflect their multidimensional and dynamic structure. Traditional analytical models capture only isolated aspects of token value and fail to incorporate rapid changes in liquidity, incentives, and user behavior. Empirical evidence shows that valuation accuracy improves when models integrate heterogeneous data sources and adapt to market regimes [8].

This article presented a unified analysis of dynamic valuation in tokenized markets and introduced a multisource AI architecture developed by the author. The model integrates on-chain, network, DeFi, and macroeconomic signals through dynamic attention. Its self-supervised enrichment module enhances robustness under noisy conditions, and its multi-horizon decoder reflects the temporal decomposition of market behavior [10, p. 12968-12983].

The combined insights of theory, comparative modeling, mathematical formulation, and empirical analysis demonstrate that dynamic, multimodal forecasting systems offer significant advantages over static or single-source approaches. As tokenized economies continue to expand, the ability to integrate diverse signals and adjust to structural shifts will become essential for valuation research, risk management, and strategic decision making.
