Introduction

Currently, robots in current robot teams are not entirely capable of doing the following tasks: simulating a single robot's logical behavior, having a robot make a decision based on a group task, and having a robot assess the task's outcome. There are practically no works where the authors describe the task in its entirety: from formulation to implementation and evaluation of results. Many publications in these areas are primarily theoretical in nature [1, p. 60-71; 2, p. 1019-1033; 3, p. 574-614; 4, p. 21; 5, p. 61]. The existing examples of online robot development systems are narrowly focused on the manufacturer of software or hardware for computer devices [6, 7]. The findings reported in [8; 9, p. 21; 10; 11, p. 307-321; 12, p. 1-14; 13, p. 1-14] are the most closely related to the topic of the author's investigation.

The suggested modeling approach enables the adjustment of various kinds of robots and their group behaviors while producing sufficient evaluations of the completed group activity. The system for simulating the intentional collective behavior of a group of robots is based on the multi-agent simulation paradigm and was implemented in the modeling NetLogo software [15]. During the simulations the different behavior algorithms were tested.

The study's focus is on mobile robot models with cooperative capabilities. The interaction among group members is crucial as it establishes a continuous feedback loop. The objective function is to guide the behavior of the entire robot group within the parameters of a collaborative job, rather than just one robot. This is an extension of the previous study where robots were not interacting during their work [16].

Model composition

The multi-agent simulation model consists of a central control module (CCM), intelligent robots, a model of team interaction, and a model of the external environment.

External environment model

For an intelligent robot to function in unpredictable environments, it needs to have an internal model of the outside world.

The robot's model of its external environment consists of a digital map of the region, algorithms for analyzing data from multiple information sources (sensors, cameras, GPS, etc.) to get the location of other robots and obstructions to movement more clear, and other features. The first set of information that the robot's intelligent navigation system receives comes from the external environment model.

The architecture of an intelligent robot can be represented as follows: intelligent robot → intelligent navigation system + movement subsystem → sensory perception subsystem (gathering knowledge about the external environment). Data from the sensory subsystem and a knowledge base serve as the foundation for the external environment model.

The memory of the robot must hold data regarding normal responses to information signals from sensors and control devices, as well as data regarding the condition of actuators and resources that are available (such as other robots, time that is available, etc). Algorithms for converting input data into actuator control signals and a filter system that permits the allocation of data important to the robot, must be included in the memory.

Intelligent robot

The robot receives and integrates information from three sources:

- from the human user in the form of target designations;

- from the sensors of the sensor system;

- from its own knowledge base.

The robot's operating environment could be unknown beforehand or could change suddenly while it's working. The properties of the external environment, such as soil composition, air temperature, wind direction and speed, etc., may be unknown to an intelligent robot.

Operational processing of current information acquired from sensors and other sources must make up for the lack of a priori information in order to guarantee logical target behavior of the robot under unpredictable settings.

Two guiding principles underpin the development of an adaptable control system for an intelligent robot with multiple operating modes:

- "primary" navigation ensures the robot moves toward the target in accordance with human directions and instructions;

- "refined" navigation depends on up-to-date environmental data obtained from the sensor system.

Consequently, a fuzzy control subsystem is added to the standard classical control scheme to create a hierarchical two-loop control system. This method considers changes in knowledge from precise to uncertain and vice versa.

Model of interaction in a team of intelligent robots

Within a multi-agent simulation model, a collection of robots is managed by a central control module that houses a shared database, an external environment model, and the group's knowledge base.

The shared database contains an electronic terrain map (ETM), the number of robots, their tactical and technical characteristics (TTC), and the area's size for the group of robots to operate in.

The knowledge base of a group of robots consists of routes and algorithms for creating them, algorithms for determining whether obstacles are present and can be avoided, algorithms for resolving issues with crossing routes (meeting robots), an algorithm for deciding how to move (choosing a speed, direction, and time interval), algorithms for managing actuators, algorithms for evaluating the condition of actuators, and algorithms for deciding whether to reach the destination at the end of the route (reaching the goal).

Simulation scenario

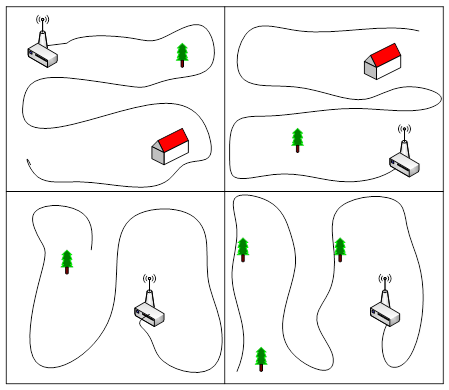

The following scenario is suggested in order to examine the goal-oriented group behavior of a group of robots using a multi-agent simulation model [17, p. 195-240; 18, p. 29-48]: A central control system (CCS) housed on the main robot controls N research robots that are dispersed throughout a portion of land. The primary robot needs to map out its path through a specific area, and the research robots work on its main goal. The ECM specifies the territory's dimensions as a rectangle or square. The research robots are distributed among areas of responsibility and given trajectories for territory exploration by algorithms for route construction that rely on data from the environmental model (EM) (fig. 1).

Fig. 1 Distribution of robotic explorers by areas of responsibility for exploring the territory

A more accurate map of the landscape for determining the primary robot's path is the end product of the preparatory phase. Every robot transmits the following kind of codegram to the CCU while it is working.

The group robots' positions and states, the existence of obstacles, and each robot's completion of the task are all tracked by the control system, which uses algorithms derived from the knowledge base. Each robot has an on-board Central Processing Unit (CPU) which uses data from a collection of its sensors to create the codegram that is displayed in table 1.

Table 1

The codogram from the on-board robot CPU

Robot number | Coordinates | Obstacle flag | Battery reserve | Fault |

N | X,Y | 0-1 | 1-100 | 0-1 |

The route is made up of several linked segments, each of which has a beginning and a finishing point. Each robot in the group receives a corresponding codegram from the CCM, which determines the robot's movement's speed, direction, and duration in order for it to reach the working segment endpoint (the one it is now traveling along) (table 2).

Table 2

The codogram from CCM

Robot ID | Speed | Direction, deg. | Time, msec |

1-N | 1-3 | 0-359 | 1-100 |

After receiving the codegram, the robot utilizes its low-level commands to turn on its rotation and movement actuators for the duration of the codegram. Following receipt of the robot's response codegram, the CCM compares its current coordinates with the working segment's endpoint and decides whether to move on to the next working segment or, in the event that the specified point is not accurately reached, to issue a command for additional movement. In the event that the robot reaches the final segment's finish point, the CCM decides when to stop moving along the route.

CCM algorithms:

In the preliminary preparation mode:

- Building a matrix of areas of terrain permitted for the robot's movement, taking into account its performance characteristics and cartographic data on the relief (slope and elevation angle), vegetation, soil and water surface.

- Building primary routes for surveying (without crossing the trajectory of movement with the trajectories of other robots).

- Algorithm for plotting a route and following the route: issuing control commands and monitoring the movement of each robot (direction and speed), monitoring its condition (operating time reserve).

- Assessing the proximity to the end point of the route and making a decision on reaching it by each robot.

- Making a decision on the presence of an obstacle on the robot's route to bypass it and returning to the route.

- Collecting information on the location of individual robots.

- Determining the presence of a communication channel with robots (in the absence of codograms from one of them, remember its last location).

- Algorithm for updating the obstacle matrix and plotting them on the map.

In the target scenario mode, in addition to paragraphs 2-7 of the preliminary preparation mode:

- Constructing the robot's route within the target scenario (taking into account the intersection of the trajectory with the trajectories of other robots).

- Making a decision on the interaction of robots in the event of an intersection of their trajectories.

- Actions upon reaching the scenario goal.

The environmental model (EM) includes a matrix of coordinates of areas permitted for robot movement, a matrix of obstacles, a matrix of current coordinates of the group robots' location, a matrix of states of (technical) robots, and algorithms for obtaining and calculating data for these matrices are stored in the knowledge base. The output information of the EM is a matrix of robot routes.

The matrix of coordinates of areas permitted for robot movement is built on the basis of enumerating terrain data from the ECM, limiting the zones available for movement based on the permissible steepness of the surface, the presence of vegetation or water surface, and soil properties.

The matrix of obstacles is compiled on the basis of information from robots in the preliminary preparation mode. The matrix of current coordinates of the group robots' location is updated at a certain time interval. The matrix of states of the group robots is updated at a certain time interval and includes information on their serviceability, range, direction, and speed of movement.

Based on this model, the robot must be equipped with the following sources of information about the external environment:

- GPS sensor for global positioning on digital maps;

- two ultrasonic distance sensors for detecting and recognizing obstacles;

- a compass as a rotation sensor to determine the orientation of the robot in space;

- a tilt angle sensor relative to the horizon;

- a movement detection system to determine situations of forced stop of the robot (it is possible to make a decision on the CCM).

- On-board CPU performs the following algorithms:

- Determining the presence of a communication channel with the CCM (in the absence of codegrams from the CCM, issue the "Stop" command);

- Determining its own location;

- Receiving and processing codegrams;

- Detecting and recognizing obstacles;

- Diagnostics of the state of actuators and battery.

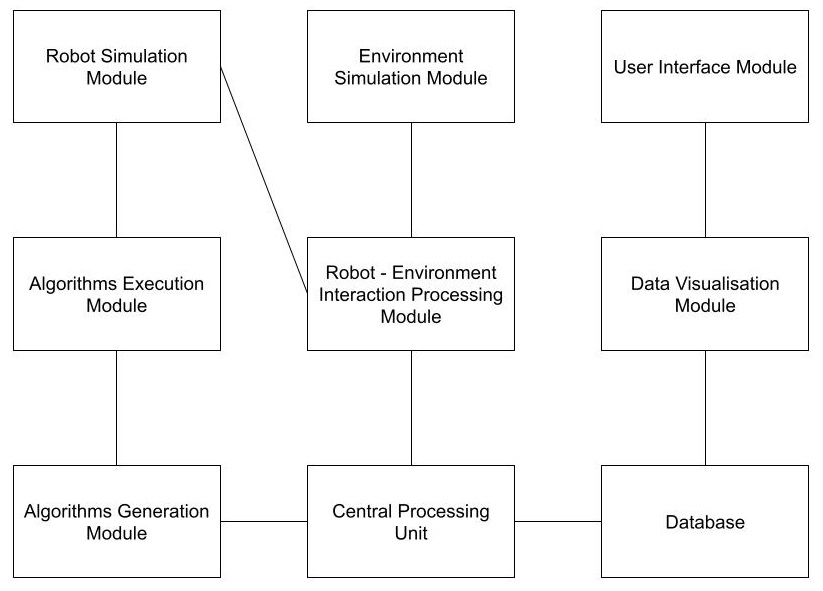

The system for modeling the purposeful group behavior of a team of robots within the framework of a multi-agent simulation model includes formalized models of robot agents and algorithms for their inter-agent interaction (fig. 2).

Fig. 2. Structure of the system for modeling the goal-oriented group behavior of a team of robots within the framework of a multi-agent simulation model

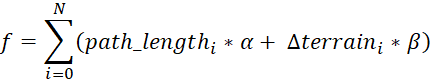

Suitable paths are generated by each robot of the group individually, then they are optimized in order to reduce their length and fulfill the condition of non-intersection with mountains. The loss function (quality function, loss) is calculated as path_length * length_weight + sum of height_changes * height_changes_weight, i.e.

, (1)

, (1)

Where:

– length of the path of the i-th route fragment;

– length of the path of the i-th route fragment; – route coefficient, which reduces the value of the path length;

– route coefficient, which reduces the value of the path length; – i-th landscape difference;

– i-th landscape difference; – terrain factor that increases the penalty for hitting a "mountain".

– terrain factor that increases the penalty for hitting a "mountain".

The route coefficient ![]() was taken as 0.01, and the terrain coefficient

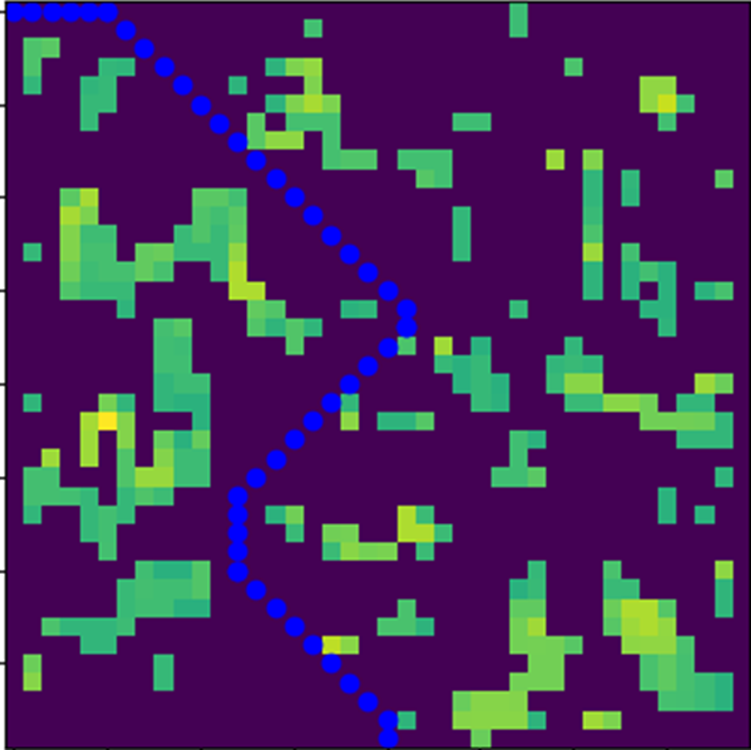

was taken as 0.01, and the terrain coefficient ![]() as 100, so that the algorithm optimizes the route, which will mainly run along the ‘plains’. The algorithm stops when the maximum number of iterations is reached. Let us have a landscape and the starting and ending points shown in figure 3. After each robot has searched for its optimal route, the CMU "glues" all the paths together based on the proximity of points from the routes of adjacent sections. The result of the simulation is the optimal route for the main robot.

as 100, so that the algorithm optimizes the route, which will mainly run along the ‘plains’. The algorithm stops when the maximum number of iterations is reached. Let us have a landscape and the starting and ending points shown in figure 3. After each robot has searched for its optimal route, the CMU "glues" all the paths together based on the proximity of points from the routes of adjacent sections. The result of the simulation is the optimal route for the main robot.

Fig. 3. Results of modeling the goal-oriented group behavior of a team of robots within the framework of a multi-agent simulation model

Conclusion

The impact of the quantity of reference points and iterations on the outcome of the shortest path search and the caliber of the created route was examined as a consequence of experimental investigations. Based on the experiment results, it was discovered that the number of reference points and iterations used to find the best route on the simulation field both positively affect the optimization results. This is because the final route improves significantly when the reference points are adjusted also significantly. Operations can be carried out in a mode that is nearly real-time because of the computation interval that the robot uses to decide which route to take. Mathematical model of autonomous distributed group is specified formally as a set of interacting state machines with internal states;

We believe that our results demonstrated that our group strategies were effective. Robots in the different conditions had little need to correct issues in the spatial configuration by getting closer on the terrain. While future work should explore if there are meaningful differences between them, we believe that the current model can be used in practical applications.

As a result, we have a domain-independent distributed algorithm that implements the nearly real-time self-organizing group of robots operating according to a pre-designed scenario.

.png&w=640&q=75)