I. Introduction

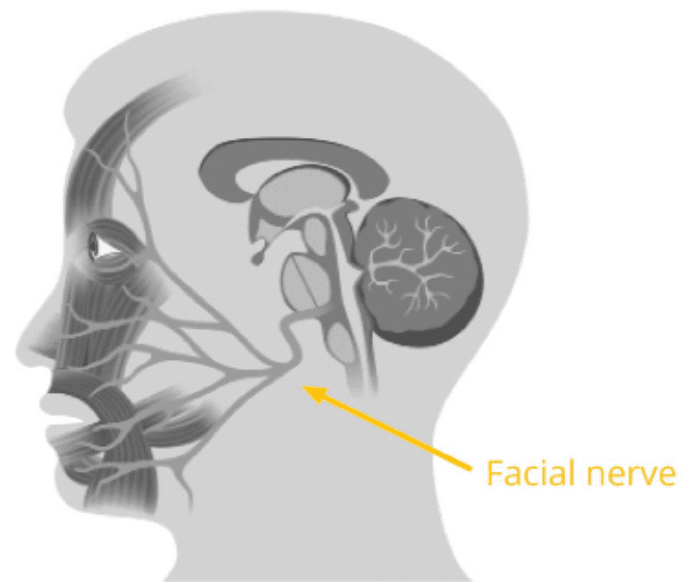

Our face is an intricate, highly differentiated part of our body; in fact, it is one of the most complex signal systems available to us. It includes over 40 structurally and functionally autonomous muscles, each of which can be triggered independently of the others. The facial muscular system is the only place in our body where muscles are attached either to a bone and facial tissue (other muscles in the human body connect two bones) or to facial tissue only, such as the muscles surrounding the eyes or lips. Almost all facial muscles are triggered by a single nerve, the facial nerve. There is one exception, though: the upper eyelid is innervated by the oculomotor nerve, which is responsible for a large part of eye movements, pupil contractions, and raising the eyelid.

Obviously, facial muscle activity is highly specialized for expression - it allows us to share social information with others and communicate both verbally and nonverbally. In short we could say: Facial expressions are movements of the numerous muscles supplied by the facial nerve that are attached to and move the facial skin.

The facial nerve emerges from deep within the brainstem, leaves the skull slightly below the ear, and branches off to all muscles like a tree. Interestingly, the facial nerve is also wired up with much younger motor regions in our neo-cortex (neo as these areas are present only in mammalian brains), which are primarily responsible for facial muscle movements required for talking.

As the name indicates, the brainstem is an evolutionarily very ancient brain area which humans share with almost all living animals. Which of the two regions is active, brainstem or motor cortex, depends on whether a facial expression is involuntary or voluntary. While the brainstem controls involuntary and unconscious expressions that occur spontaneously, the motor cortex is involved in consciously controlled and intentional facial expressions. Often, the amygdala (both left and right) is associated with the processing of life-threatening, fearful events or stimuli of high sexual appeal and bodily pleasure. Besides fear and pleasure processing, the amygdala has been found to be generally responsible for autonomic functions associated with emotional arousal.

Fig. 1

In everyday language, emotions are relatively brief conscious experiences characterized by intense mental activity and a high degree of pleasure or displeasure. In scientific research, a consistent definition has not yet been found. There are certainly conceptual overlaps between the psychological and neuroscientific underpinnings of emotions, moods, and feelings.

Facial expression plays a major role in non-verbal communication: according to Mehrabian [1], 55% of communicative cues can be judged from facial expression. As early as 1872, Darwin published The Expression of the Emotions in Man and Animals, in which he argued that all humans, and even other animals, show emotion through remarkably similar behaviours. Darwin treated the emotions as separate discrete entities, or modules, such as anger, fear, and disgust. Several lines of research (neuroscience, perception, and cross-cultural evidence) support Darwin's conceptualization of emotions as separate discrete entities. In 1971, Paul Ekman and Wallace V. Friesen's work on facial expressions [2] related a person's emotional state to their psychological state and described the Facial Action Coding System (FACS), which is based on the muscular contractions that produce our facial expressions. The authors also defined six basic facial expressions: Happy, Surprise, Disgust, Sad, Angry, and Fear (fig. 2). These are referred to as the six universal emotions and are used by most researchers. Later, a seventh emotion, contempt, was added.

Fig. 2. Seven basic expressions

There is an ongoing discussion in emotion research on how the different emotions could be distinguished from each other:

- Discrete emotion theory assumes that the seven basic emotions are mutually exclusive, each with different action programs, facial expressions, physiological processes, and accompanying cognitions.

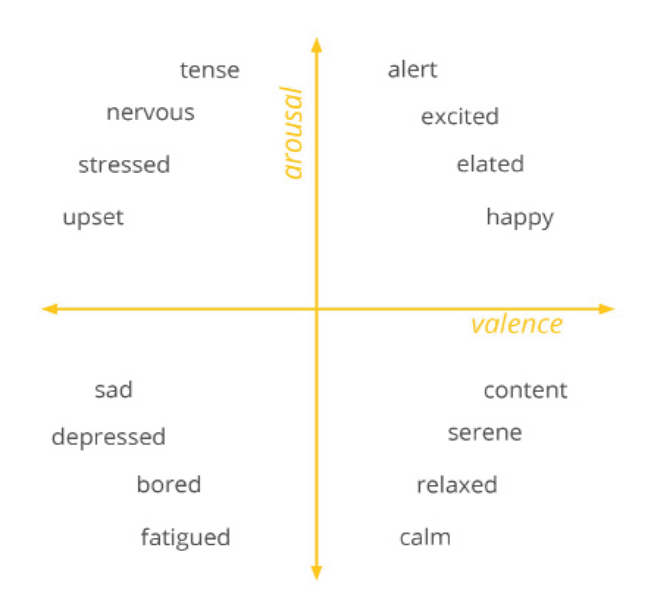

- Dimensional models assume that emotions can be grouped and arranged along two or more dimensions. Most dimensional models use valence (positive vs. negative emotions) as the horizontal axis and arousal (activating vs. calming emotions) as the vertical axis. With valence and arousal, more subtle emotional classifications are possible, for example breaking "happiness" into a less aroused "happy" state and a more aroused "elated" state. Again, facial expressions are core indicators of underlying emotional states.

Fig. 3

With facial expression analysis you can test the impact of any content, product or service that is supposed to elicit emotional arousal and facial responses: physical objects such as food samples or packages, videos and images, sounds, odors, tactile stimuli, etc. Involuntary expressions, as well as a subtle widening of the eyelids, are of particular interest because they are considered to reflect changes in the emotional state triggered by actual external stimuli or mental images.

Now which fields of commercial and academic research have been adopting facial expression analysis techniques lately? Here is a peek at the most prominent research areas:

- Consumer neuroscience and neuromarketing;

- Media testing & advertisement;

- Psychological research;

- Clinical psychology and psychotherapy;

- Medical applications & plastic surgery;

- Software UI & website design;

- Engineering of artificial social agents (avatars).

II. Facial expression recognition

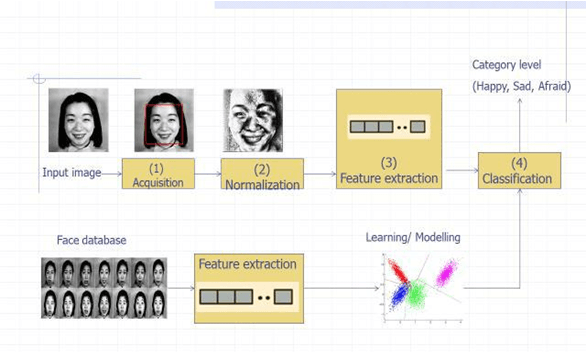

Facial expression recognition (FER) gives machines a way of sensing emotions and is one of the most widely used applications of artificial intelligence and pattern analysis. It can be divided into four major steps: (1) face acquisition, which automatically finds the face region in the input images; (2) normalization of intensity, size and shape; (3) facial data extraction and representation, which extracts and represents information about the encountered facial expression in an automatic way; and (4) facial expression recognition, which classifies the extracted features into the appropriate expressions. Figure 4 illustrates the block diagram of an FER system.

Fig. 4. Block diagram of Facial expression recognition system

III. Facial expression recognition systems

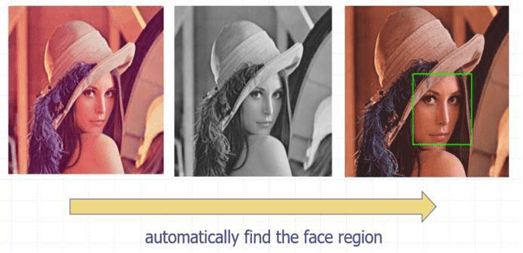

1. Face Acquisition

Face Acquisition is a process of localizing and extracting the face region from the background.

Fig. 5. Detect face region in the image

The Viola-Jones algorithm is a widely used mechanism for face detection. The method, devised by Viola and Jones [4] in 2001, allows image features to be detected in real time. The algorithm combines four key concepts:

- Simple rectangular features, called Haar-like features.

- Integral image for rapid feature detection

- AdaBoost machine-learning method

- Cascade classifier to combine many features efficiently

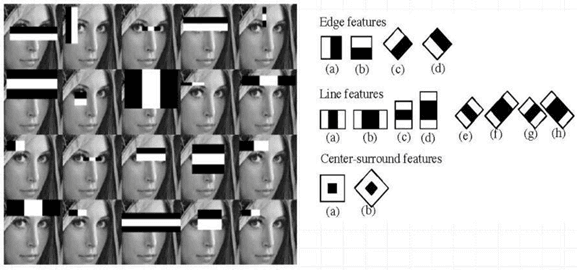

The Viola and Jones algorithm uses Haar-like features to detect faces (fig. 6). Given an image, the algorithm looks at many smaller subregions and tries to find a face by looking for specific features in each subregion. It needs to check many different positions and scales because an image can contain many faces of various sizes.

Fig. 6. Haar-like features for face detection

Viola-Jones was designed for frontal faces, so it detects frontal faces better than faces looking sideways, upwards or downwards.
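To make two of the four concepts more concrete, here is a minimal sketch of an integral image and a two-rectangle Haar-like feature computed from it. It uses plain Python lists rather than an image library, and the function names and the toy image are illustrative assumptions, not the original authors' implementation. AdaBoost training and the classifier cascade are not shown.

```python
def integral_image(img):
    """Return the summed-area table of a 2-D list of pixel intensities.

    The table has one extra row and column of zeros, so that
    table[r][c] equals the sum of all pixels above and to the left
    of position (r, c).
    """
    rows, cols = len(img), len(img[0])
    table = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0
        for c in range(cols):
            row_sum += img[r][c]
            table[r + 1][c + 1] = table[r][c + 1] + row_sum
    return table


def rect_sum(table, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return (table[bottom][right] - table[top][right]
            - table[bottom][left] + table[top][left])


def two_rect_feature(table, top, left, height, width):
    """Horizontal two-rectangle Haar-like feature.

    Splits the window into a left and a right half and returns
    (sum of right half) - (sum of left half). `width` should be even.
    """
    half = width // 2
    left_sum = rect_sum(table, top, left, height, half)
    right_sum = rect_sum(table, top, left + half, height, half)
    return right_sum - left_sum


# Toy 4x4 "image": dark left half (0), bright right half (9).
toy = [[0, 0, 9, 9] for _ in range(4)]
table = integral_image(toy)
print(rect_sum(table, 0, 0, 4, 4))          # 72
print(two_rect_feature(table, 0, 0, 4, 4))  # 72
```

Because every rectangle sum costs only four lookups regardless of its size, the same table can be reused for the many positions and scales the detector has to scan.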

2. Normalization

Normalization can improve the performance of the FER system and is carried out before feature extraction. The aim of this phase is to obtain images with normalized intensity and uniform size and shape. It includes processes such as orientation normalization, image scaling, contrast adjustment, and additional enhancement to improve the expression frames.

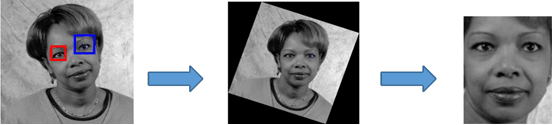

– Orientation normalization: it is carried out by a rotation based on the locations of the eyes and an affine translation defined by those locations.

Fig. 7. Orientation normalization
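The rotation step can be sketched as follows, assuming the two eye centres have already been located as (x, y) pixel coordinates. The function names and the example coordinates are hypothetical. The sketch only computes the tilt angle and rotates individual points; a real system would apply the same transform to the whole image.

```python
import math


def eye_alignment(left_eye, right_eye):
    """Return (tilt angle in degrees, midpoint between the eyes).

    Eyes are (x, y) pixel coordinates; the angle is that of the line
    from the left eye to the right eye relative to the x axis.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    angle = math.degrees(math.atan2(dy, dx))
    centre = ((left_eye[0] + right_eye[0]) / 2.0,
              (left_eye[1] + right_eye[1]) / 2.0)
    return angle, centre


def rotate_point(point, centre, angle_degrees):
    """Rotate `point` about `centre` by -angle, undoing the measured tilt."""
    theta = math.radians(-angle_degrees)
    x, y = point[0] - centre[0], point[1] - centre[1]
    xr = x * math.cos(theta) - y * math.sin(theta)
    yr = x * math.sin(theta) + y * math.cos(theta)
    return (xr + centre[0], yr + centre[1])


# Hypothetical eye positions in a tilted face.
left, right = (30, 40), (70, 60)
angle, centre = eye_alignment(left, right)
print(rotate_point(left, centre, angle))
print(rotate_point(right, centre, angle))
```

After the rotation both eyes share the same y coordinate, which is the condition orientation normalization aims for.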

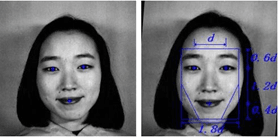

– Scaling normalization: facial region images are scaled and cropped to a normalized size for different experiments. A geometric face model [5] has been proposed to address this task.

Fig. 8. Geometric face model [5]

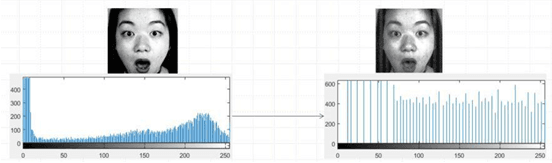

– Brightness normalization: face images captured at different times or positions often have different brightness. To reduce the effect of brightness, histogram equalization can be used to normalize the contrast of the images, and a median filter can be used to smooth the image. Histogram equalization transforms the values in an intensity image so that the histogram of the output image is approximately flat. It thereby enhances the contrast of the images and makes image features more distinguishable. Figure 9 shows an example of face images with their histograms before and after the normalization process.

Fig. 9. Normalize an image using Histogram equalization
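Histogram equalization as described above can be sketched in a few lines. This is a minimal version using the common cumulative-distribution mapping on a 2-D list of integer intensities; the function name and the toy image are assumptions for illustration, and an image library would normally be used in practice.

```python
def equalize_histogram(img, levels=256):
    """Histogram-equalize a 2-D list of integer intensities in [0, levels)."""
    flat = [p for row in img for p in row]

    # Histogram of intensity values.
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution of the intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(flat)
    cdf_min = next(c for c in cdf if c > 0)
    if cdf_min == total:
        # Constant image: there is no contrast to stretch.
        return [row[:] for row in img]

    # Look-up table that spreads the used levels over the full range.
    scale = (levels - 1) / (total - cdf_min)
    lut = [round(max(c - cdf_min, 0) * scale) for c in cdf]
    return [[lut[p] for p in row] for row in img]


# Low-contrast toy image: all values lie between 100 and 103.
toy = [[100, 100, 101],
       [101, 102, 103]]
for row in equalize_histogram(toy):
    print(row)
```

The darkest input level maps to 0 and the brightest to 255, so the narrow band of input intensities is stretched over the whole output range.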

3. Feature extraction

After a face has been detected in the observed scene, the next step is to extract information about the encountered facial expression in an automatic way. Feature extraction means finding and describing the relevant features within an image. These features can then be used as input to the classification. Feature extraction methods fall into two categories: geometric-based and appearance-based.

Geometric-based feature extraction works on facial components such as the eyes, mouth, nose and eyebrows, whereas appearance-based feature extraction works on a particular section of the face. Examples listed for the geometric-based category are principal component analysis (PCA), linear discriminant analysis (LDA), kernel methods and the Trace Transform. The PCA method [6, 7], called eigenfaces in [8, 9], is widely used for dimensionality reduction and has achieved strong performance in face recognition. In contrast to PCA, which encodes information in an orthogonal linear space, the LDA method encodes discriminatory information in a linearly separable space whose bases are not necessarily orthogonal. Researchers have demonstrated that LDA-based algorithms outperform the PCA algorithm for many different tasks [10, 11].
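As a rough illustration of PCA-style dimensionality reduction, the sketch below estimates only the first principal component of a set of feature vectors by power iteration and projects a sample onto it. This is a simplified stand-in, not the eigenfaces procedure of [8, 9]: the function names, the power-iteration shortcut and the toy data are all assumptions made for this example.

```python
import math
import random


def first_principal_component(samples, iterations=200, seed=0):
    """Estimate the leading principal component by power iteration.

    samples: list of at least two equal-length feature vectors.
    Returns (mean, component); the component has unit length.
    """
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    centred = [[s[j] - mean[j] for j in range(d)] for s in samples]

    # Sample covariance matrix (d x d); only practical for small d.
    cov = [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)]
           for i in range(d)]

    # Random positive start vector, normalized to unit length.
    rng = random.Random(seed)
    vec = [rng.random() + 0.1 for _ in range(d)]
    norm = math.sqrt(sum(v * v for v in vec))
    vec = [v / norm for v in vec]

    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(v * v for v in nxt))
        if norm == 0.0:
            break  # no variance left in the direction of the start vector
        vec = [v / norm for v in nxt]
    return mean, vec


def project(sample, mean, component):
    """Coordinate of `sample` along the principal component."""
    return sum((s - m) * c for s, m, c in zip(sample, mean, component))


# Toy 2-D data lying on the line y = 2x.
data = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
mean, component = first_principal_component(data)
print(component)
print(project([4.0, 8.0], mean, component))
```

Each 2-D sample is reduced to a single number along the direction of greatest variance, which is the same idea that lets PCA compress face images with thousands of pixels into a short feature vector.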

Appearance-based methods represent the appearance (skin texture) changes of the face, such as wrinkles and furrows. The appearance features can be extracted from either the whole face or specific regions of a face image using image filters, such as Gabor wavelets. The Gabor filter, originally introduced by Dennis Gabor in 1946 [12], is widely used in image analysis and pattern recognition.

Fig. 10. Gabor filter bank feature images calculated for the face image from JAFFE database
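A Gabor filter bank like the one behind such feature images can be sketched by generating the real part of a Gabor kernel (a Gaussian envelope multiplied by a cosine carrier) at several orientations. The parameter values below are arbitrary choices for illustration, not the settings used for Fig. 10, and convolving the kernels with an image is left out.

```python
import math


def gabor_kernel(size, sigma, theta, wavelength, gamma=0.5, psi=0.0):
    """Real part of a Gabor filter as a size x size list of floats.

    size:       odd kernel width and height in pixels
    sigma:      standard deviation of the Gaussian envelope
    theta:      orientation of the filter in radians
    wavelength: wavelength of the cosine carrier in pixels
    gamma:      spatial aspect ratio of the envelope
    psi:        phase offset of the carrier in radians
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre pixel")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates into the filter's orientation.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# A small bank: four orientations at a single scale.
bank = [gabor_kernel(9, sigma=2.0, theta=k * math.pi / 4, wavelength=4.0)
        for k in range(4)]
print(len(bank), len(bank[0]), len(bank[0][0]))  # 4 9 9
```

Each kernel responds most strongly to texture at its own orientation and wavelength, which is why a bank of them can pick up wrinkles and furrows running in different directions.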

4. Classification

Two basic problems must be solved in this phase: defining a set of categories (classes) and selecting a classification mechanism. First, expressions can be classified in terms of the "typical" emotions defined by Paul Ekman [2]. Second, given the extracted facial features, the expressions are identified by recognition engines. Many classification methods have been employed in FER systems, such as support vector machines (SVM) [13], random forests [14], AdaBoost [15, 16], decision trees [17], naïve Bayes [18], multilayer neural networks, k-nearest neighbours, hidden Markov models (HMM) and deep neural networks.
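Of the classifiers listed, k-nearest neighbours is the simplest to write down, so it serves here as a minimal sketch of the classification step. The two-dimensional feature vectors and their labels are hypothetical values invented for this example; real systems classify much longer feature vectors produced by the extraction stage.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest training vectors."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors with expression labels.
features = [[0.9, 0.5], [0.8, 0.6], [0.1, 0.2], [0.2, 0.1], [0.5, 0.9]]
labels = ["happy", "happy", "sad", "sad", "surprise"]

print(knn_predict(features, labels, [0.85, 0.55], k=3))  # happy
```

The query lies next to the two "happy" samples, so they outvote the single more distant neighbour among the three nearest.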

IV. Facial expression recognition datasets

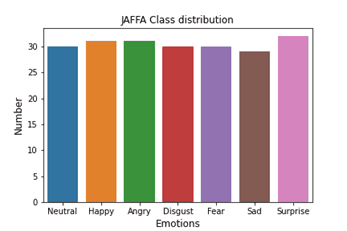

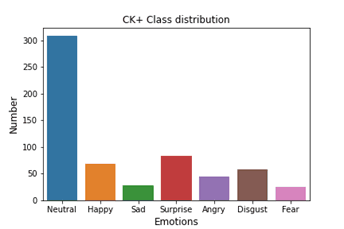

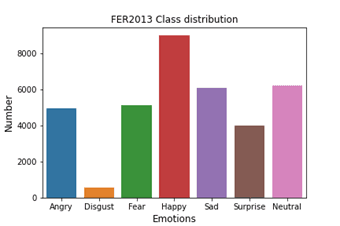

In this section, we discuss the publicly available datasets that are widely used in our reviewed papers. The table below provides an overview of these datasets, including the number of image or video samples, number of subjects, collection environment, and expression distribution. FER-related data was initially collected mostly from images captured in the laboratory, as in JAFFE [3] and CK+ [15], where volunteers make the corresponding expressions under particular instructions. Since 2013, however, emotion recognition competitions have collected large-scale, unconstrained datasets, for example FER2013 [16], whose images were queried automatically through the Google image search API. This implicitly promotes the transition of FER from lab-controlled to real-world scenarios.

Table

An overview of the facial expression recognition datasets

| Dataset | Samples | Resolution | Subjects | Collection Env. | Expression distribution |
|---|---|---|---|---|---|
| JAFFE | 213 images (frontal and 30-degree images) | 256x256 pixel grayscale | 10 | Lab | |
| CK+ (2000) | 593 image sequences | 640x490 for grayscale, 640x480 for 24-bit colour | 123 | Lab | |
| FER 2013 (2013) | 35,887 images | 48x48 pixel grayscale | N/A | Web | |

V. Challenges of facial expression recognition

Facial expression recognition requires techniques that cope with varying expression intensity (fig. 11), pose variations, occlusion, aging and resolution, in both still images and video sequences. For example, variation in illumination levels may affect the accuracy of facial feature extraction. Researchers have attempted to improve robustness to changing lighting conditions at various stages of FER systems. Above all, most existing approaches focus on lab-controlled conditions in which faces are often in frontal view; in real-world environments the frontal view is not always available, which makes detecting facial expressions challenging.

Fig. 11. Intensity of Facial Expression examples of happy (A), sad (B), and fearful (C)
