Introduction

In the contemporary business landscape, the ability to efficiently generate text based on specific data sets is becoming increasingly crucial [5]. This need spans various sectors, each with unique requirements and applications. Here, we explore three distinct examples where text generation is particularly valuable:

Financial Data Analysis: In the financial sector, there is a significant demand for real-time, data-driven text generation to interpret and communicate market trends [14]. For instance, analyzing a stock ticker's performance requires transforming complex financial data into comprehensible text reports. These reports might include insights on stock price fluctuations, market trends, and investment recommendations based on the analysis of vast amounts of historical and current financial data. Automated text generation in this context not only saves time but also provides a scalable way to deliver personalized financial advice or updates to a broad range of clients.

Legal Documentation: The legal field often involves the generation of documents based on specific legal data. This might include creating contracts, legal briefs, or case summaries where the input is a set of legal precedents, clauses, and case-specific information. Text generation tools can help in drafting these documents by automating the integration of relevant legal clauses and case law into a coherent document, tailored to the specifics of each case [6].

Marketing Content Creation: In the realm of marketing, businesses often need to produce a high volume of creative and engaging content. This includes texts for banners, brochures, and digital marketing campaigns. Here, text generation can aid in creating diverse and attention-grabbing content that resonates with different target audiences. By inputting data about customer preferences, market trends, and product specifics, businesses can leverage automated systems to generate unique and compelling marketing copy, significantly reducing the time and effort involved in the creative process [7].

In each of these examples, the ability to convert specific data into clear, accurate, and contextually relevant text is of paramount importance. This is where technologies like Recursive Text Templates (RTT) become valuable, offering efficient, scalable, and cost-effective solutions for automated text generation across various business sectors.

Limitations of Large GPT Models in Certain Business Contexts for Text Generation

While Generative Pre-trained Transformer (GPT) models have revolutionized text generation, their deployment in business contexts is not without challenges. These limitations become particularly evident when considering the requirements of specific business applications:

Cost and Resource Intensity: Large GPT models require substantial computational resources for both training and inference. This translates to high operational costs, making them financially impractical for small to medium-sized businesses or applications with limited budgets. The need for robust hardware or cloud-based services to run these models further escalates costs and limits accessibility.

Quality Control and Hallucinations: GPT models, despite their advanced capabilities, can sometimes generate content that is factually incorrect or irrelevant, a phenomenon known as 'hallucination' [2, 11]. In contexts like financial reporting or legal documentation, where accuracy is paramount, these errors can have significant repercussions. Ensuring the quality and reliability of the output often requires additional layers of human review and intervention, which can be time-consuming and counterproductive to the goal of automation.

Overhead in Customization and Training: Tailoring GPT models to specific business needs often involves additional training on specialized data sets. This process is not only resource-intensive but also requires expertise in machine learning and natural language processing. Small businesses or those without technical proficiency in AI may find this requirement a significant barrier.

Privacy and Data Sensitivity: Businesses dealing with sensitive data, such as personal financial information or confidential legal documents, might be reluctant to use cloud-based GPT services due to privacy concerns [4]. The risk of data breaches or non-compliance with data protection regulations can be a deterrent.

Generic Output: While GPT models are adept at generating human-like text, they can sometimes produce outputs that lack the specificity and customization required for certain business applications [8]. For instance, marketing content might require a unique brand voice or specific call-to-action that generic GPT outputs fail to capture accurately.

Scalability Issues: In situations where text generation needs to be rapidly scaled up, the resource demands of GPT models can be a bottleneck. Smaller organizations might struggle to scale their operations effectively using these models without significant investment in infrastructure.

In summary, while GPT models offer powerful text generation capabilities, their practical application in business contexts is often limited by factors like cost, resource demands, accuracy concerns, and the need for specialized customization. These constraints necessitate the exploration of alternative methods like RTT, which can provide a more accessible, cost-effective, and tailored solution for business-specific text generation needs.

Introduction to RTT as an Alternative Method

Recognizing the limitations of GPT models in certain business contexts, it becomes crucial to explore alternative technologies. Recursive Text Templates (RTT) emerge as a complementary approach, designed to address specific needs where GPT models may not be the most efficient solution. It is essential to note that RTT is not a replacement for GPT models; rather, it serves as a different tool for different contexts.

RTT operates on the principle of using predefined templates and data models to generate text [5]. This method is particularly effective in situations where the text output needs to be highly structured and based on specific, predictable data inputs. The primary advantages of RTT include:

Cost-Effectiveness and Lower Resource Requirements: Unlike GPT models, RTT does not require extensive computational resources. This makes it a more viable option for businesses with limited budgets or those that cannot invest in high-end hardware or cloud computing services.

Predictability and Control: RTT provides a higher degree of control over the output since it relies on user-defined templates. This predictability is crucial in scenarios where the accuracy and consistency of information are paramount, such as financial reporting or legal documentation.

Ease of Customization: Since RTT works with templates provided by users, it is easier to tailor to specific business needs. This customization does not require extensive machine learning expertise, making RTT accessible to a broader range of users and businesses.

Data Privacy: RTT can be implemented locally without the need for cloud-based processing. This aspect is particularly appealing for handling sensitive data, as it alleviates concerns around data privacy and security [1].

Efficiency in Specific Scenarios: In cases where the text generation task is highly structured and does not require the creative or conversational capabilities of GPT models, RTT can be more efficient. It provides a straightforward solution for generating text based on a set of defined parameters.

However, it is important to acknowledge the limitations of RTT. Unlike GPT, RTT does not have the capability for natural language understanding or generation beyond the scope of its templates. It lacks the versatility of GPT models in handling open-ended tasks or generating creative content. As such, RTT is best suited for applications where the requirements are well-defined and can be encapsulated within a structured template format.

In conclusion, RTT presents itself as a practical alternative in scenarios where the complexity and cost of GPT models are not justified. It offers a tailored approach for specific text generation tasks, complementing rather than competing with the capabilities of GPT technologies. This paper explores the potential of RTT, providing insights into its application, advantages, and the contexts where it can be most effectively employed.

RTT: Concept and Design

Recursive Text Templates (RTT) stand as a method focused on generating text by iterating over a set of predefined templates and data models. This approach differs fundamentally from machine learning-based text generation models like GPT, as it relies on explicit rules and structured data rather than learned patterns from large datasets.

Template Definition and Structure: At the heart of RTT are the templates, which are predefined text structures containing placeholders for data insertion [5]. These placeholders are designed to be replaced by actual data values or outputs from other templates. A typical RTT template might look like "Hello, {{ name }}! Your account balance is {{ balance }}", where {{ name }} and {{ balance }} are placeholders.

Data Binding: The placeholders in the templates are bound to specific data points or other templates [5]. This data can be static, like a user's name, or dynamic, such as a real-time financial metric. The binding is key to ensuring that the correct data is inserted into the template at the time of text generation.

Recursive Processing: The unique feature of RTT is its recursive nature [3]. Some placeholders in templates might be linked to other templates rather than direct data points. When generating text, RTT will resolve these nested templates first before finalizing the top-level template. This recursive process continues until all placeholders are replaced with actual data, resulting in a complete and coherent text output.

Contextual Adaptation: RTT can adapt to different contexts by changing the data model or the templates themselves. This flexibility allows for the generation of varied text outputs from the same set of templates, simply by altering the underlying data or by using different combinations of nested templates.

Loop Detection and Resolution: One challenge in recursive systems like RTT is the possibility of infinite loops, where a template repeatedly calls itself or another in a never-ending cycle. RTT systems typically incorporate mechanisms to detect and resolve such loops, ensuring the process concludes with a finite and meaningful output.

Efficiency in Processing: Since RTT operates on predefined templates and straightforward data binding, its processing overhead is significantly lower than that of complex AI models. This efficiency is particularly advantageous in scenarios with limited computational resources.

RTT's approach is most effective for applications where the text output needs to follow a specific format or structure, and the variability lies primarily in the data being inserted into this structure. Examples include generating personalized reports, notifications, or content where the structure remains constant, but the data changes. This method offers a high degree of predictability and control, making it suitable for scenarios where accuracy and consistency are critical, and the scope of text variation is well-defined and bounded [5].
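The ideas of template definition, data binding, recursive processing, and loop detection described above can be sketched in a few lines of JavaScript. This is a minimal illustration under stated assumptions, not the implementation discussed later in this paper: the names `resolve`, `templates`, and `data` are hypothetical, nested templates are resolved depth-first, and a cycle is reported by throwing an error rather than being repaired.

```javascript
// Minimal sketch of recursive template resolution with cycle detection.
// All names (resolve, templates, data) are hypothetical and for illustration only.

function resolve(template, templates, data, active = new Set()) {
  return template.replace(/\{\{\s*([$\w]+)\s*\}\}/g, (match, key) => {
    if (key in templates) {
      // Placeholder bound to another template: resolve the nested template first.
      if (active.has(key)) {
        throw new Error(`Template cycle detected at "${key}"`);
      }
      active.add(key);
      const text = resolve(templates[key], templates, data, active);
      active.delete(key);
      return text;
    }
    if (key in data) {
      // Placeholder bound to data: functions are called to obtain dynamic values.
      const value = data[key];
      return String(typeof value === "function" ? value() : value);
    }
    return match; // Unknown placeholder: leave it visible in the output.
  });
}

const templates = {
  greeting: "Hello, {{ name }}!",
  statement: "{{ greeting }} Your account balance is {{ balance }}.",
  loop_a: "{{ loop_b }}", // loop_a and loop_b reference each other on purpose
  loop_b: "{{ loop_a }}",
};

const data = {
  name: "Denis",     // static value
  balance: () => 42, // dynamic value, fixed here so the result is reproducible
};

console.log(resolve("{{ statement }}", templates, data));
// prints: Hello, Denis! Your account balance is 42.
```

Calling `resolve("{{ loop_a }}", templates, data)` in this sketch throws instead of recursing forever, which is one simple way to guarantee that generation terminates.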

Comparison with Other Templating Engines

In considering the unique features of Recursive Text Templates (RTT), it is instructive to compare it with other well-known templating engines such as Handlebars, Mustache, Razor, EJS, Pug, Liquid, and Twig [12, 15, 16]. These engines, widely used in web development and content generation, also utilize a template-based approach for text output.

Like RTT, these templating engines employ a system of placeholders and partials – reusable template snippets that can be embedded within other templates. This design promotes modularity and reusability, key aspects also seen in RTT. However, there are noteworthy differences in their implementation and capabilities.

One of the primary distinctions lies in the complexity and scope of the templates. While engines like Handlebars or Twig offer a range of built-in helpers and logical constructs to manage template logic, RTT focuses more on the recursive processing of templates. This means that RTT is particularly adept at handling nested templates where outputs of one template feed into another, creating a layered text generation process.

Furthermore, the context in which these templating engines are employed also differs. Engines like Razor and EJS are often integrated into web frameworks and are used extensively in web application development. In contrast, RTT’s utility shines in scenarios where text generation is driven by structured data and requires a high degree of predictability and control, regardless of the web development context.

It is also important to note that while RTT and other templating engines share common ground in their basic principle of using templates for text generation, RTT’s approach to recursion and its integration with data models offer a distinct methodology, especially in business scenarios where the text output needs to conform to specific formats or standards.

By understanding the similarities and differences between RTT and these other templating technologies, we can appreciate the unique niche that RTT occupies. Its specific approach to handling recursive templates and structured data makes it a valuable tool in contexts where other templating engines might not be as efficient or applicable.

Implementation

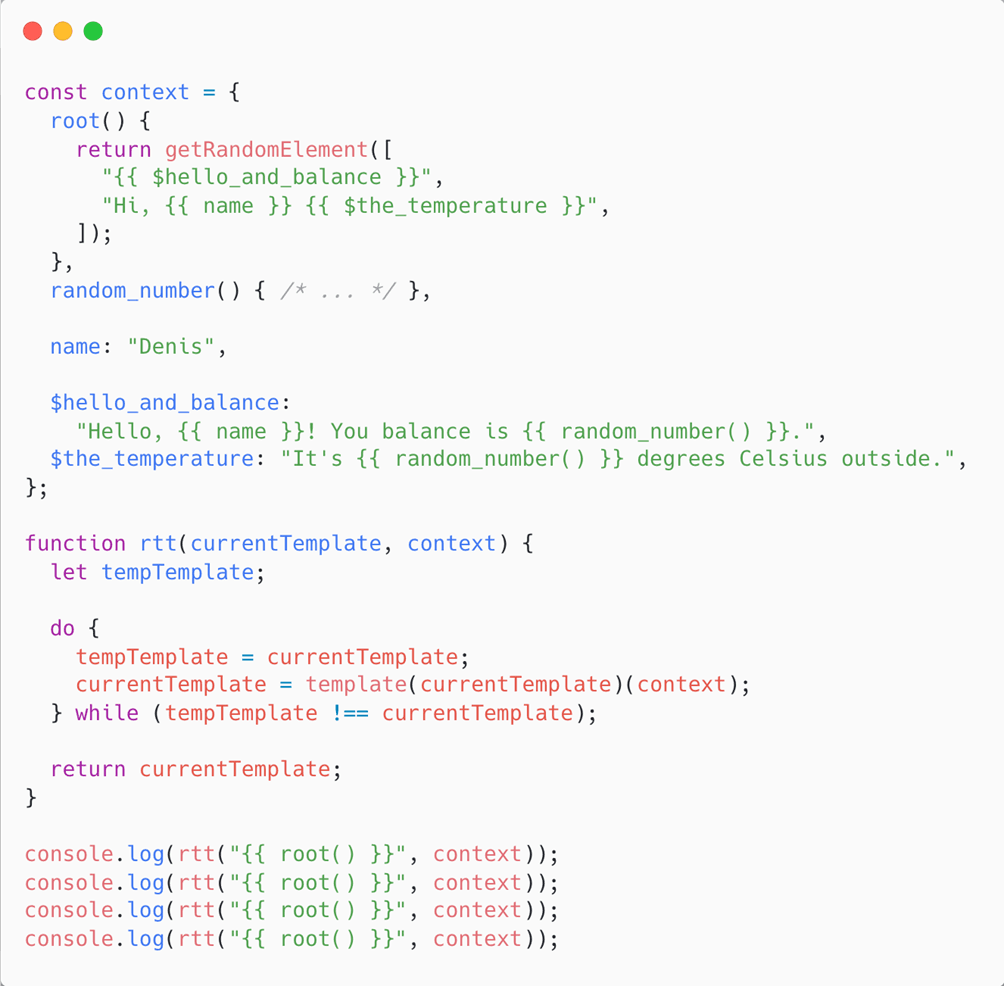

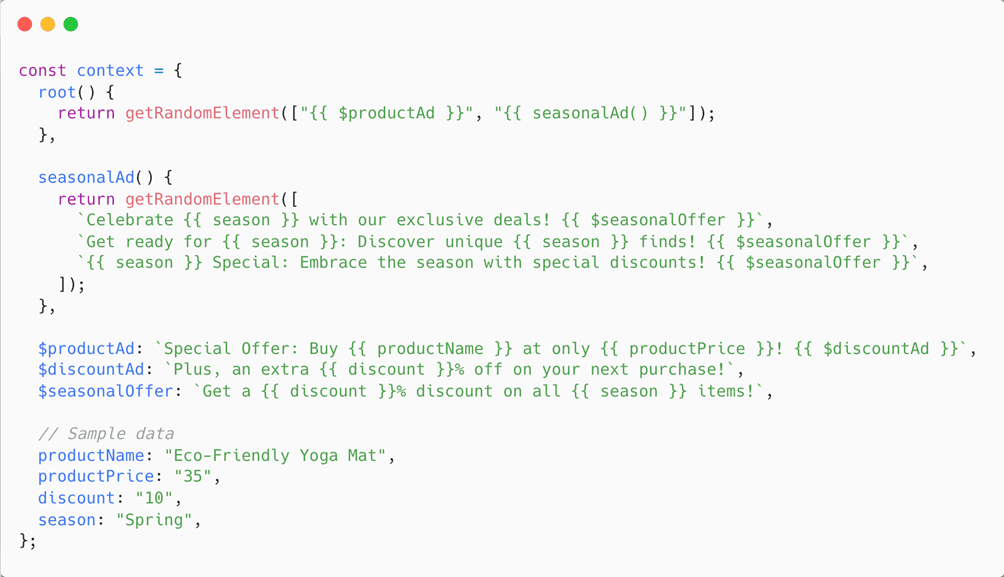

To illustrate the practical implementation of Recursive Text Templates (RTT), we can examine a specific coding example. This implementation uses JavaScript, leveraging the lodash library's templating functionality to process the templates [17]. The code exemplifies how RTT operates, showcasing its recursive nature and the process of dynamically generating text from templates and a context object. The code is shown in figure 1.

Fig. 1. RTT function example

Code Overview

The provided JavaScript code serves as a concrete example of how RTT can be implemented in a programming environment. It consists of several key components:

Random Element Function: The getRandomElement function is a utility that randomly selects an element from an array [10]. This function is used to introduce variability in the templates, showcasing how RTT can handle dynamic content generation.

Context Object: The context object is crucial as it defines the data and functions used in the templates. It includes simple data points like name, functions like random_number() [13] that generate dynamic data, and nested templates like $hello_and_balance and $the_temperature. The root() function within this context is particularly important as it serves as the entry point for the RTT process, selecting between different template options.

RTT Function: The rtt function embodies the core of the RTT methodology. It takes a root template and a context object as inputs. The function uses the lodash template function to iteratively process the current template. It resolves the placeholders by referencing the context object and continues this recursive process until the output stabilizes, meaning no further changes occur in the template.

Execution and Output: Finally, the code executes the RTT process by calling console.log(rtt("{{ root() }}", context)). This line initiates the RTT with the root template and the defined context. The output is a dynamically generated text based on the template chosen by the root() function and the data or nested templates within the context.

This implementation is a clear example of how RTT can be applied in a programming context to generate text. It demonstrates the flexibility of RTT in handling dynamic data, the ease of setting up templates, and the process of resolving nested templates in a recursive manner. Such an implementation can be adapted to various business needs, providing a structured yet dynamic way of generating text.
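For readers who cannot consult the figure, the structure described above can be approximated as follows. This is a sketch under stated assumptions rather than the code from figure 1: the paper's version relies on lodash's template function, whereas `interpolate` below is a simplified, hypothetical stand-in that only understands `{{ key }}` and `{{ key() }}`, so that the example runs without dependencies. The body of `random_number()`, the exact template wording, and the `maxPasses` guard are likewise assumptions.

```javascript
// Sketch of the components described above: getRandomElement, context, rtt.
// `interpolate` is a hypothetical stand-in for lodash's template function.

function getRandomElement(array) {
  return array[Math.floor(Math.random() * array.length)];
}

const context = {
  name: "Denis",
  // Assumed implementation: the original random_number() is not shown in the text.
  random_number: () => getRandomElement([10, 15, 42, 1337]),
  // Nested templates, referenced from other templates by name.
  $hello_and_balance: "Hello, {{ name }}! Your balance is {{ random_number() }}.",
  $the_temperature: "It's {{ random_number() }} degrees Celsius outside.",
  // Entry point: picks one of two top-level templates.
  root: () =>
    getRandomElement([
      "{{ $hello_and_balance }}",
      "Hi, {{ name }} {{ $the_temperature }}",
    ]),
};

// Performs one substitution pass over the template.
function interpolate(template, ctx) {
  return template.replace(/\{\{\s*([$\w]+)\s*(?:\(\))?\s*\}\}/g, (match, key) => {
    if (!(key in ctx)) return match; // unknown placeholder: keep as is
    const value = ctx[key];
    return String(typeof value === "function" ? value() : value);
  });
}

// Repeats substitution passes until the output no longer changes.
// maxPasses is an added safety guard (an assumption) against cyclic templates.
function rtt(rootTemplate, ctx, maxPasses = 50) {
  let current = rootTemplate;
  for (let pass = 0; pass < maxPasses; pass++) {
    const next = interpolate(current, ctx);
    if (next === current) return current; // output has stabilized
    current = next;
  }
  throw new Error("Output did not stabilize; templates may form a cycle");
}

console.log(rtt("{{ root() }}", context));
```

Each pass replaces one layer of placeholders, so a template nested two levels deep stabilizes after a handful of passes; the loop stops as soon as a pass leaves the text unchanged.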

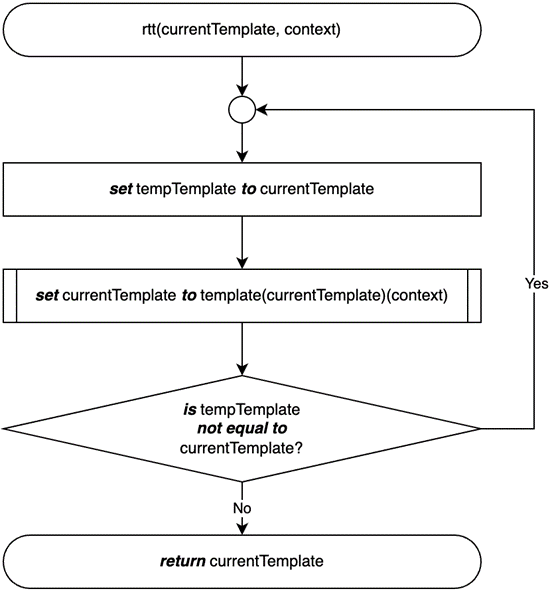

Flowchart

To clarify how the rtt function operates, a flowchart is provided (fig. 2). It summarizes the function's logic and the sequence of processing steps that RTT performs during text generation.

Fig. 2. Flowchart of rtt function

Illustration

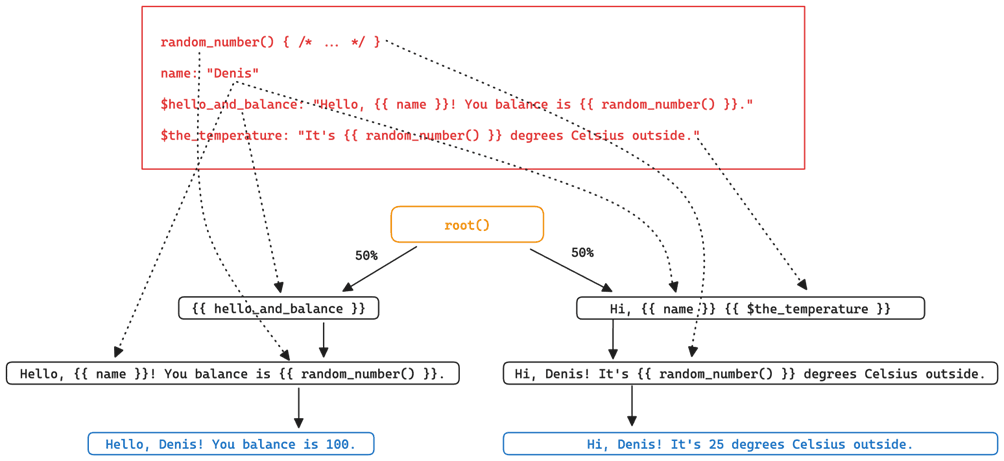

We can illustrate the decision-making process inherent in the code through the following diagram, which effectively represents a decision tree (fig. 3).

Fig. 3. Decision tree

Central to this diagram is the root element, serving as the initial node from which the process diverges based on conditional probabilities. The tree branches into two distinct paths, each with an associated probability of 50%, signifying an equal likelihood of either outcome.

On the left branch, the tree depicts the scenario where root returns the template {{ $hello_and_balance }}. This pathway is indicative of one potential output generated by the RTT process, where specific values from the context are interpolated into the designated template. The right branch, alternatively, represents the execution pathway for the Hi, {{ name }} {{ $the_temperature }} template. This branch showcases an alternate flow of execution, demonstrating the flexibility and dynamic nature of RTT in template selection and text generation.

Above this bifurcating structure, the diagram includes a depiction of the 'context' block. Arrows emanate from this block, pointing towards the respective placeholders within each branch of the tree. These arrows symbolize the process of value insertion, where data from the context is seamlessly integrated into the templates. This visual representation not only clarifies the operational mechanics of RTT but also underscores the method's capability for contextual adaptability and recursive processing.

The decision tree, thus, serves as a comprehensive visual aid, elucidating the underlying logic and flow of the RTT system as implemented in the provided code example. It effectively communicates the dual aspects of structured decision-making and dynamic content generation that are central to the RTT methodology.

Output

In the context of demonstrating the functionality and output variability of the Recursive Text Templates (RTT) system, a series of four executions of the provided code example yields diverse results, as follows:

"Hi, Denis It's 15 degrees Celsius outside."

"Hello, Denis! Your balance is 42."

"Hello, Denis! Your balance is 1337."

"Hi, Denis It's 10 degrees Celsius outside."

These results highlight several key aspects of the RTT system:

Dynamic Content Generation: Each execution of the code results in a different output, underscoring the RTT system's ability to generate dynamic content. This variability is a direct consequence of the getRandomElement function within the root() method, which randomly selects one of the two template options.

Contextual Data Integration: The consistent presence of the name "Denis" in all outputs illustrates the RTT system's capability to integrate contextual data into the templates. This data, predefined in the context object, is seamlessly inserted into the appropriate placeholders in the templates.

Randomized Data Output: The variation in numbers - specifically in the temperature and account balance - across different executions showcases the RTT's use of the random_number() [13] function. This function generates a random number each time it's called, demonstrating how RTT can handle dynamic data that changes with each execution.

Template Flexibility: The alternation between the two templates - one for the temperature and the other for the account balance - reflects the flexibility of the RTT system in template selection. This adaptability is crucial for applications requiring a range of different text outputs based on the same set of templates.

These executions effectively demonstrate the practical utility of RTT in generating varied, context-specific text outputs. The system's ability to combine fixed and dynamic data, coupled with its template versatility, makes it a powerful tool for tailored text generation in various business and technical scenarios.

Application of RTT in Business Contexts

The Recursive Text Templates (RTT) system finds its practicality in various business scenarios, offering efficient solutions for specific text generation needs:

Customer Service Communications: RTT can automate the generation of personalized customer service messages. For instance, updating clients on the status of their orders or addressing common queries can be templated, with specific details like customer names, order numbers, and dates being dynamically inserted [9].

Financial Reporting: In finance, RTT can be used to generate regular reports, such as account statements or stock market updates. By inserting real-time financial data into predefined templates, businesses can produce accurate, up-to-date reports efficiently.

Legal Document Drafting: The legal industry can utilize RTT for the drafting of standard legal documents, such as contracts or notices. Key information like party names, dates, and specific clauses can be automatically populated into a structured document template [6].

Marketing Content Creation: Marketing teams can leverage RTT to produce varied content across different platforms. For instance, creating custom-tailored product descriptions for e-commerce sites or generating promotional emails with personalized greetings and offers.

Operational Reports: In operational contexts, RTT can facilitate the generation of regular reports, such as inventory levels, sales summaries, or performance metrics. This application ensures consistency in report formatting while allowing for the dynamic insertion of the latest data [9].

In each of these scenarios, RTT enhances efficiency, ensures consistency, and provides scalability in text generation tasks. Its ability to integrate specific, context-driven data into a structured text format makes it a valuable tool across diverse business sectors.
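As an illustration of the customer service scenario, the following sketch generates an order-status message from a nested template. The field names (`customer`, `id`, `status`, `date`), the message wording, and the helper names `render` and `orderStatusMessage` are hypothetical and chosen only for this example.

```javascript
// Hypothetical order-status example; field names and wording are illustrative.

// Repeats simple {{ key }} substitution until the text stops changing.
function render(template, ctx, maxPasses = 20) {
  let current = template;
  for (let pass = 0; pass < maxPasses; pass++) {
    const next = current.replace(/\{\{\s*([$\w]+)\s*\}\}/g, (match, key) =>
      key in ctx ? String(ctx[key]) : match
    );
    if (next === current) return current;
    current = next;
  }
  throw new Error("Templates did not stabilize");
}

function orderStatusMessage(order) {
  // One nested template per order status.
  const statusTemplates = {
    shipped: "was shipped on {{ date }} and is on its way",
    delayed: "is delayed; the new estimated dispatch date is {{ date }}",
  };
  if (!(order.status in statusTemplates)) {
    throw new Error(`No template for status "${order.status}"`);
  }
  const ctx = {
    customer: order.customer,
    order_id: order.id,
    date: order.date,
    $status: statusTemplates[order.status],
    $message: "Dear {{ customer }}, your order {{ order_id }} {{ $status }}.",
  };
  return render("{{ $message }}", ctx);
}

console.log(
  orderStatusMessage({ customer: "Denis", id: "A-1024", status: "shipped", date: "2024-03-01" })
);
// prints: Dear Denis, your order A-1024 was shipped on 2024-03-01 and is on its way.
```

The sentence structure stays fixed while the status fragment and the data vary, which is the kind of bounded variation the scenarios above rely on.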

Discussion on How GPT Models Can Enhance RTT

In the realm of text generation technologies, the synergistic potential between GPT models and Recursive Text Templates (RTT) is significant. GPT models, with their advanced capabilities in natural language understanding and generation, can greatly enhance the effectiveness of RTT.

One of the primary ways GPT models can augment RTT is through the generation of template content. While RTT excels in populating predefined templates with data, GPT models can be utilized to create or expand these templates themselves. By generating a diverse range of template structures and phrases, GPT models can introduce a level of creativity and variability that might be challenging to achieve manually. This can be particularly beneficial in applications like marketing content creation or customer service, where engaging and varied text is crucial.

Furthermore, GPT models can assist in refining and optimizing RTT templates. By analyzing large datasets and extracting patterns and styles, GPT can suggest improvements or variations in the templates, making them more effective and context-appropriate. For example, in legal document drafting, GPT models can help formulate different versions of a template that align with various legal contexts or jurisdictions.

Lastly, GPT models can play a role in error detection and quality control within RTT systems. By reviewing and analyzing the outputs generated by RTT, GPT can identify potential errors or inconsistencies, offering corrections or suggestions for improvement. This collaboration could significantly enhance the accuracy and quality of the text generated by RTT systems.

In conclusion, while RTT and GPT models are distinct technologies with different core capabilities, the integration of GPT models into the RTT framework presents a pathway for enhancing the functionality, creativity, and effectiveness of RTT in various text generation applications. This synergy could lead to more sophisticated, adaptable, and high-quality text generation solutions, suitable for a broad range of business and technical needs.

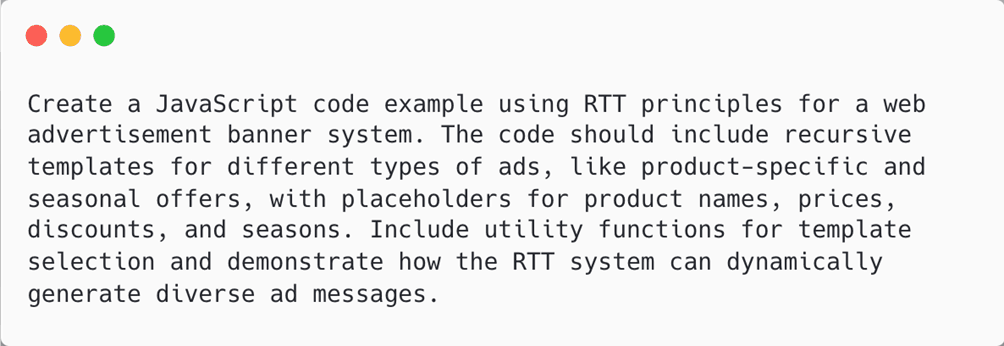

To illustrate the collaborative potential of GPT models and RTT, we present an example where ChatGPT is employed to generate templates and a context object for a web advertisement banner system. This example underscores how GPT models can creatively and effectively contribute to the RTT framework, particularly in designing recursive templates that cater to dynamic advertising needs.

For this demonstration, the following prompt was provided to ChatGPT-4 (fig. 4).

Fig. 4. ChatGPT prompt to generate RTT function

The code generated by ChatGPT (fig. 5) serves as a practical application of RTT, embodying the concept through a well-structured JavaScript implementation. It showcases a series of user-defined templates within a context object, each designed to dynamically generate text for different advertising scenarios. The getRandomElement function injects a degree of randomness and variability into the text generation process. This feature is particularly important in realistic scenarios where diverse and engaging responses are needed to capture the attention of different audience segments.

Fig. 5. RTT code generated by ChatGPT

The context object contains various templates, including productAd() and seasonalAd(), along with supporting templates like discountAd() and seasonalOffer(). These templates are crafted to be recursive, where certain templates can call upon others, allowing for complex and layered text outputs. For instance, seasonalAd() randomly selects from multiple seasonal advertising messages, each potentially calling the seasonalOffer() template for additional details.

Because the templates are selected at random, the output varies between runs. Sample outputs include:

“Special Offer: Buy Eco-Friendly Yoga Mat at only 35! Plus, an extra 10% off on your next purchase!”

“Celebrate Spring with our exclusive deals! Get a 10% discount on all Spring items!”

“Spring Special: Embrace the season with special discounts! Get a 10% discount on all Spring items!”

Furthermore, the implementation reflects the potential integration of AI models, such as ChatGPT, in the development of RTT systems. AI models can assist in creating and refining these templates and context objects, making the process more efficient and tailored to specific applications. In this case, the templates are designed to be adaptable and relevant to web advertising, a domain where personalized and dynamic content is crucial.
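A context object of the kind described in this section could look roughly like the sketch below. It is a hypothetical reconstruction written to reproduce the sample outputs listed above, not the ChatGPT-generated code itself: only the template names productAd(), seasonalAd(), discountAd(), and seasonalOffer() come from the text, while the data fields, the `root()` entry point, and the dependency-free `interpolate` helper are assumptions.

```javascript
// Hypothetical reconstruction of an advertising context; not the generated code.

function getRandomElement(array) {
  return array[Math.floor(Math.random() * array.length)];
}

const adContext = {
  // Assumed data fields, chosen to match the sample outputs.
  product: "Eco-Friendly Yoga Mat",
  price: 35,
  season: "Spring",
  discount: 10,
  // Templates named in the text; each may reference other templates.
  productAd: () => "Special Offer: Buy {{ product }} at only {{ price }}! {{ discountAd() }}",
  discountAd: () => "Plus, an extra {{ discount }}% off on your next purchase!",
  seasonalAd: () =>
    getRandomElement([
      "Celebrate {{ season }} with our exclusive deals! {{ seasonalOffer() }}",
      "{{ season }} Special: Embrace the season with special discounts! {{ seasonalOffer() }}",
    ]),
  seasonalOffer: () => "Get a {{ discount }}% discount on all {{ season }} items!",
  // Assumed entry point that picks an ad type at random.
  root: () => getRandomElement(["{{ productAd() }}", "{{ seasonalAd() }}"]),
};

// One substitution pass for {{ key }} and {{ key() }} placeholders.
function interpolate(template, ctx) {
  return template.replace(/\{\{\s*([$\w]+)\s*(?:\(\))?\s*\}\}/g, (match, key) => {
    if (!(key in ctx)) return match;
    const value = ctx[key];
    return String(typeof value === "function" ? value() : value);
  });
}

// Repeats passes until the text is stable; maxPasses guards against cycles.
function rtt(rootTemplate, ctx, maxPasses = 50) {
  let current = rootTemplate;
  for (let pass = 0; pass < maxPasses; pass++) {
    const next = interpolate(current, ctx);
    if (next === current) return current;
    current = next;
  }
  throw new Error("Output did not stabilize; templates may form a cycle");
}

console.log(rtt("{{ root() }}", adContext));
```

Adding a new banner variant in this arrangement means adding one string to a list, without touching the resolution logic.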

Limitations and Scope

When evaluating the utility of Recursive Text Templates (RTT) in comparison to the capabilities of Generative Pre-trained Transformer (GPT) models, particularly in dialogic contexts, it is crucial to understand the inherent limitations of RTT as well as the areas where it excels.

Limitations of RTT

Lack of Natural Language Understanding and Generation: Unlike GPT models, RTT lacks the ability to understand or generate natural language on its own. It operates strictly within the confines of predefined templates and data, which limits its ability to handle unstructured or unpredictable text inputs.

Non-dialogic Nature: RTT is not designed for dialogic interactions. It cannot engage in conversations or respond to queries in a dynamic, context-aware manner like GPT models. This limitation restricts its use in applications requiring interactive communication, such as chatbots or virtual assistants.

Dependence on Predefined Templates: The efficacy of RTT is contingent upon the quality and comprehensiveness of the templates provided. Crafting effective templates requires foresight and an understanding of the specific contexts in which they will be used, which can be a time-consuming process [5].

Limited Creativity and Flexibility: RTT's outputs are bound by the structure and content of its templates. This constraint means that RTT lacks the creative flexibility of GPT models, which can generate diverse and novel text based on a wide range of inputs [5].

Areas Where RTT Can Be More Applicable

Despite these limitations, RTT holds significant value in specific applications.

Structured Content Generation: RTT is ideal for scenarios where text output needs to adhere to a specific format or structure, such as legal documents, financial reports, or standardized customer communication.

Resource-Constrained Environments: In situations where computational resources are limited, RTT provides a viable alternative for text generation without the heavy computational overhead required by large GPT models.

Data Privacy Concerns: RTT can operate effectively without the need for cloud processing or external data, making it suitable for use cases with stringent data privacy requirements.

Predictability and Control: For applications where predictability and control over the text output are paramount, RTT offers a reliable solution. Businesses can ensure consistency and accuracy in the generated text by carefully designing the templates.

Cost-Effectiveness: RTT can be more cost-effective than GPT models, especially for small to medium-sized businesses or applications that do not require the advanced capabilities of AI-driven natural language processing.

In summary, while RTT does not possess the dialogic and generative capabilities of GPT models, it excels in applications that demand structured, predictable, and efficient text generation. Its suitability in specific contexts makes it a valuable tool in the broader landscape of automated text generation technologies.

Comparison with GPT

Following the discussion on the specific scenarios where Recursive Text Templates (RTT) prove more applicable, it is instructive to present a direct comparison between RTT and Generative Pre-trained Transformer (GPT) technologies. This comparison is crucial for understanding the distinct capabilities, use cases, and requirements of each technology. The table below (table 1) provides a comprehensive, fact-based juxtaposition of RTT and GPT, outlining their core functionalities, data handling capabilities, computational demands, and more. Such a comparison is essential for readers to discern the most suitable technology for their specific text generation needs.

Table 1. RTT vs GPT comparison

| Aspect | Recursive Text Templates (RTT) | Generative Pre-trained Transformer (GPT) |
| --- | --- | --- |
| Core Function | Uses predefined templates with placeholders for text generation. | Uses machine learning models to generate human-like text. |
| Data Handling | Operates on structured data input and integrates it into templates. | Capable of processing and generating text from both structured and unstructured data. |
| Text Output | Produces text strictly based on the structure and content of the templates. | Generates more diverse and creative text, not confined to a specific template. |
| Computational Resources | Generally requires fewer computational resources. Suitable for environments with limited processing capabilities. | Demands significant computational power for both training and inference, especially for larger models. |
| Customization | Highly customizable through the creation and modification of templates. | Customization achieved through training on specific datasets or fine-tuning existing models. |
| Implementation Cost | Lower due to minimal computational requirements and no need for extensive data training. | Higher, particularly for training custom models or using cloud-based services. |
| Flexibility | Limited to the variations allowed within the defined templates. | Highly flexible, capable of generating a wide range of text types and styles. |
| User Expertise Required | Requires understanding of template syntax and structure. No machine learning expertise needed. | Requires knowledge in machine learning and natural language processing, particularly for model training and fine-tuning. |
| Interactivity | Does not support interactive dialogue generation. | Capable of engaging in interactive and context-aware dialogues. |
| Use Cases | Best suited for scenarios requiring structured, predictable text output, like form letters, reports, or configured customer responses. | Ideal for applications needing creative, versatile text generation like chatbots, content creation, and interactive storytelling. |
| Scalability | Easily scalable within the confines of the template structures. | Scalability depends on computational resources and model complexity. |
| Data Privacy | Can be operated locally, offering better control over data privacy. | Often reliant on cloud-based solutions, which may raise data privacy concerns. |
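
To make the "Core Function" and "Data Handling" entries for RTT more concrete, the following is a minimal sketch of recursive placeholder expansion over structured input. It is an illustration only, not the RTT implementation described in this article: the `{name}` placeholder syntax, the `render` function, the `max_depth` guard, and the sample templates and data are all assumptions chosen for the example.

```python
import re

# Assumed placeholder syntax for this sketch: {name}
PLACEHOLDER = re.compile(r"\{(\w+)\}")


def render(template, templates, data, depth=0, max_depth=20):
    """Expand placeholders recursively.

    A placeholder name is resolved first against the named sub-templates
    (which are themselves expanded), then against the structured data.
    """
    if depth > max_depth:
        # Guard against templates that reference themselves in a cycle.
        raise RecursionError("template nesting too deep (possible cycle)")

    def substitute(match):
        name = match.group(1)
        if name in templates:
            return render(templates[name], templates, data, depth + 1, max_depth)
        if name in data:
            return str(data[name])
        raise KeyError(f"no template or data value for placeholder '{name}'")

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical templates and structured input.
templates = {
    "report": "{greeting} {summary}",
    "greeting": "Dear {client},",
    "summary": "{ticker} closed at {price} USD.",
}
data = {"client": "Ms. Lee", "ticker": "ACME", "price": 42.5}

result = render(templates["report"], templates, data)
print(result)  # Dear Ms. Lee, ACME closed at 42.5 USD.
```

The sketch runs locally with only the standard library and produces the same output for the same input, which reflects the predictability, low resource use, and data privacy properties attributed to RTT in the table. It also shows the flexibility limit: the output can vary only in the ways the templates allow.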

Future Directions

As we consider the trajectory of Recursive Text Templates (RTT) technology, its future appears ripe with possibilities for enhancement and integration. These developments promise to expand the utility and efficiency of RTT in various domains.

Advanced Template Design and Management: Future iterations of RTT could see the development of more sophisticated template design tools, allowing for easier creation, modification, and management of templates. These tools could include AI-assisted suggestions for template optimization based on usage patterns and output effectiveness.

Integration with Natural Language Processing (NLP): Incorporating NLP capabilities could enable RTT systems to better understand and process user inputs, making them more adaptable in generating relevant text. This integration could bridge the gap between structured template use and the need for understanding nuanced language.

Enhanced Customization and Personalization: As user data becomes more available and analytics more advanced, RTT systems could be tailored to produce highly personalized content. This would be particularly beneficial in marketing and customer relationship management.

Automated Template Generation: Leveraging AI to automatically generate or suggest templates based on specific business needs or data types can streamline the process of setting up RTT systems, making them more accessible to users without technical expertise in template creation.

Conclusion

In summarizing this exploration into Recursive Text Templates (RTT), it's clear that while RTT may not boast the extensive capabilities of AI-driven models like GPT, it holds a distinct and valuable place in the realm of text generation. This technology, grounded in the principles of structured template use and data-driven content generation, offers a pragmatic and efficient solution in contexts where precision, predictability, and cost-effectiveness are key.

The examination of RTT's design, implementation, and practical applications reveals its aptitude for structured content generation across various business sectors. From automating customer service communications to generating precise financial reports, RTT demonstrates a level of reliability and control that is highly sought after in many professional environments. Furthermore, the potential synergies of RTT with AI and machine learning technologies hint at a future where the boundaries of text generation are further expanded, marrying the structure and efficiency of RTT with the dynamism and adaptability of AI.

Looking forward, the impact of RTT is poised to grow, especially as businesses continue to seek out technologies that balance sophistication with practicality. In an era where data is abundant and the need for personalized, context-specific content is ever-increasing, RTT stands as a testament to the importance of tailored solutions. It underscores the notion that in the field of text generation, one size does not fit all; different contexts require different approaches.

In closing, RTT, with its unique approach to text generation, embodies a significant stride in our ongoing quest to harness technology for effective communication. Its future, interwoven with advancements in AI and machine learning, is not just promising but also indicative of the evolving landscape of technological solutions tailored to specific business needs. As we continue to explore and refine these tools, the potential for innovation and efficiency in text generation seems boundless.
