Introduction

Over the past several years, the nature of construction projects has changed significantly: the share of megaprojects is increasing, regulatory agendas are becoming more complex (including decarbonization, sustainable materials, and cybersecurity), and supply chains are becoming global and unstable. The result is high uncertainty in schedules and budgets: a McKinsey analysis of 532 capital-intensive assets showed an average cost overrun of 79% and a schedule delay of 52%. Meanwhile, construction spending in the United States alone has already exceeded $2 trillion and continues to grow at double‑digit rates, intensifying pressure on design teams and creating demand for fundamentally new tools that reduce early‑stage errors [1, 2].

Against this backdrop, artificial intelligence is rapidly evolving from an experimental technology to a commonplace component of AEC specialists’ workflows. According to a global survey by Arup, 42% of architects, engineers, and urban planners in the United States already utilize AI daily [3]. Research by Deltek indicates an increase in corporate AI solution penetration from 38% to 53% in just one year [4]. These figures reflect not so much hype as genuine demand for tools capable of generating optimal layouts in minutes, calculating carbon footprints, or predicting risks – thereby addressing the challenges of increasingly complex projects and skilled‑labor shortages.

Materials and Methodology

This study on the application of generative AI in optimizing design solutions for construction is based on an analysis of 19 sources, including industry reports, global expert surveys, academic articles, and company case studies. The theoretical foundation comprises works on integrating AI modules into BIM environments and AEC practice: the Autodesk report [10] projects the evolution of the Gen‑AI + BIM pairing; Arup [3] and Deltek [4] provide data on AI‑tool adoption in design processes; and Du et al. [9] describe the Text2BIM framework, which translates textual requirements into executable code for the Revit API. Additionally, studies by He [12] and Thampanichwat et al. [13, p. 872] laid the groundwork for a comparative approach to generative algorithms in urban planning and architecture.

Methodologically, the work integrates several complementary stages. First, a comparative analysis of the prominent families of generative models was conducted – diffusion networks (ArchiDiff [5, p. 104275], Daylight‑Diffusion [6]), GAN architectures (topology optimization [7, p. 105265], a surrogate model for insolation validation [11, p. 113876]), deep reinforcement learning methods (the SOgym framework [8]), and LLM agents (Text2BIM [9]). For each algorithm category, key metrics were collected, including the average geometric error when compared to BIM benchmarks, the daylight factor increase, the structural mass decrease, and the compliance magnitude, as well as FID/R² indicators in urban planning modeling.

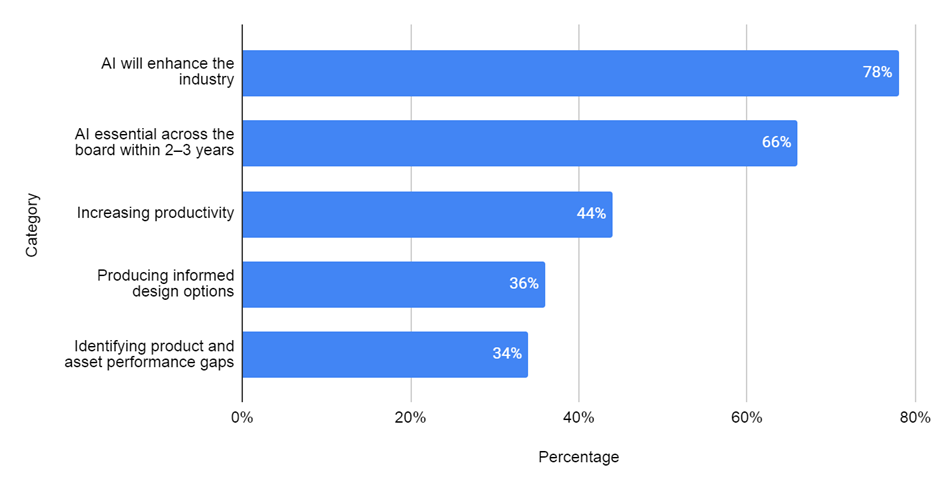

Second, a quantitative review of industry reports and studies on AI implementation in AEC was undertaken. The dynamics of AI adoption in the United States (42% daily users per Arup [3]; growth in penetration from 38% to 53% per Deltek [4]) and the Autodesk finding that 78% of executives expect the Gen‑AI + BIM pairing to become standard [10] were compared against actual experimental results. This comparison revealed a correlation between corporate readiness levels and algorithmic efficiency.

Third, content analysis of case studies and field experiments was used to evaluate the practical effectiveness of platforms such as Deep Q‑Material [15, p. 7207], ALICE [16], and Scan‑to‑BIM [17, p. 104289], as well as examples from SHAIPES.ai [18] and ICON [19]. The comparative review indicates that Deep Q‑Material reduces the average cost‑prediction error to 20.8% [15, p. 7207] and that integrating ALICE with P6 yields a 17% reduction in project durations [16]. Case analyses of construction projects in West Texas and Austin confirmed that computational savings translate into savings of materials and time [18, 19].

Results and Discussion

Generative artificial intelligence encompasses several algorithmic families, including diffusion models, generative adversarial networks (GANs), deep reinforcement learning (RL) methods, and large language models (LLMs). All of them generate new design variants rather than merely evaluating predefined solutions, which fundamentally expands the search space. For instance, the ArchiDiff diffusion network reproduces complex spatial building forms with minimal geometric error relative to control BIM benchmarks [5, p. 104275]. The Daylight-Diffusion model, designed for daylighting analysis, adjusts the façade so that the daylight factor increases without altering the glazing area [6]. GAN‑based approaches to topology optimization reduce the mass of load‑bearing elements while preserving stiffness criteria [7, p. 105265]; in turn, the SOgym RL framework yields designs whose compliance is no more than about 54% higher than that of classical gradient‑based optimization, with the agent learning entirely from simulated experience, which frees engineers from manually tuning hundreds of parameters [8]. Finally, LLM agents are beginning to act as coordinators of information and instructions: the Text2BIM framework converts natural-language requirements into executable Revit API code, producing a 3D model with semantically rich elements, including spaces and MEP components [9]. Together, these tools not only accelerate routine tasks but also shift design from fixed solutions toward a search for the most promising alternatives.

The key point of integration for generative AI in AEC practice remains the information modeling environment. The BIM platform provides a formalized set of geometry, materials, and regulatory constraints, while Gen‑AI acts as an overlay that generates alternatives and immediately verifies them within the same digital data container. Industry expectations point in the same direction: according to the Autodesk State of Design & Make 2024 report, 78% of executives expect the AI‑module‑plus‑BIM paradigm to become standard within the next two to three years, since it enables seamless parameter exchange between the concept, detailing, and construction phases, as illustrated in figure 1 [10].

Fig. 1. Distribution of AI Impact and Use Cases in the AECO Industry [10]

Unlike traditional parametric modeling, in which the designer rigidly defines rules and manually adjusts variable ranges, generative AI relies on probabilistic distributions trained on large samples of completed projects or synthetic simulations. Parametric methods require prior knowledge of the underlying logic (for example, the relationships between loads and cross-sections). In contrast, generative AI extracts such logic from data, permits stochastic search, and can simultaneously account for dozens of conflicting criteria. Thus, generative AI does not replace parametric tools but rather extends them: BIM remains the repository and verification mechanism, parametrics provide a transparent layer of deterministic rules, and Gen‑AI adds a layer of statistical creativity that closes the gap between human intuition and computational power.

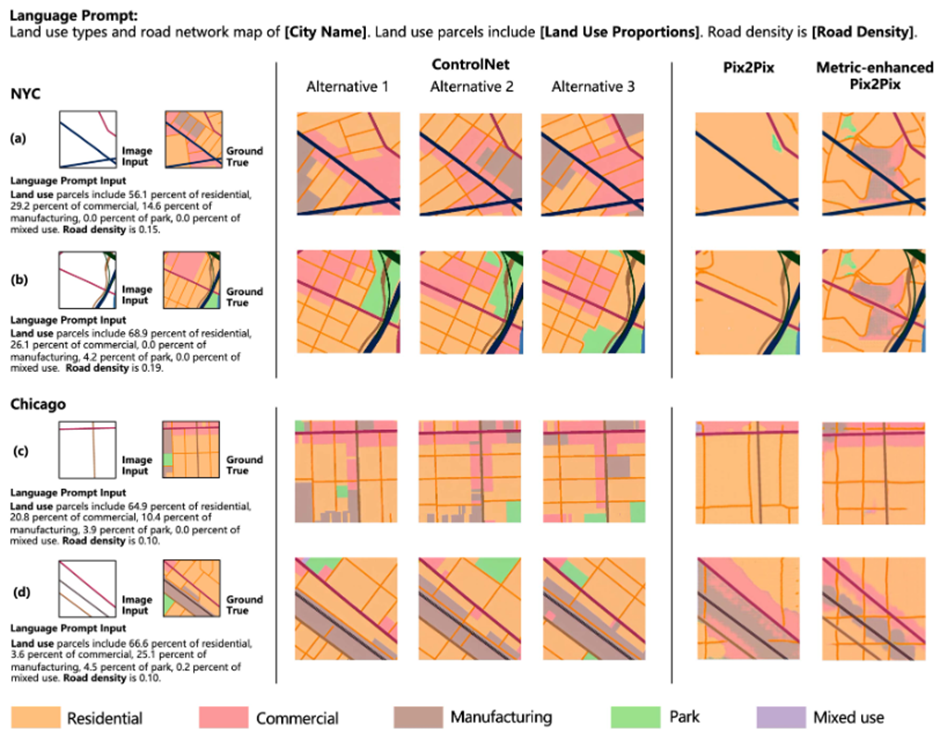

Generative methods have already demonstrated practical benefits in urban planning and architectural analysis: diffusion networks trained on satellite imagery and BIM context automatically iterate through massing options and immediately verify insolation. Validation on a sample of thirty office blocks showed that a GAN-based surrogate model increases the proportion of usefully daylit area at a constant glazing area while reducing calculation time from hours of ray tracing to seconds [11, p. 113876]. At a larger scale (districts and neighborhoods), a stepwise ControlNet framework is employed: it first generates the road network and zoning, then the building volumes, and finally the visualization. Across three metrics (FID, compliance with instructions, and diversity), it outperformed an enhanced Pix2Pix baseline. For example, accuracy in meeting specified road‑density requirements reached R² = 0.92 in New York versus 0.80 for the improved Pix2Pix, confirming the model's ability to uphold urban‑planning constraints rather than merely draw attractive pictures [12]. The results of the road network and land-use planning stage are presented in figure 2.

Fig. 2. Results of Road Network and Land Use Planning Stage [12]
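The R² figure quoted for road density can be read as the coefficient of determination between the densities specified in the instructions and those measured in the generated layouts. The minimal Python sketch below assumes exactly that pairing; the function name and the sample densities are hypothetical, and [12] may compute the metric over a different set of quantities.

```python
def r_squared(specified, achieved):
    """Coefficient of determination of achieved values against specified targets."""
    if len(specified) != len(achieved) or len(specified) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    mean_target = sum(specified) / len(specified)
    # Residual sum of squares: how far generated values miss their targets.
    ss_res = sum((s - a) ** 2 for s, a in zip(specified, achieved))
    # Total sum of squares: spread of the targets around their mean.
    ss_tot = sum((s - mean_target) ** 2 for s in specified)
    if ss_tot == 0:
        raise ValueError("targets have zero variance; R² is undefined")
    return 1.0 - ss_res / ss_tot


# Hypothetical road densities (km/km²): specified in prompts vs measured in layouts.
targets = [1.0, 2.0, 3.0, 4.0]
generated = [1.1, 1.9, 3.2, 3.8]
print(round(r_squared(targets, generated), 3))  # 0.98
```

A value of 1.0 would mean every generated layout hits its specified density exactly; the baseline of 0 corresponds to always predicting the mean target.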

At the conceptual design stage, practitioners increasingly use text‑to‑image systems for rapid first‑iteration façades and interiors. A 2025 case study in Buildings compared Midjourney, Stable Diffusion, and DALL·E, with each model generating ten variants of mindful architecture from a single text prompt. This enabled architects to proceed immediately to semantic analysis of forms and to formalize a style vocabulary. The authors note a sharp expansion of divergent search without a noticeable loss of design control, since designers manually select and refine the preferred options. At the same time, the models deliver a rapid flow of ideas, which is especially valuable under tight competition deadlines [13, p. 972].

In the engineering domain, the focus shifts to reducing structural mass and carbon footprint. The open RL benchmark SOgym demonstrated that a DreamerV3-based agent achieves topologies with compliance only 54% higher than the classical gradient-based optimum, yet does so without gradients or manually defined filters. This opens the way to training on real operational data and to rapid re-optimization when constraints change [8]. The industrial redesign of a metal milling head demonstrates in practice the promise of topology optimization combined with additive manufacturing: approximately 10% mass savings with increased stiffness. This illustrates how Gen-AI algorithms convert computational savings into direct material savings in detailing and serial production. These three applications (generation of volumetric planning schemes that account for climatic factors, accelerated creation of building imagery, and topology optimization of load-bearing elements) form an interoperable workflow in which each AI-generated data layer serves as the starting point for the next level of detailing and verification [14].
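In topology optimization, compliance is conventionally the work done by the external loads, so a lower value means a stiffer structure. Assuming SOgym reports its gap relative to the gradient-based optimum in this standard minimum-compliance form (the symbols below are illustrative and are not taken from [8]), the 54% figure reads as follows:

```latex
\begin{aligned}
c(\boldsymbol{\rho}) &= \mathbf{f}^{\mathsf{T}}\mathbf{u}
  = \mathbf{u}^{\mathsf{T}}\mathbf{K}(\boldsymbol{\rho})\,\mathbf{u},
  \qquad \mathbf{K}(\boldsymbol{\rho})\,\mathbf{u} = \mathbf{f},\\[4pt]
\Delta &= \frac{c_{\mathrm{RL}} - c_{\mathrm{grad}}}{c_{\mathrm{grad}}} \approx 0.54
  \;\Longrightarrow\; c_{\mathrm{RL}} \approx 1.54\,c_{\mathrm{grad}}.
\end{aligned}
```

Here ρ is the vector of element densities, K the global stiffness matrix, f the load vector, and u the resulting displacements; a gap of 0.54 therefore means the RL design is roughly 1.54 times as flexible as the gradient-based reference under the same loads.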

Continuing the optimization loop, once the load-bearing topology has narrowed the range of variants, the next step is to automate the selection of materials and specifications. Generative algorithms compare thousands of combinations of cost data, carbon coefficients, and local regulations, using BIM as a unified database of quantities. In the Deep Q‑Material experiment, combining building attributes with BIM metadata reduced the average cost‑prediction error from 37.3% to 20.8%, a relative improvement of about 44%, because the model sees the actual distribution of volumes and product types rather than averaged parameters [15, p. 7207].
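The 44% figure is a relative reduction of the error, not a drop in percentage points (that drop is 16.5 points). The short Python sketch below reproduces the arithmetic. The MAPE helper is included on the assumption that "average cost-prediction error" denotes a mean absolute percentage error; [15] may define the metric differently.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent (assumed error definition)."""
    pairs = list(zip(actual, predicted))
    if not pairs or any(a == 0 for a, _ in pairs):
        raise ValueError("need non-empty data with non-zero actual values")
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


def relative_reduction(baseline_error, new_error):
    """Fractional reduction of an error metric relative to its baseline."""
    return (baseline_error - new_error) / baseline_error


print(f"{relative_reduction(37.3, 20.8):.1%}")          # 44.2%
print(f"{37.3 - 20.8:.1f} percentage points")           # 16.5 percentage points
print(mape([100, 200], [110, 180]))                      # hypothetical costs: 10.0
```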

Once specifications are fixed, generative scheduling and logistics planning automatically allocate the selected materials across time frames, subject to resource constraints. When integrated with an existing P6 schedule, the ALICE platform generates hundreds of time‑effort scenarios, enabling the client to choose a balance between pace and cost; consolidated figures across a project portfolio show a 17% reduction in duration, 14% labor savings, and 12% equipment savings attributed to algorithmic sequencing of operations [16]. For early stages, when a detailed critical path method (CPM) network is not yet available, the Text‑to‑Schedule ML prototype generates a basic network plan directly from the IFC model.
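Choosing a balance between pace and cost among many generated schedules is typically a Pareto-front problem: a scenario is worth showing to the client only if no other scenario is both faster and cheaper. The Python sketch below illustrates that filter on hypothetical scenarios; it is not ALICE's algorithm, whose internals are not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    duration_days: float
    cost_musd: float  # cost in millions of USD (hypothetical unit)


def dominates(a: Scenario, b: Scenario) -> bool:
    """True if a is no worse than b on both criteria and strictly better on one."""
    no_worse = a.duration_days <= b.duration_days and a.cost_musd <= b.cost_musd
    better = a.duration_days < b.duration_days or a.cost_musd < b.cost_musd
    return no_worse and better


def pareto_front(scenarios: list[Scenario]) -> list[Scenario]:
    """Keep only scenarios that no other scenario dominates."""
    return [s for s in scenarios if not any(dominates(o, s) for o in scenarios)]


scenarios = [
    Scenario("A", 300, 10.0),
    Scenario("B", 280, 10.5),
    Scenario("C", 320, 9.8),
    Scenario("D", 310, 10.2),  # slower and costlier than A, so dominated
]
print([s.name for s in pareto_front(scenarios)])  # ['A', 'B', 'C']
```

The client then picks among A, B, and C according to whether speed or cost matters more; D never needs to be presented.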

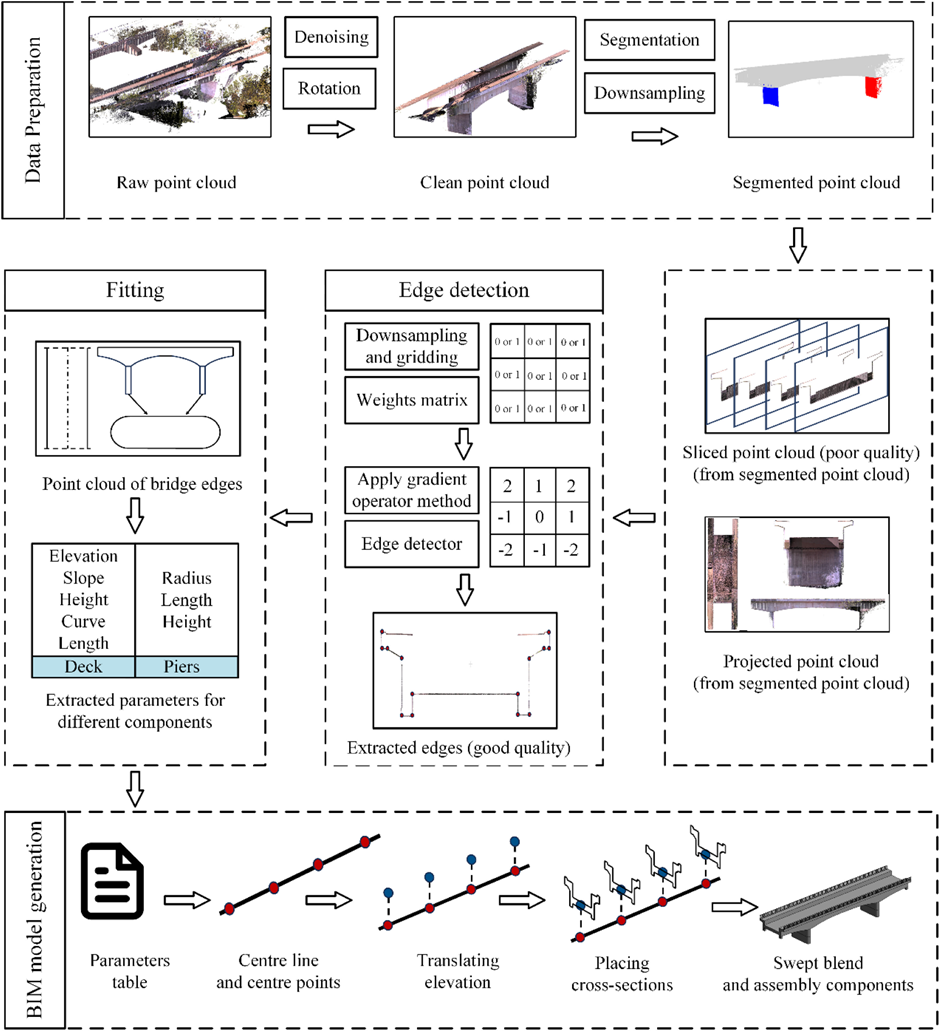

As the project moves to the construction site, keeping the as‑built status up to date becomes critical. A deep neural network that automatically converts point clouds into a semantic BIM model segmented by major element classes accelerates delivery of the as‑built model by nearly one third compared with manual tracing [17, p. 104289]. The conversion algorithm is illustrated in figure 3. Such a digital twin is fed straight back into the planning loop: detected deviations recalibrate the schedule and procurement volumes, and the generative agent proposes corrective scenarios to minimize accumulated delays.

Fig. 3. Process Flow for Bridge Component Modeling Using Point Cloud Data and BIM Generation [17, p. 104289]
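Feeding the as-built model back into planning presupposes some rule for deciding which elements deviate from the design. As a minimal sketch, assume the network has already segmented the point cloud and matched each as-built element to a planned element ID; the hypothetical function below then flags elements whose centroids are displaced beyond a tolerance or that have not yet been captured. The 5 cm tolerance and the element IDs are illustrative only.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]


def flag_deviations(planned: Dict[str, Point], as_built: Dict[str, Point],
                    tolerance_m: float = 0.05) -> Dict[str, Optional[float]]:
    """Return element IDs that are out of tolerance (deviation in m) or missing (None)."""
    flagged: Dict[str, Optional[float]] = {}
    for element_id, planned_xyz in planned.items():
        built_xyz = as_built.get(element_id)
        if built_xyz is None:
            flagged[element_id] = None  # not captured in the scan yet
            continue
        deviation = math.dist(planned_xyz, built_xyz)  # Euclidean distance, Python 3.8+
        if deviation > tolerance_m:
            flagged[element_id] = deviation
    return flagged


planned = {"C1": (0.0, 0.0, 0.0), "C2": (5.0, 0.0, 0.0), "B1": (0.0, 0.0, 3.0)}
as_built = {"C1": (0.01, 0.0, 0.0), "C2": (5.12, 0.0, 0.0)}
print(flag_deviations(planned, as_built))  # C2 is about 0.12 m off; B1 is missing
```

The flagged dictionary is the kind of input a scheduling agent could use to shift dependent activities or reorder procurement.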

Practical effects are especially pronounced in large‑scale residential construction, where every saved minute and dollar is multiplied by the number of units. In West Texas, the startup SHAIPES.ai, which combines AI‑driven layout generation with expanded polystyrene panels, assembled its first house from 189 panels in six weeks at a lower budget than a conventional framed structure [18]. In Austin, ICON uses its proprietary generative library, CODEX, alongside robotic concrete printing to bring a dozen two‑story homes to market; printing the shell takes only days, and prices in the mid‑$300k range compete with standard developer offerings while delivering superior building‑envelope energy performance [19]. Taken together, these cases demonstrate how generative AI closes the loop, from specifications and scheduling to as‑built condition and back, delivering reliable savings of time, materials, and capital at scale.

Reliable deployment of generative AI begins with meticulous data preparation. Geometry, cost estimates, material classifiers, and regulations are often stored separately; the initial task is therefore to cleanse them, harmonize them into standard units of measure, and verify compliance with pertinent standards early. Once element semantics are enriched with international classification codes and the BIM model is free of clashes, the principal reason pilot AI projects fail is largely eliminated.
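The harmonization step can be sketched as a per-record check that converts quantities to SI units and verifies that a classification code is present and well formed. The Python below is illustrative: the conversion table covers only a few units, and the code pattern is an assumed Uniclass-style format (for example, `EF_25_10`), not a validator for any particular classification standard.

```python
import re

# Illustrative conversion table: source unit -> (SI unit, multiplier).
TO_SI = {
    "mm": ("m", 0.001), "cm": ("m", 0.01), "m": ("m", 1.0), "ft": ("m", 0.3048),
    "ft2": ("m2", 0.09290304), "m2": ("m2", 1.0),
    "yd3": ("m3", 0.764554857984), "m3": ("m3", 1.0),
    "lb": ("kg", 0.45359237), "kg": ("kg", 1.0),
}

# Assumed Uniclass-style code: two-letter table prefix plus 1-4 two-digit groups.
CODE_PATTERN = re.compile(r"^[A-Z][A-Za-z]_\d{2}(?:_\d{2}){0,3}$")


def harmonize(record: dict) -> tuple[dict, list[str]]:
    """Convert a quantity record to SI units and report data-quality problems."""
    problems = []
    unit = record.get("unit")
    if unit in TO_SI:
        si_unit, factor = TO_SI[unit]
        normalized = {**record, "quantity": record["quantity"] * factor, "unit": si_unit}
    else:
        problems.append(f"unknown unit: {unit!r}")
        normalized = dict(record)
    code = record.get("class_code") or ""
    if not CODE_PATTERN.match(code):
        problems.append(f"missing or malformed classification code: {code!r}")
    return normalized, problems


print(harmonize({"id": "W-101", "quantity": 2500, "unit": "mm", "class_code": "EF_25_10"}))
print(harmonize({"id": "S-7", "quantity": 10, "unit": "yd3", "class_code": None}))
```

Records that come back with a non-empty problem list would be held out of the generative pipeline until they are fixed.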

Next, one defines the metrics by which the system will evaluate its generated solutions. In construction practice, the basic set includes net present cost, total carbon footprint, and specific energy consumption. Firms that augment traditional financial metrics with environmental and operational indicators obtain more accurate feedback already at the conceptual stage, significantly improving the validity of investment forecasts and facilitating dialogue with regulatory authorities.
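The three metrics (net present cost, total carbon footprint, and specific energy consumption) can be combined in many ways. One simple, commonly used option, assumed here rather than taken from the sources, is to compute net present cost as capital cost plus discounted operating costs and then rank options by a weighted sum of min-max normalized metrics, lower being better. The option values and weights below are hypothetical.

```python
def net_present_cost(capex: float, annual_opex: float, rate: float, years: int) -> float:
    """Capital cost plus operating costs discounted at `rate` over `years` years."""
    return capex + sum(annual_opex / (1 + rate) ** t for t in range(1, years + 1))


def rank_options(options: list[dict], weights: dict[str, float]) -> list[dict]:
    """Sort options by a weighted sum of min-max normalized metrics (lower is better)."""
    lo = {k: min(o[k] for o in options) for k in weights}
    hi = {k: max(o[k] for o in options) for k in weights}

    def score(option: dict) -> float:
        total = 0.0
        for k, w in weights.items():
            span = hi[k] - lo[k]
            # A metric on which all options tie contributes nothing to the ranking.
            total += w * ((option[k] - lo[k]) / span if span > 0 else 0.0)
        return total

    return sorted(options, key=score)


options = [  # hypothetical design options
    {"name": "A", "npc_usd": 1.20e6, "carbon_t": 850, "energy_kwh_m2": 95},
    {"name": "B", "npc_usd": 1.05e6, "carbon_t": 980, "energy_kwh_m2": 110},
    {"name": "C", "npc_usd": 1.30e6, "carbon_t": 700, "energy_kwh_m2": 80},
]
weights = {"npc_usd": 0.4, "carbon_t": 0.4, "energy_kwh_m2": 0.2}
print([o["name"] for o in rank_options(options, weights)])  # ['C', 'A', 'B']
```

A weighted sum is transparent and easy to explain to clients and regulators, but it hides trade-offs; presenting the Pareto-optimal options alongside the ranking is a common complement.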

The generation process itself is structured as a cyclical interaction between the model and the human. The algorithm produces a suite of options, the expert marks the acceptable ones, and the system refines its preferences, narrowing the search space. This iterative approach preserves the designer's creative authority and at the same time reduces the risk of hidden non‑conformities: statistical exploration is combined with domain logic, and each revision adds experiential knowledge to the model.
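The model-expert cycle can be illustrated with a deliberately simple stand-in for preference learning: sample options within parameter ranges, let an expert predicate accept some of them, and shrink each range to the span of the accepted options. The parameter names (`floors`, and `wwr` for window-to-wall ratio) and the acceptance rule are hypothetical; production systems would more likely learn a preference model than a bounding box.

```python
import random
from typing import Callable, Dict, Tuple

Ranges = Dict[str, Tuple[float, float]]


def refine_ranges(ranges: Ranges, expert_accepts: Callable[[dict], bool],
                  rounds: int = 3, samples: int = 100, seed: int = 0) -> Ranges:
    """Narrow parameter ranges to the span of options an expert accepts."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    for _ in range(rounds):
        options = [{k: rng.uniform(lo, hi) for k, (lo, hi) in ranges.items()}
                   for _ in range(samples)]
        accepted = [o for o in options if expert_accepts(o)]
        if not accepted:
            break  # nothing acceptable: keep the current ranges
        ranges = {k: (min(o[k] for o in accepted), max(o[k] for o in accepted))
                  for k in ranges}
    return ranges


# Hypothetical expert rule: at most 12 floors and a window-to-wall ratio of 0.3-0.5.
def expert(option: dict) -> bool:
    return option["floors"] <= 12 and 0.3 <= option["wwr"] <= 0.5


print(refine_ranges({"floors": (3, 20), "wwr": (0.2, 0.9)}, expert))
```

Each round leaves the expert in control of what counts as acceptable, while the sampler concentrates later proposals in the region the expert has endorsed.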

The final phase involves reintegration of the selected solution into the information-modeling environment. Architectures such as Text2BIM convert a free‑form technical brief into API calls for the design package, automatically construct a semantically layered three‑dimensional model, execute rule checks, and, in a few iterations, bring the project to a level suitable for subsequent detailed design. Because changes are made directly within the source IFC container, downstream cost, carbon, and scheduling systems immediately receive up‑to‑date data, thereby closing the continuous cycle of data → generation → validation → BIM.
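The rule checks executed before a generated model is committed back to the BIM environment can be sketched as a table of minimum-value rules applied per element category. The rules and thresholds below are hypothetical placeholders, not code requirements and not Text2BIM's actual checker; the point is that a generated model is accepted only when the violation list is empty.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    id: str
    category: str
    props: dict = field(default_factory=dict)


# Hypothetical minimum-value rules: (category, property, minimum, message).
RULES = [
    ("Room", "area_m2", 9.0, "room area below minimum"),
    ("Room", "ceiling_height_m", 2.5, "ceiling height below minimum"),
    ("Door", "clear_width_m", 0.85, "door clear width below minimum"),
]


def check(elements, rules=RULES):
    """Return (element_id, message) pairs for every failed or unverifiable rule."""
    violations = []
    for element in elements:
        for category, prop, minimum, message in rules:
            if element.category != category:
                continue
            value = element.props.get(prop)
            if value is None:
                violations.append((element.id, f"missing {prop}"))
            elif value < minimum:
                violations.append((element.id, f"{message}: {value} < {minimum}"))
    return violations


model = [
    Element("R1", "Room", {"area_m2": 12.0, "ceiling_height_m": 2.7}),
    Element("R2", "Room", {"area_m2": 7.5, "ceiling_height_m": 2.7}),
    Element("D1", "Door", {"clear_width_m": 0.80}),
]
violations = check(model)
print(violations)
print("commit to BIM" if not violations else "return to generator")
```

Treating a missing property as a violation, rather than silently passing it, mirrors the point that attribute gaps can produce false optima.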

Although the effectiveness of generative models has been demonstrated, the accuracy of their results depends largely on the quality of the input data. Any uncertainty in geometry, incorrect material classifiers, or missing regulatory parameters will immediately manifest as false optima. Even small attribute gaps can lead the algorithm to generate incorrect specifications, underestimate structural loads, and distort delivery schedules. Organizational discipline in collecting, validating, and updating data is therefore another critical condition for the dependability of the entire digital workflow.

The second risk domain relates to the nature of the algorithms themselves. Most generative systems are black-box models whose internal parameters cannot easily be interpreted using standard engineering validation approaches. This opacity makes it difficult to review load-bearing structures and energy-efficiency solutions and slows down official certification. Companies are therefore increasingly computing auxiliary explainability metrics, recording intermediate iteration steps, and creating sets of test cases that demonstrate the extent to which a generated solution complies with established standards without full disclosure of the model.

Special attention must be paid to legal aspects. Generative intelligence combines data of diverse provenance, and questions of copyright over the results of such compilation remain unresolved. It is unclear who owns a unique architectural solution generated by a system based on third‑party images or code, and who bears responsibility for economic and technological risks if hidden errors cause damage to a built asset. While regulators currently treat AI as an assistive tool, the burden of liability effectively falls on the design team, necessitating transparent documentation of algorithmic steps and explicit recording of human decision points.

Finally, the broad adoption of generative methods raises social concerns. Automation of routine tasks frees specialists for creative work but simultaneously heightens anxiety over job displacement and the devaluation of expertise. To balance benefits and potential repercussions, organizations are creating new roles at the intersection of IT and engineering, revising their training programs, and establishing frameworks for ethical use. In doing so, they create a context in which the algorithm remains a powerful assistant while the human serves as the ultimate arbiter, retaining responsibility and creative leadership.

Conclusion

In light of the foregoing analysis, the application of generative artificial intelligence in building design represents not merely an evolution of tools but a qualitative leap in the methodology of finding optimal solutions. The integration of diffusion models, GAN architectures, deep reinforcement learning methods, and large language models into the information-modeling environment transforms BIM platforms from passive data repositories into active centers of statistical creativity. This combination enables a multi-criteria, iterative process: from the early generation of volumetric planning schemes with climate considerations, through automated material selection, scheduling, and logistics, to real-time updating of as‑built models on the construction site.

Practical case studies, from adaptive façade design to topology optimization of load‑bearing elements and comprehensive project‑portfolio management, confirm that generative AI extends beyond accelerating routine computations to forming a complete digital loop of data → generation → validation → BIM. It reduces schedule and budget overruns while improving the quality of solutions across multiple interrelated criteria: energy, environment, and economics. Proper deployment of generative AI rests on trustworthy data and transparent algorithms. Valid and reproducible results require prepared geometry, material, and regulatory inputs; unified element semantics; and clearly defined evaluation metrics. At the same time, humans continue to play a key role in creative direction and final engineering decision‑making. Thus, generative artificial intelligence, in conjunction with the BIM environment, opens up new horizons for the comprehensive optimization of design processes in construction, reshaping the very nature of design and laying the groundwork for more sustainable, efficient, and adaptive building solutions.
